- The paper introduces an iterative fact verification system that leverages LLMs to generate and answer questions for enhanced claim verification.

- The paper compares its dynamic approach with traditional static pipelines, reporting up to a 4.9 F1 score improvement on medical datasets.

- The paper incorporates predicate logic and varied evidence sources, offering transparent and explainable reasoning in verifying medical claims.

Step-by-Step Fact Verification System for Medical Claims

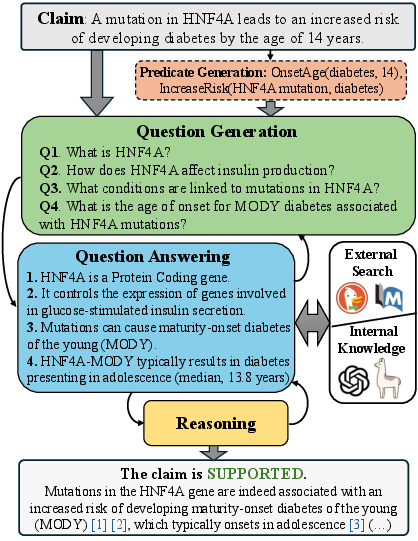

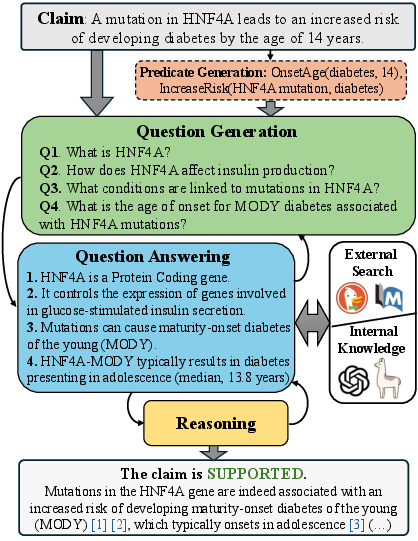

The paper presents a step-by-step fact verification system for medical claims. It aims to improve veracity prediction by systematically generating and answering questions to gather evidence, using LLMs to replace the traditional static pipeline with a dynamic and explainable process.

System Overview

The authors propose an iterative fact verification (FV) approach using LLMs, contrasting it with traditional FV systems that use a static three-part pipeline. Traditional systems chain three stages: document retrieval with methods such as BM25 or semantic search, evidence extraction using sentence embeddings, and veracity prediction with encoder-only inference models such as DeBERTa.
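To make the static three-part pipeline concrete, here is a minimal sketch of its shape. It is not the paper's implementation: a simple term-overlap score stands in for BM25 and for sentence embeddings, and `toy_verdict` is a hypothetical rule standing in for an inference model such as DeBERTa. All function names and the example corpus are assumptions made for illustration.

```python
import re
from typing import Callable, List


def tokenize(text: str) -> List[str]:
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def overlap(query: str, text: str) -> int:
    """Count distinct query terms found in the text (toy stand-in for BM25 or embeddings)."""
    return len(set(tokenize(query)) & set(tokenize(text)))


def retrieve_documents(claim: str, corpus: List[str], k: int = 2) -> List[str]:
    """Stage 1: rank documents against the claim and keep the top k."""
    return sorted(corpus, key=lambda doc: overlap(claim, doc), reverse=True)[:k]


def select_evidence(claim: str, documents: List[str], k: int = 2) -> List[str]:
    """Stage 2: split documents into sentences and keep the k closest to the claim."""
    sentences = [s.strip() for doc in documents for s in doc.split(".") if s.strip()]
    return sorted(sentences, key=lambda s: overlap(claim, s), reverse=True)[:k]


def static_pipeline(claim: str, corpus: List[str],
                    predict_verdict: Callable[[str, List[str]], str]) -> str:
    """Run the three stages once, in a fixed order, with no feedback between them."""
    documents = retrieve_documents(claim, corpus)
    evidence = select_evidence(claim, documents)
    return predict_verdict(claim, evidence)  # Stage 3: veracity prediction


def toy_verdict(claim: str, evidence: List[str]) -> str:
    """Hypothetical rule: a negation in the top evidence sentence refutes the claim."""
    if not evidence:
        return "NOT ENOUGH INFO"
    return "REFUTED" if "not" in tokenize(evidence[0]) else "SUPPORTED"


# Made-up example data, for illustration only.
corpus = [
    "Vitamin C does not prevent the common cold. It may shorten colds slightly.",
    "Aspirin reduces fever.",
    "Bananas contain potassium.",
]
claim = "Vitamin C prevents the common cold"
print(static_pipeline(claim, corpus, toy_verdict))
```

Each stage runs exactly once and cannot request more context, which is the limitation the iterative approach is meant to address.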

In contrast, the proposed system iteratively generates and answers questions, gathering more context and knowledge before predicting the veracity of the claim. This makes the reasoning process explainable. The approach is evaluated on three medical fact-checking datasets using different LLMs, external web search, and structured reasoning through logic predicates.

Figure 1: The step-by-step fact verification system used in our study iteratively collects additional knowledge and evidence until it can predict a veracity verdict.

Empirical Findings

The paper reports empirical results in which the step-by-step FV systems outperform traditional pipelines on domain-specific datasets, as measured by F1 score.

- Dataset Performance: The system achieved an F1 score improvement of up to 4.9 points over traditional systems on the medical datasets HealthFC, CoVERT, and SciFact. This suggests that the LLM-based iterative approach helps with claims that require deeper contextual understanding and reasoning.

- Knowledge Source Influence: The study shows that the choice between internal LLM knowledge and external web sources affects verification performance. Web search notably improved results on datasets with more technical claims (SciFact), while internal knowledge sufficed for simpler health-related queries.

- Predicate Logic Application: Incorporating first-order logic predicates into the verification process increased precision for some datasets. However, this approach sometimes led to recall degradation due to problems with overspecified predicates.
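The overspecification problem in the last point can be illustrated with a small sketch. Everything here is an assumption made for illustration: the `Predicate` type, the `Treats` predicate, and the exact lookup in a toy fact set, which stands in for whatever evidence check a real system would use. The point is only that a claim is accepted when all of its predicates are confirmed, so an overly specific predicate can cause a true claim to be missed.

```python
from typing import Iterable, NamedTuple, Set, Tuple


class Predicate(NamedTuple):
    """A hypothetical logic predicate: a name plus its arguments."""
    name: str
    args: Tuple[str, ...]


def claim_supported(predicates: Iterable[Predicate], known_facts: Set[Predicate]) -> bool:
    """Accept the claim only if every predicate is confirmed (a conjunction)."""
    return all(p in known_facts for p in predicates)


# Toy evidence store; exact matching is an assumption of this sketch.
known_facts = {Predicate("Treats", ("ibuprofen", "headache"))}

# A predicate at the same level of detail as the evidence is confirmed.
general = [Predicate("Treats", ("ibuprofen", "headache"))]

# An overspecified predicate carries details the evidence never states,
# so it cannot be confirmed even though the underlying claim is true.
overspecified = [Predicate("Treats", ("ibuprofen 400 mg twice daily", "headache"))]

print(claim_supported(general, known_facts))
print(claim_supported(overspecified, known_facts))
```

Requiring every predicate to hold makes a positive verdict harder to reach, which is consistent with higher precision and lower recall, although the sketch does not reproduce the paper's measurements.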

System Architecture

The system architecture integrates multiple components to iteratively refine the verification process:

- Question Generation: Initial and follow-up questions are systematically generated to dissect and explore the claim deeply.

- Evidence Collection: Evidence is retrieved from a predefined knowledge source, either the LLM's internal knowledge or web search. The paper also tests predicate logic as a way to structure evidence retrieval.

- Reasoning and Verdict Prediction: A reasoning module evaluates the completeness of evidence before deciding the claim's truthfulness. The process is traceable and generates explanations based on iterative question-answer pairs.
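The three components above can be sketched as a single loop. This is a minimal sketch under stated assumptions, not the authors' code: `ask`, `answer`, and `judge` are hypothetical callables that would wrap LLM prompts or a web search in a real system, the `max_steps` cap and the `"NOT ENOUGH INFO"` fallback label are illustrative choices, and the toy stubs return canned values so the example runs without any external service.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Tuple

QAPairs = List[Tuple[str, str]]


@dataclass
class Trace:
    """Record of one verification run; the question-answer pairs form the explanation."""
    claim: str
    qa_pairs: QAPairs = field(default_factory=list)
    verdict: str = "NOT ENOUGH INFO"  # fallback label, an assumption of this sketch


def verify_step_by_step(
    claim: str,
    ask: Callable[[str, QAPairs], str],               # question generation
    answer: Callable[[str], str],                     # evidence collection
    judge: Callable[[str, QAPairs], Optional[str]],   # reasoning and verdict prediction
    max_steps: int = 5,
) -> Trace:
    """Collect question-answer pairs until the judge can commit to a verdict."""
    trace = Trace(claim)
    for _ in range(max_steps):
        question = ask(claim, trace.qa_pairs)
        trace.qa_pairs.append((question, answer(question)))
        verdict = judge(claim, trace.qa_pairs)  # None means the evidence is still incomplete
        if verdict is not None:
            trace.verdict = verdict
            break
    return trace


# Toy stubs standing in for LLM or web-search calls.
def toy_ask(claim: str, qa_pairs: QAPairs) -> str:
    return f"Question {len(qa_pairs) + 1} about: {claim}"


def toy_answer(question: str) -> str:
    return "canned answer"


def toy_judge(claim: str, qa_pairs: QAPairs) -> Optional[str]:
    # Pretend two answered questions are enough evidence to decide.
    return "SUPPORTED" if len(qa_pairs) >= 2 else None


trace = verify_step_by_step("Example claim", toy_ask, toy_answer, toy_judge)
print(trace.verdict, len(trace.qa_pairs))
```

Keeping the question-answer pairs in the returned `Trace` is what makes the run inspectable: the verdict can be read alongside the evidence that led to it.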

Limitations and Ethical Considerations

The FV system described in the paper relies heavily on LLMs, which can produce misleading information, so human oversight remains necessary in sensitive domains such as healthcare. The reliance on external APIs can also make the system slower than those using integrated models, a trade-off between accuracy and efficiency that matters for real-time applications.

Conclusion

The study advances the FV field by introducing a more dynamic, iterative, and explainable approach to verifying complex medical claims. While traditional systems offer speed and simplicity, the step-by-step approach exposes its intermediate reasoning, which is particularly useful for domain-specific fact-checking. The findings encourage further exploration of how LLM-based systems can refine domain-specific verification, possibly by integrating structured sources such as knowledge graphs in future research.