1. Introduction

Real-time object detection has progressed rapidly since the introduction of the You Only Look Once (YOLO) framework (Redmon et al., 2015). YOLO recast object detection as a single regression problem in which one network pass yields both class probabilities and bounding-box coordinates, which makes it faster than region-proposal-based methods. This review examines the evolution of YOLO architectures, with particular emphasis on the incorporation of attention mechanisms, culminating in a discussion of YOLOv12 (Tian et al., 18 Feb 2025). Attention is integrated to cope with increasingly complex detection tasks by selectively focusing on pertinent image regions, with the aim of improving both accuracy and efficiency (Bardwell-Evans et al., 2021). However, these mechanisms add computational cost that must be controlled to maintain real-time performance. This review synthesizes existing research, highlights key advancements, and outlines future research directions in attention-driven real-time object detection.

2. Foundational YOLO Architectures: From v1 to v4

The original YOLO architecture (Redmon et al., 2015) established a new paradigm for object detection by using a single convolutional neural network (CNN) to predict bounding boxes and class probabilities simultaneously, in sharp contrast to two-stage detectors. This unified approach allowed high-speed processing and made the model suitable for real-time applications. The initial YOLO network, comprising 24 convolutional layers and 2 fully connected layers, also generalized well across visual domains.
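To make the "single regression" framing concrete, the sketch below computes the size of the tensor that the network regresses in one pass. It assumes the configuration reported in the original paper for PASCAL VOC (a 7 x 7 grid, 2 boxes per cell, 20 classes); the function name is illustrative and not taken from any YOLO codebase.

```python
def yolo_v1_output_shape(grid_size=7, boxes_per_cell=2, num_classes=20):
    """Shape of the YOLOv1 prediction tensor: S x S x (B * 5 + C)."""
    per_box = 5  # x, y, w, h, confidence
    depth = boxes_per_cell * per_box + num_classes
    return (grid_size, grid_size, depth)

shape = yolo_v1_output_shape()
total_values = shape[0] * shape[1] * shape[2]
print(shape, total_values)  # (7, 7, 30) 1470
```

Every grid cell therefore carries its own box coordinates, confidences, and class scores, which is why no separate proposal stage is needed.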

Successive versions of YOLO built upon this foundation, progressively improving both accuracy and speed. YOLOv3, as detailed in "YOLOv3: An Incremental Improvement" (Redmon et al., 2018), introduced multi-scale predictions through a feature-pyramid-style design that predicts at three scales, enabling the detection of objects of varying sizes. This change notably improved the detection of smaller objects and boosted localization accuracy. Furthermore, YOLOv3 refined bounding box prediction: it used K-means clustering on the training dataset to choose anchor box sizes, which reduces localization errors, and it adopted logistic regression for objectness scores.
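The anchor selection step can be sketched as K-means over (width, height) pairs with 1 - IoU as the distance, so that large and small boxes are treated comparably. This is a minimal illustration under stated assumptions: the helper names, the toy box sizes, the seed, and the iteration cap are all invented here and do not come from the YOLO papers or code.

```python
import random

def wh_iou(box, centroid):
    """IoU of two boxes given as (width, height), both anchored at the origin."""
    inter = min(box[0], centroid[0]) * min(box[1], centroid[1])
    union = box[0] * box[1] + centroid[0] * centroid[1] - inter
    return inter / union

def kmeans_anchors(boxes, k, iterations=50, seed=0):
    """Cluster (w, h) pairs; the nearest centroid is the one with highest IoU."""
    rng = random.Random(seed)
    centroids = rng.sample(boxes, k)
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for box in boxes:
            best = max(range(k), key=lambda i: wh_iou(box, centroids[i]))
            clusters[best].append(box)
        new_centroids = []
        for i, members in enumerate(clusters):
            if not members:  # keep the old centroid if a cluster empties
                new_centroids.append(centroids[i])
                continue
            mean_w = sum(w for w, _ in members) / len(members)
            mean_h = sum(h for _, h in members) / len(members)
            new_centroids.append((mean_w, mean_h))
        if new_centroids == centroids:  # assignments have stabilized
            break
        centroids = new_centroids
    return sorted(centroids, key=lambda wh: wh[0] * wh[1])

# Toy (width, height) data in pixels, invented for illustration.
boxes = [(10, 12), (12, 10), (11, 11), (50, 60), (55, 58), (120, 200), (130, 190)]
anchors = kmeans_anchors(boxes, k=3)
print(anchors)
```

Using IoU rather than Euclidean distance avoids penalizing large boxes more heavily than small ones for the same relative mismatch.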

YOLOv4, described in "YOLOv4: Optimal Speed and Accuracy of Object Detection" (Bochkovskiy et al., 2020), marked a significant milestone by integrating several state-of-the-art techniques. It adopted CSPDarknet53 as its backbone network, using Cross Stage Partial (CSP) connections to improve learning capability while reducing computational complexity and memory usage. Spatial Pyramid Pooling (SPP) was incorporated to enlarge the receptive field and pool context at several scales without resizing inputs. The Path Aggregation Network (PAN) complemented the top-down feature pyramid, which carries high-level semantic features towards the shallow layers, with an additional bottom-up path that shortens the route for low-level localization features to reach the deeper layers, contributing to better localization and classification at various image scales. These enhancements solidified YOLO's position as a preferred choice for real-time detection tasks.
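As an illustration of the SPP idea, the sketch below pools one feature map with several stride-1 max-pooling windows and concatenates the results along the channel axis. It assumes kernel sizes of 5, 9, and 13, and uses NumPy on a (C, H, W) array; the function names are hypothetical and this is not the darknet implementation.

```python
import numpy as np

def max_pool_same(x, kernel):
    """Stride-1 max pooling over (C, H, W) with padding that keeps H and W."""
    pad = kernel // 2
    padded = np.pad(x, ((0, 0), (pad, pad), (pad, pad)), constant_values=-np.inf)
    windows = np.lib.stride_tricks.sliding_window_view(
        padded, (kernel, kernel), axis=(1, 2)
    )
    return windows.max(axis=(-2, -1))

def spp_block(x, kernels=(5, 9, 13)):
    """Concatenate the input with its pooled versions along the channel axis."""
    return np.concatenate([x] + [max_pool_same(x, k) for k in kernels], axis=0)

features = np.random.default_rng(0).standard_normal((8, 13, 13))
out = spp_block(features)
print(out.shape)  # (32, 13, 13)
```

The spatial size is unchanged while each position now also sees the strongest response in progressively larger neighbourhoods.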

3. Integrating Attention Mechanisms in YOLO Architectures

The integration of attention mechanisms represents a pivotal step in the evolution of YOLO architectures, driven by the need to enhance object detection precision and robustness, especially in complex scenes. Attention mechanisms enable the model to dynamically focus on the most relevant parts of the input image, thereby improving feature extraction and suppressing irrelevant background noise.

YOLOv4 incorporated a modified Spatial Attention Module (SAM) (Bochkovskiy et al., 2020) designed to refine feature maps by emphasizing crucial spatial details. By re-weighting the feature map position by position, the SAM guides the network to concentrate on meaningful features and thereby improves detection performance.
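A rough sketch of this kind of gating follows: a convolution produces logits, a sigmoid turns them into a mask in (0, 1), and the mask multiplies the input features. The 1x1 convolution, the random weights, and the function names are assumptions made for illustration; the exact layer configuration in YOLOv4 may differ.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def pointwise_attention(x, weight, bias):
    """x: (C, H, W); weight: (C, C) acting as a 1x1 convolution; bias: (C,)."""
    # A 1x1 convolution is a per-pixel linear map over channels.
    logits = np.einsum("oc,chw->ohw", weight, x) + bias[:, None, None]
    mask = sigmoid(logits)  # values in (0, 1), one per channel and pixel
    return x * mask         # re-weight the features element-wise

rng = np.random.default_rng(0)
x = rng.standard_normal((4, 6, 6))
weight = rng.standard_normal((4, 4)) * 0.5  # stand-in for learned weights
bias = np.zeros(4)
refined = pointwise_attention(x, weight, bias)
print(refined.shape)  # (4, 6, 6)
```

Because the mask lies strictly between 0 and 1, the module can only attenuate features, which is how it suppresses less relevant positions.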

Further advancements have explored various attention mechanisms, including spatial attention, channel attention, and self-attention, each offering unique advantages. Spatial attention focuses on identifying salient spatial regions, while channel attention emphasizes informative feature channels. Self-attention mechanisms, inspired by transformers, capture long-range dependencies within the image, enabling a more holistic understanding of the scene.
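To show how self-attention captures long-range dependencies, the sketch below flattens a feature map into one token per spatial position and applies scaled dot-product attention, so every position can draw on every other. The projection matrices are random stand-ins for learned weights, and all names and sizes are illustrative assumptions.

```python
import numpy as np

def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)  # subtract the max for stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(tokens, w_q, w_k, w_v):
    """tokens: (N, D). Returns the (N, D_v) output and (N, N) attention weights."""
    q, k, v = tokens @ w_q, tokens @ w_k, tokens @ w_v
    scores = q @ k.T / np.sqrt(k.shape[-1])
    weights = softmax(scores, axis=-1)
    return weights @ v, weights

rng = np.random.default_rng(0)
feature_map = rng.standard_normal((16, 8, 8))  # (C, H, W)
tokens = feature_map.reshape(16, -1).T         # (H*W, C) = (64, 16)
w_q, w_k, w_v = (rng.standard_normal((16, 16)) * 0.1 for _ in range(3))
out, weights = self_attention(tokens, w_q, w_k, w_v)
print(out.shape, weights.shape)  # (64, 16) (64, 64)
```

The (N, N) weight matrix is the source of both the global context and the quadratic cost in the number of positions.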

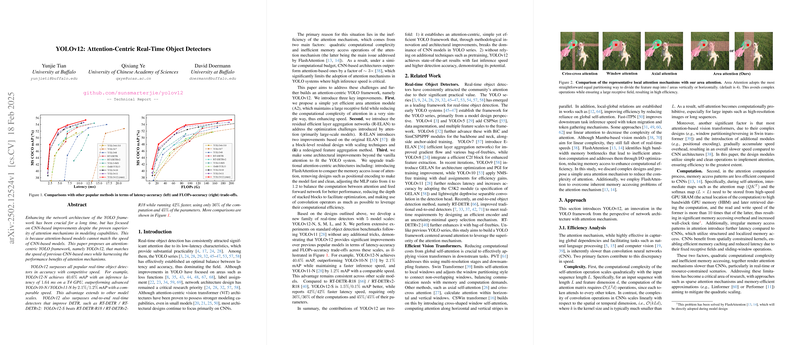

4. YOLOv12: An Attention-Centric Paradigm

YOLOv12 places attention at the center of the detector's design. Its key innovations are Area Attention and Residual Efficient Layer Aggregation Networks (R-ELAN) (Tian et al., 18 Feb 2025). Area Attention partitions the feature map into a small number of equal areas and computes attention within each area rather than across the whole map. Each area still covers a broad spatial region, so the model retains substantial object context, while the cost of attention falls because far fewer pairwise interactions are evaluated. R-ELAN integrates residual learning with layer aggregation, providing an efficient mechanism for feature extraction that preserves rich contextual detail while maintaining the model's speed. The architecture consolidates multi-scale features more effectively, producing richer feature hierarchies that help resolve fine details in object detection tasks.
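The cost argument for restricting attention to areas can be sketched as follows. This is a simplified illustration, not the authors' implementation: it assumes the map is split into equal horizontal bands, uses identity projections in place of learned query, key, and value weights, and all names and sizes are invented for the example.

```python
import numpy as np

def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def area_attention(x, num_areas=4):
    """x: (H, W, D) feature map. Attention is computed inside each horizontal band."""
    h, w, d = x.shape
    assert h % num_areas == 0, "this sketch needs H divisible by num_areas"
    # (areas, tokens_per_area, D): each band of rows becomes its own sequence.
    areas = x.reshape(num_areas, (h // num_areas) * w, d)
    # Identity projections keep the sketch short; a real layer learns Q, K, V.
    scores = areas @ areas.transpose(0, 2, 1) / np.sqrt(d)
    out = softmax(scores, axis=-1) @ areas
    return out.reshape(h, w, d)

def attention_score_entries(num_tokens, num_areas=1):
    """Number of pairwise scores: areas * (tokens / areas) ** 2."""
    per_area = num_tokens // num_areas
    return num_areas * per_area * per_area

x = np.random.default_rng(0).standard_normal((16, 16, 32))
print(area_attention(x).shape)          # (16, 16, 32)
print(attention_score_entries(256))     # 65536
print(attention_score_entries(256, 4))  # 16384
```

With four areas, the number of pairwise scores drops to a quarter of the global case, while each token still attends over a quarter of the image.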

YOLOv12 is reported to outperform its predecessors on established benchmarks such as COCO and PASCAL VOC, with higher mean Average Precision (mAP) and reduced latency (Tian et al., 18 Feb 2025). The integration of Area Attention and R-ELAN addresses bottlenecks evident in earlier versions and sets a new reference point for efficient object detectors.

5. Performance Evaluation and Trade-offs

Evaluating object detection models requires comprehensive benchmarking that considers both accuracy and computational efficiency. Standard benchmarks fix the dataset, metrics, and evaluation protocol, so that results from different models can be compared objectively (Tian et al., 18 Feb 2025).
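On the efficiency side, a benchmark usually reports per-image latency measured after a few warm-up runs. The harness below is a minimal sketch of that procedure; `dummy_model` is a stand-in for a real detector, and the warm-up and run counts are arbitrary assumptions.

```python
import statistics
import time

def measure_latency_ms(infer, sample, warmup=5, runs=30):
    """Median and 95th-percentile wall-clock latency of infer(sample), in ms."""
    for _ in range(warmup):  # warm-up runs are discarded
        infer(sample)
    timings = []
    for _ in range(runs):
        start = time.perf_counter()
        infer(sample)
        timings.append((time.perf_counter() - start) * 1000.0)
    timings.sort()
    p95 = timings[min(len(timings) - 1, int(0.95 * len(timings)))]
    return statistics.median(timings), p95

def dummy_model(pixels):
    """Stand-in for a detector; it only sums the input."""
    return sum(pixels)

median_ms, p95_ms = measure_latency_ms(dummy_model, list(range(10_000)))
print(f"median {median_ms:.3f} ms, p95 {p95_ms:.3f} ms")
```

On accelerators that execute asynchronously, the device would also need to be synchronized before each timestamp is read, otherwise the measurement only captures the time to enqueue the work.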

Mean Average Precision (mAP) is the central metric for gauging the accuracy of object detection models. It averages, over object classes, the area under each class's precision-recall curve, where a detection counts as correct if its Intersection over Union (IoU) with a ground-truth box exceeds a chosen threshold. Models like YOLOv12 are reported to improve mAP on challenging datasets. At the same time, low latency is vital for real-time applications. Optimizing model architectures and exploiting the parallel processing capabilities of modern hardware can reduce latency without sacrificing accuracy.
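The accuracy metric can be illustrated with a small sketch of IoU and of per-class Average Precision computed from ranked detections; mAP is then the mean of these AP values over classes (and, in some protocols, over several IoU thresholds). The function names and the three-detection example are invented for illustration, and benchmark toolkits differ in their exact interpolation rules.

```python
def iou(a, b):
    """IoU of two boxes in (x1, y1, x2, y2) format."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    return inter / (area_a + area_b - inter)

def average_precision(is_true_positive, num_ground_truth):
    """AP from detections already sorted by descending confidence."""
    precisions, recalls = [], []
    tp = 0
    for rank, hit in enumerate(is_true_positive, start=1):
        tp += int(hit)
        precisions.append(tp / rank)
        recalls.append(tp / num_ground_truth)
    # Replace each precision with the best precision at any higher recall.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    # Sum the area under the resulting step-shaped precision-recall curve.
    ap, prev_recall = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap

print(iou((0, 0, 2, 2), (1, 1, 3, 3)))  # 1/7, about 0.143
ap = average_precision([True, False, True], num_ground_truth=2)
print(ap)                               # 5/6, about 0.833
```

In the example, the second detection is a false positive, which lowers the precision at which the second ground-truth object is eventually recovered.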

Models are released in several scales, denoted Nano (N), Small (S), Medium (M), Large (L), and Extra-Large (X), to suit different performance and resource requirements. Nano and Small models are designed for resource-constrained devices, offering faster processing at lower accuracy. Medium models balance speed and accuracy, while Large and Extra-Large models prioritize accuracy in high-performance environments.

Deployment across different hardware platforms exposes trade-offs between speed and accuracy. High-performance platforms, such as GPUs and TPUs, handle large models efficiently, offering both high speed and high accuracy. Conversely, edge devices with low-power CPUs prioritize speed and energy efficiency, which restricts model size and costs some accuracy. Techniques such as model quantization and pruning are often employed to reduce model size and inference cost under these constraints.
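Both techniques can be sketched in a few lines. The example below shows symmetric per-tensor int8 quantization and magnitude-based pruning on a random weight matrix; it is an illustration under simplifying assumptions (one scale per tensor, unstructured pruning, hypothetical function names) rather than what any particular deployment toolkit does.

```python
import numpy as np

def quantize_int8(weights):
    """Symmetric per-tensor quantization to int8; returns (q, scale)."""
    max_abs = float(np.max(np.abs(weights)))
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = np.clip(np.round(weights / scale), -127, 127).astype(np.int8)
    return q, scale

def dequantize(q, scale):
    """Map int8 codes back to approximate float32 weights."""
    return q.astype(np.float32) * scale

def prune_by_magnitude(weights, sparsity):
    """Zero out the given fraction of weights with the smallest magnitude."""
    threshold = np.quantile(np.abs(weights), sparsity)
    return np.where(np.abs(weights) < threshold, 0.0, weights)

w = np.random.default_rng(0).standard_normal((64, 64)).astype(np.float32)
q, scale = quantize_int8(w)
error = float(np.max(np.abs(dequantize(q, scale) - w)))
pruned = prune_by_magnitude(w, sparsity=0.5)
print(q.dtype, q.nbytes, w.nbytes)  # int8 4096 16384
print(error, scale / 2)             # rounding error is bounded by half a step
print(float(np.mean(pruned == 0)))  # fraction of weights set to zero
```

Quantization cuts storage by a factor of four relative to float32 at the price of a bounded rounding error, while pruning trades accuracy for sparsity that suitable runtimes can exploit.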

6. Future Directions and Challenges

Attention-centric models hold significant implications for real-time systems, enhancing efficiency and interpretability. However, challenges remain in deploying these models in resource-constrained environments. Future advancements may include developing more sophisticated attention modules that dynamically adjust their focus based on scene complexity or specific task requirements. Integrating multi-scale attention or cross-attention techniques could further enhance the model's ability to detect objects across varying scales and contexts.

The integration of self-supervised learning with attention mechanisms offers another promising direction, enabling models to better leverage large amounts of unlabeled data. Furthermore, ensuring the robustness of attention models to adversarial attacks and noisy data remains an active area of research. Addressing data bias and fairness is also crucial for the responsible deployment of attention-centric models, requiring comprehensive testing and incorporation of fairness-aware mechanisms. Continued research into efficient, robust, and fair implementations will be critical as these technologies become increasingly integrated into various sectors.

7. Conclusion

The YOLOv12 framework represents a significant advancement in real-time object detection through its use of attention mechanisms. By prioritizing relevant image regions while keeping the cost of attention low, YOLOv12 improves both accuracy and efficiency and remains viable for real-time applications. As computing power increases and machine learning techniques evolve, the YOLO framework will likely continue to adapt, incorporating emerging advances to address more complex object detection challenges. Future iterations may explore more advanced attention mechanisms, integrate contextual learning techniques, and incorporate multi-modal data to further refine detection capabilities. YOLOv12 lays a foundation for future versions that are not just faster but also more adaptable.