Here's a detailed summary of the paper you provided, focusing on its key contributions, methodology, results, and significance:

Title: SynthDetoxM: Modern LLMs are Few-Shot Parallel Detoxification Data Annotators

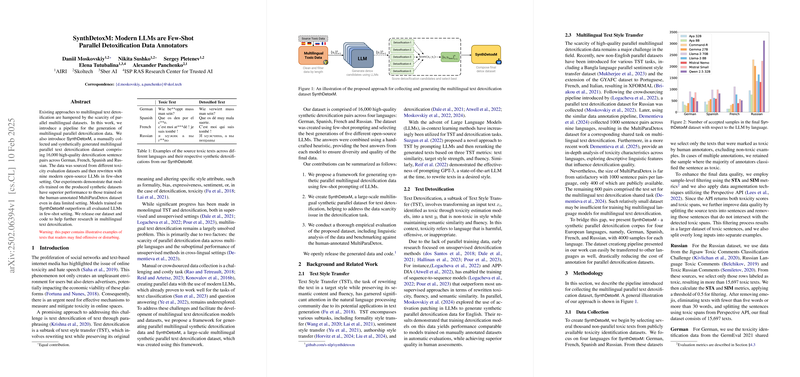

Core Idea: The paper addresses the scarcity of parallel multilingual datasets for text detoxification. It proposes a pipeline to generate synthetic parallel detoxification data using few-shot prompting of LLMs. This pipeline results in SynthDetoxM, a new multilingual dataset of high-quality detoxification sentence pairs.

Key Contributions:

- Framework for Synthetic Data Generation: The paper introduces a methodology for generating synthetic parallel multilingual detoxification data. This framework leverages the few-shot learning capabilities of modern LLMs to rewrite toxic text into non-toxic equivalents while preserving meaning.

- SynthDetoxM Dataset: The major contribution is the creation of SynthDetoxM, a large-scale multilingual synthetic parallel dataset. It contains 16,000 detoxification pairs across four languages: German, Spanish, French, and Russian (4,000 pairs per language). The dataset is created by selecting the best generations from multiple open-source LLMs, combined using a hand-crafted heuristic. The dataset and code are publicly released.

- Empirical Evaluation: The paper includes a thorough evaluation of SynthDetoxM. This evaluation involves:

  - Linguistic analysis of the dataset.

  - Benchmarking against the human-annotated MultiParaDetox dataset.

  - Automatic evaluation using metrics like Style Transfer Accuracy (STA), Content Similarity (SIM), Fluency (FL), and a combined J-score.

  - Side-by-Side (SBS) evaluation using GPT-4o to judge the quality of detoxified outputs.
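To make the combined metric concrete, here is a minimal sketch of a J-score computation. It assumes the joint score is the per-sample product of STA, SIM, and FL averaged over the evaluation set, as is common in the ParaDetox line of work; the function name `j_score` and the toy values are illustrative and not taken from the paper.

```python
from statistics import mean


def j_score(sta, sim, fl):
    """Joint score: mean over samples of STA_i * SIM_i * FL_i (assumed definition)."""
    if not (len(sta) == len(sim) == len(fl)):
        raise ValueError("metric lists must have the same length")
    if not sta:
        raise ValueError("need at least one sample")
    return mean(s * m * f for s, m, f in zip(sta, sim, fl))


# Toy per-sample scores in [0, 1]; these values are made up for illustration.
sta = [1.0, 0.5, 0.0]   # how non-toxic each output is
sim = [0.5, 0.5, 1.0]   # how much meaning each output preserves
fl = [1.0, 1.0, 1.0]    # how fluent each output is

print(j_score(sta, sim, fl))
```

Because the scores are multiplied per sample, an output that fails on any single axis (for example, a perfect copy of the toxic input with SIM = 1 but STA = 0) contributes nothing to the total.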

Methodology:

- Data Collection:

  - Non-parallel toxic texts are gathered from existing toxicity identification datasets in German, French, Spanish, and Russian.

  - Sample-level filtering is applied using STA and SIM metrics to ensure high data quality.

  - Data augmentation techniques are employed, utilizing the Perspective API to identify and isolate toxic spans within sentences.

- Parallel Data Generation:

  - Various open-source LLMs (Qwen 2.5 32B, Command-R 32B, Gemma 2 27B, Aya Expanse (32B and 8B), Mistral Small 22B, Mistral Nemo 12B, and Llama 3.1 (70B and 8B)) are used in a few-shot generation setup.

  - Few-shot examples (toxic/non-toxic pairs) are mined from a multilingual toxicity detection dataset, ranked based on STA and SIM scores to ensure high-quality detoxification and semantic preservation. For French, human annotators detoxified an initial set of sentences.

  - Generated examples are filtered to remove refusals and ensure sufficient detoxifiability.

  - Outputs from different LLMs are ranked and combined to create the final dataset, prioritizing diversity and quality.

- Evaluation:

  - Sequence-to-sequence models (mT0-XL) are trained on SynthDetoxM and compared against models trained on MultiParaDetox.

  - The quality of the generated data is assessed using STA and SIM scores from the Perspective API.

  - Automatic evaluation is performed using the metrics outlined in the original MultiParaDetox paper, including STA, SIM, FL, and J-score.

  - Side-by-Side (SBS) comparisons using GPT-4o as an evaluator are used to judge the quality of detoxified outputs from different models.
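The generation steps above can be sketched roughly as follows. This is an illustration under stated assumptions, not the paper's implementation: the prompt wording, the refusal markers, and the STA × SIM ranking key are all hypothetical stand-ins for the paper's own prompt and hand-crafted heuristic, and the STA and SIM scores are assumed to have been computed beforehand.

```python
from dataclasses import dataclass

# Hypothetical refusal markers; a real pipeline would need per-language lists.
REFUSAL_MARKERS = ("i cannot", "i can't", "as an ai")


@dataclass
class Candidate:
    model: str   # name of the LLM that produced the rewrite
    text: str    # detoxified rewrite
    sta: float   # precomputed non-toxicity score in [0, 1]
    sim: float   # precomputed similarity to the toxic source in [0, 1]


def build_few_shot_prompt(examples, toxic_text, language):
    """Assemble a few-shot prompt from (toxic, neutral) pairs; wording is illustrative."""
    parts = [f"Rewrite the toxic {language} text so that it is non-toxic "
             "while keeping its meaning."]
    for toxic, neutral in examples:
        parts.append(f"Toxic: {toxic}\nNeutral: {neutral}")
    parts.append(f"Toxic: {toxic_text}\nNeutral:")
    return "\n\n".join(parts)


def is_refusal(text):
    """Flag outputs that look like a model refusal rather than a rewrite."""
    lowered = text.lower()
    return any(marker in lowered for marker in REFUSAL_MARKERS)


def select_best(candidates):
    """Drop refusals, then keep the candidate with the highest STA * SIM (assumed key)."""
    kept = [c for c in candidates if not is_refusal(c.text)]
    if not kept:
        return None
    return max(kept, key=lambda c: c.sta * c.sim)


# Toy candidates for one toxic source sentence; scores are made up.
candidates = [
    Candidate("model_a", "I cannot help with that request.", 0.99, 0.10),
    Candidate("model_b", "Your argument does not make sense.", 0.95, 0.80),
    Candidate("model_c", "That is wrong.", 0.97, 0.40),
]
best = select_best(candidates)
print(best.model)
```

Multiplying the two scores favors rewrites that are both non-toxic and faithful to the source; a rewrite that is clean but drops most of the meaning (like `model_c` here) loses to one that balances both.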

Key Results:

- Models trained on SynthDetoxM consistently outperform those trained on the human-annotated MultiParaDetox dataset, even in data-limited settings.

- Training on the full SynthDetoxM dataset results in models that surpass the performance of most LLMs in few-shot generation setups.

- Side-by-side evaluations show a clear preference for the detoxifications produced by models trained on SynthDetoxM compared to those trained on MultiParaDetox.

- The French subset achieves automatic metric scores comparable to those of the other languages, suggesting that detoxification models trained on it would perform similarly.

- Qwen 2.5 32B tended to generate the most preferable detoxifications.
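As a rough illustration of how side-by-side judgments turn into a preference result, the sketch below tallies per-sample verdicts into win and tie rates. The verdict labels (`"a"`, `"b"`, `"tie"`) and the toy data are assumptions made for this example; the paper's actual judge prompt and output format are not reproduced here.

```python
from collections import Counter

LABELS = ("a", "b", "tie")


def sbs_rates(verdicts):
    """Return the share of 'a', 'b', and 'tie' verdicts among all judged pairs."""
    if not verdicts:
        raise ValueError("need at least one verdict")
    counts = Counter(verdicts)
    unknown = set(counts) - set(LABELS)
    if unknown:
        raise ValueError(f"unexpected verdict labels: {sorted(unknown)}")
    total = len(verdicts)
    return {label: counts[label] / total for label in LABELS}


# Toy verdicts: "a" = system A preferred, "b" = system B preferred.
verdicts = ["a", "a", "tie", "b", "a", "a", "tie", "a"]
print(sbs_rates(verdicts))
```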

Significance:

- Addresses Data Scarcity: SynthDetoxM directly addresses the lack of parallel multilingual detoxification data, enabling the development of more effective multilingual detoxification models.

- Cost-Effective Data Generation: The proposed framework provides a cost-effective alternative to manual data collection, reducing the annotation costs for parallel detoxification datasets.

- Improved Detoxification Performance: The paper demonstrates that synthetic data generated using LLMs can achieve comparable or superior performance to human-annotated data, opening up new avenues for training detoxification models.

- Multilingual Applicability: The findings and the SynthDetoxM dataset are valuable for researchers working on multilingual natural language processing, particularly in the areas of text style transfer and toxicity mitigation.

- Outperforms LLMs in Few-Shot Setting: A model fine-tuned on SynthDetoxM outperforms most LLMs prompted in a few-shot generation setup.

Limitations:

- The paper focuses only on explicit types of toxicity, not more subtle forms.

- Definitions of toxicity can vary drastically between languages.

- Computational resource constraints led to the use of smaller models for data generation.

- The evaluation would be strengthened by comparison with proprietary models.

- The work is limited by the amount of annotated non-parallel toxic data available in some of the languages.

Ethical Considerations:

- The paper acknowledges the ethical responsibilities involved in working with text detoxification, emphasizing the goal of creating a safer and more inclusive online environment. It clarifies that the goal is not to suppress free speech but to offer non-toxic alternatives, encouraging users to choose better language. The risk of misuse (e.g., generating harmful content) is recognized.