- The paper's main contribution is the JUMP framework that optimizes universal multi-prompts for cross-domain jailbreaking in LLMs, achieving high attack success rates.

- It employs iterative stages like Selector, Mutator, Constraint, and Evaluator to balance prompt effectiveness and human-readability through perplexity control.

- Experimental results validate both JUMP and its defense counterpart, DUMP, which show significant improvements over baseline methods for adversarial attack and defense.

Jailbreaking with Universal Multi-Prompts: A Detailed Analysis

This essay provides an in-depth analysis of the paper "Jailbreaking with Universal Multi-Prompts," focusing on its methodologies, experimental findings, and implications for AI research and development.

Introduction to Jailbreaking and Universal Multi-Prompts

The development of LLMs has introduced new ethical challenges, including the threat of adversarial attacks aimed at jailbreaking the models to generate harmful content. While previous research primarily optimized adversarial inputs for singular tasks, "Jailbreaking with Universal Multi-Prompts" introduces JUMP, a framework designed to optimize universal multi-prompts for effective attacks across multiple domains.

Figure 1: Framework of our proposed method, JUMP. We perform a universal jailbreak attack by optimizing universal multi-prompts, framed by a red dashed line.

The paper also introduces DUMP for defense purposes, adapting the methodology to protect models from such attacks. This approach aligns with the broader goal of enhancing LLMs’ safety and reliability.

Methodology and Algorithmic Design

The JUMP framework builds upon the previous works like BEAST and AdvPrompter, focusing on optimizing universal multi-prompts rather than single-task adversarial inputs. JUMP** uses a combination of Selector, Mutator, Constraint, and Evaluator stages to iteratively improve prompt generation.

JUMP's Optimization Process

- Selector: Randomly samples a subset of templates from a larger set, reducing computation costs during evaluation.

- Mutator: Generates new candidates by expanding existing ones with token probabilities.

- Evaluator: Computes the average cross-entropy loss across a batch of instructions, assessing each prompt's effectiveness.

- Constraint: Introduces perplexity control to ensure prompts remain human-readable and not easily detectable by defense mechanisms.

Experimental Results

Attack Success Rate (ASR) and Perplexity Trade-offs

The experiments demonstrate JUMP's superiority over baseline methods like AdvPrompter and AutoDAN in achieving higher ASR across various models, albeit with increased perplexity. Integration of the perplexity constraint showed a significant reduction in ASR, highlighting the delicate balance between effectiveness and detectability.

Figure 2: Tradeoffs between perplexity and ASR under different settings.

Impact of Initialization and Handcrafted Prompts

The transition from JUMP to JUMP++ involved using carefully designed prompts for initialization, showing improved results in terms of both ASR and perplexity. This improvement underscores the significance of initial conditions in optimizing attack strategies.

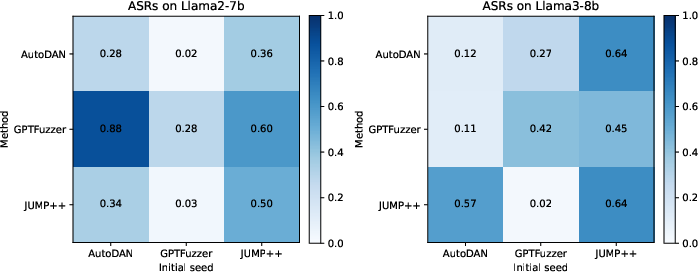

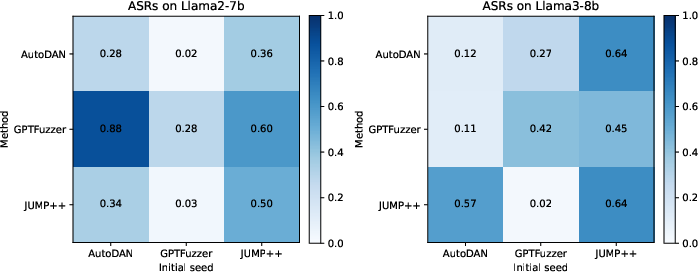

Figure 3: Ablations on the performance of three prompting methods (including JUMP++) under different types of initialization.

Transferability and Defense Implications

The adaptability of JUMP to defend scenarios introduces DUMP, which optimizes defense prompts using a similar methodology. Experiments revealed DUMP’s effectiveness against prominent attacks like AutoDAN, significantly reducing ASR compared to scenarios without defense.

Figure 4: ASR curves against AutoDAN for the three defense settings: No Defense, SmoothLLM, and DUMP.

Conclusion

The paper presents a novel approach in optimizing universal multi-prompts for jailbreaking and defending LLMs. JUMP and DUMP demonstrate the ability to handle attacks across various models, achieving high ASR while maintaining lower perplexity. These methodologies offer potent tools for both attackers and defenders, steering the conversation towards safer and more secure AI systems.

Future research should consider further optimization of the initialization process and explore additional strategies to mitigate the trade-offs between attack success rates and prompt stealthiness. As adversarial techniques continue to evolve, frameworks like JUMP and DUMP will be crucial in maintaining the integrity and trustworthiness of AI systems.