MedXpertQA: Benchmarking Expert-Level Medical Reasoning and Understanding

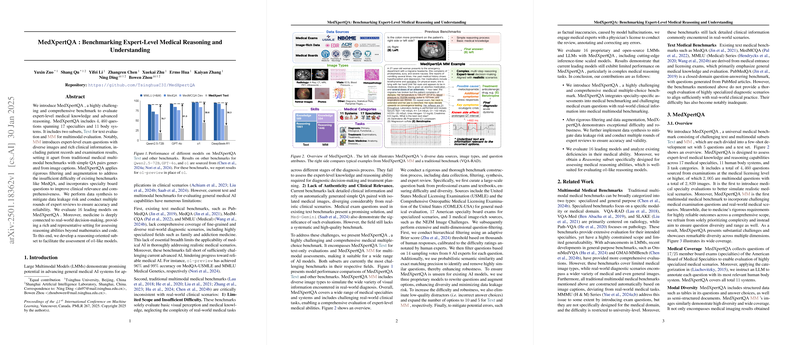

The paper introduces MedXpertQA, an advanced benchmarking framework aimed at evaluating and enhancing the capabilities of AI models in medical reasoning and understanding. MedXpertQA stands out as a highly challenging and comprehensive benchmark that includes 4,460 questions across 17 medical specialties and 11 body systems. The benchmark is structured into two subsets: one for text-only evaluations (MedXpertQA-Text) and another for multimodal assessments (MedXpertQA-Multi).

Key Contributions and Methodology

MedXpertQA addresses several inadequacies found in existing medical AI benchmarks. Current benchmarks often lack sufficient difficulty, specialty-specific evaluations, and the ability to simulate real-world diagnostic complexities. MedXpertQA overcomes these limitations by integrating expert-level exam questions that include diverse images and contextual clinical information, such as patient records and examination results.

The development of MedXpertQA involved rigorous data curation, filtering, and augmentation processes:

- Data Curation and Filtering: The authors curated questions from professional medical exams and textbooks, including USMLE, COMLEX-USA, and 17 American specialty board exams. They employed adaptive filtering using Brier scores and semantic similarity measures to ensure the selected questions challenge both humans and AI models.

- Data Synthesis and Expert Review: To mitigate data leakage risks, the paper describes a data augmentation process that involves rewriting questions and options. This was followed by multiple rounds of expert reviews to ensure the accuracy and validity of the benchmark content.

- Multimodal Assessment: MedXpertQA-Multi provides a multimodal benchmark that includes diverse image types and real-world clinical scenarios to simulate the broad spectrum of visual and textual information encountered in medical practice.

Evaluation and Results

The benchmark was used to evaluate 16 leading AI models, both proprietary and open-source, including inference-time scaled models like OpenAI's o1 model and others. The results demonstrate that current models still struggle with complex medical reasoning tasks, indicating a substantial gap in expert-level reasoning capabilities. Specifically, even the most advanced models achieved limited performance, particularly on reasoning-heavy subsets.

Implications and Future Directions

MedXpertQA has significant implications for the development of AI in healthcare. By setting a higher standard for medical AI benchmarking, it paves the way for more robust and clinically relevant AI applications. From a theoretical standpoint, the benchmark highlights the importance of integrating comprehensive and challenging evaluations to push AI boundaries in specialized domains like medicine.

Future developments could leverage the insights gained from MedXpertQA to inform reinforcement learning strategies, improve multimodal AI capabilities, and guide the design of models that better synthesize complex medical data. Additionally, MedXpertQA could serve as a template for developing benchmarks in other specialized fields that require advanced machine reasoning.

In summary, MedXpertQA represents a significant step forward in benchmarking AI for expert-level medical reasoning and understanding. Its rigorous design ensures comprehensive coverage and presents a meaningful challenge to current AI systems, driving further advancements in the field.