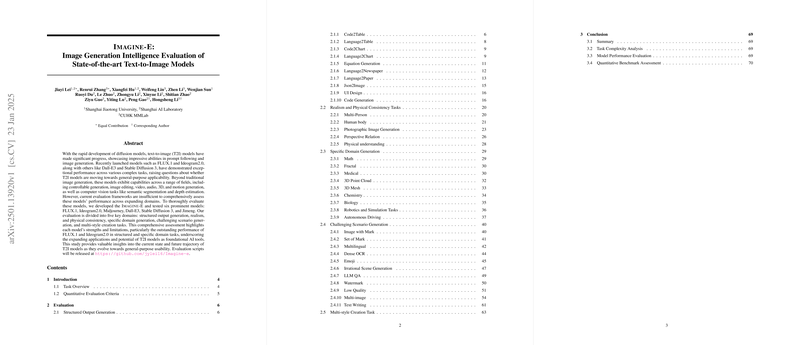

Overview of "Imagine-E: Image Generation Intelligence Evaluation of State-of-the-art Text-to-Image Models"

The paper "Imagine-E: Image Generation Intelligence Evaluation of State-of-the-art Text-to-Image Models" explores the rapidly advancing domain of text-to-image (T2I) diffusion models. Recent innovations such as FLUX.1, Ideogram2.0, Dall-E3, and Stable Diffusion 3 have shown impressive capabilities in following prompts and generating images, raising questions about their potential as general-purpose models. However, the paper argues that current evaluation frameworks fall short of comprehensively assessing models across expanding domains like controllable generation, image editing, and various computer vision tasks. To address this gap, the authors propose a novel evaluation framework called "Imagine-E" to rigorously assess the performance of six leading models in five key domains.

The assessment framework includes a diverse set of tasks:

- Structured Output Generation: This domain tests the models' ability to generate structured outputs such as tables, figures, and documents from code or natural-language prompts. These tasks are demanding because they require precise formatting and a strong grasp of the prompt's content. FLUX.1 showed exceptional performance, closely aligning with human expectations in these tasks.

- Realism and Physical Consistency: This domain focuses on models' adherence to physical laws and realism in generated images. The evaluation covers scenarios like multi-person interactions and complex body poses. FLUX.1 and Ideogram2.0 excelled in generating visually credible human figures, though minor errors in physical principles persist.

- Specific Domain Generation: The evaluation assesses models' capabilities in specialized domains including mathematics, 3D modeling, chemistry, biology, and medical imaging. FLUX.1 and Ideogram2.0 demonstrated significant utility in scientific image generation, but challenges remain, especially in depicting accurate 3D structures and mathematical concepts.

- Challenging Scenario Generation: This domain covers complex prompts involving multilingual understanding, dense text rendering, and emoji inputs. FLUX.1 and Ideogram2.0 were highly effective in these situations, particularly in generating multilingual content and interpreting emoji inputs.

- Multi-style Creation Task: The models are assessed on their ability to render over thirty distinct artistic styles and to combine elements drawn from different styles. Although all models show competence, Midjourney stands out for its superior aesthetic appeal across diverse styles.

The paper’s evaluation framework also incorporates quantitative metrics, including CLIPScore, HPSv2, and Aesthetic Score. However, these metrics often failed to align with human subjective ratings, especially in complex tasks. Evaluation with GPT-4o was more consistent with human judgments, although it still needs improvement on tasks requiring fine-grained detail analysis.
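One common way to quantify how well an automated metric "aligns" with human ratings is a rank correlation between the two sets of scores. The sketch below is only an illustration of that idea and is not taken from the paper: it assumes Spearman rank correlation as the agreement measure, and the function names (`average_ranks`, `pearson`, `spearman`) and all numbers are hypothetical placeholders rather than reported results.

```python
from statistics import mean


def average_ranks(values):
    """Return 1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        # Extend the run while the next sorted value ties with the current one.
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        shared = (start + end) / 2 + 1  # mean of 1-based positions in the run
        for k in range(start, end + 1):
            ranks[order[k]] = shared
        start = end + 1
    return ranks


def pearson(xs, ys):
    """Pearson correlation of two equal-length sequences."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / (var_x * var_y) ** 0.5


def spearman(metric_scores, human_scores):
    """Spearman rank correlation: Pearson correlation computed on the ranks."""
    if len(metric_scores) != len(human_scores):
        raise ValueError("score lists must have the same length")
    return pearson(average_ranks(metric_scores), average_ranks(human_scores))


# Placeholder scores for five generated images; NOT results from the paper.
human = [8.0, 6.5, 9.0, 4.0, 7.0]
metric_a = [0.31, 0.29, 0.33, 0.22, 0.30]  # orders the images as the humans do
metric_b = [0.30, 0.33, 0.22, 0.31, 0.29]  # orders them very differently

print("metric_a vs human:", spearman(metric_a, human))
print("metric_b vs human:", spearman(metric_b, human))
```

A value near 1 means the metric ranks images in the same order as human raters, while a value near 0 or below means it does not. The same comparison could be run with scores produced by a GPT-4o-based judge in place of `metric_a`.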

Implications and Future Directions

The research highlights the growing versatility of T2I models and their potential as foundational AI tools across various domains. Despite their impressive achievements, the paper identifies several challenges, particularly in structured output generation and adherence to physical reality. From a theoretical standpoint, this paper underscores the need for improved evaluation frameworks to fully capture the models’ multifaceted capabilities. Practically, the insights gathered here could guide further development, enhancing models’ robustness and applicability in real-world scenarios.

The advancement of T2I models suggests exciting possibilities for future AI applications, from more sophisticated human-computer interaction to uses in the creative industries. However, the path towards truly general-purpose models remains complex, necessitating continued exploration in both model architectures and evaluation methodologies.