Exploring and Controlling Diversity in LLM-Agent Conversation

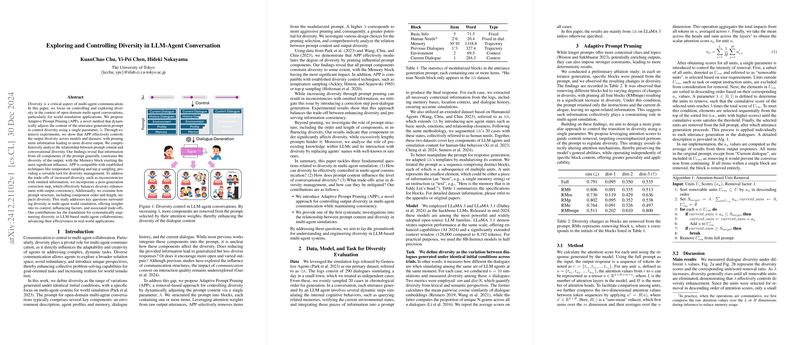

The paper "Exploring and Controlling Diversity in LLM-Agent Conversation" by KuanChao Chu and collaborators addresses how to explore and control the diversity of generated dialogue in open-domain multi-agent conversations. Diversity matters because it shapes the adaptability and creativity of multi-agent systems, both of which are needed for complex, dynamic tasks. The stated goal is to make agents in world-simulation settings more realistic and better at problem solving.

The authors introduce Adaptive Prompt Pruning (APP), a method that controls conversational diversity by manipulating the utterance generation prompt through a single parameter, λ. APP is dynamic: it adjusts the prompt content based on attention scores derived from the model's output. A higher λ means more aggressive removal of prompt components, which leads to greater diversity in the generated responses. The paper's premise is that diversity can be managed by using attention weights to remove redundant or overly constraining elements from the prompt.
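The pruning idea can be illustrated with a minimal sketch. This is not the authors' implementation: the selection rule (drop the highest-attention blocks until a fraction `lam` of the total attention mass has been removed), the `PromptBlock` type, and the example block names other than "memory" are all assumptions made for illustration, and the attention scores are taken as given rather than computed from a model.

```python
from dataclasses import dataclass


@dataclass
class PromptBlock:
    name: str         # hypothetical block label, e.g. "memory"
    text: str         # the block's prompt text
    attention: float  # assumed: attention mass attributed to this block


def prune_prompt(blocks, lam):
    """Return the blocks kept after pruning.

    Assumed rule (not taken from the paper): remove blocks in descending
    order of attention until the removed attention mass reaches
    lam * total. lam = 0 keeps everything; lam = 1 removes everything.
    """
    if not 0.0 <= lam <= 1.0:
        raise ValueError("lam must be in [0, 1]")
    total = sum(b.attention for b in blocks)
    budget = lam * total
    removed = set()
    removed_mass = 0.0
    ranked = sorted(range(len(blocks)),
                    key=lambda i: blocks[i].attention, reverse=True)
    for i in ranked:
        if removed_mass >= budget:
            break  # enough attention mass has been pruned
        removed.add(i)
        removed_mass += blocks[i].attention
    # Preserve the original block order in the pruned prompt.
    return [b for i, b in enumerate(blocks) if i not in removed]


if __name__ == "__main__":
    blocks = [
        PromptBlock("memory", "Alice remembers meeting Bob.", 0.5),
        PromptBlock("persona", "Alice is a cheerful baker.", 0.3),
        PromptBlock("environment", "It is morning in the cafe.", 0.2),
    ]
    for lam in (0.0, 0.4, 0.6, 1.0):
        kept = [b.name for b in prune_prompt(blocks, lam)]
        print(lam, kept)
```

Under this assumed rule, the number of removed blocks never decreases as `lam` grows, which mirrors the behavior described above: a larger parameter value prunes more of the prompt.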

Empirical evidence from the paper demonstrates that APP can successfully modulate output diversity across various LLMs and datasets by selectively removing elements that exert different levels of constraint on the output. Notably, the research identifies the Memory block as having the most significant constraining effect on diversity. This finding provides a crucial insight for future research into the design and configuration of prompt structures to optimize diversity in LLM-agent conversations.
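This summary does not state which diversity metric the paper uses. As one common proxy for lexical diversity, the sketch below computes distinct-n, the ratio of unique n-grams to total n-grams across a set of utterances. The whitespace tokenization and the function name are assumptions made for illustration.

```python
def distinct_n(utterances, n=2):
    """Ratio of unique n-grams to total n-grams over all utterances.

    Uses lowercased whitespace tokens (a simplifying assumption).
    Returns 0.0 when no n-gram can be formed.
    """
    unique = set()
    total = 0
    for utterance in utterances:
        tokens = utterance.lower().split()
        for i in range(len(tokens) - n + 1):
            unique.add(tuple(tokens[i:i + n]))
            total += 1
    return len(unique) / total if total else 0.0


if __name__ == "__main__":
    repetitive = ["good morning bob", "good morning bob"]
    varied = ["good morning bob", "lovely weather today alice"]
    print(distinct_n(repetitive), distinct_n(varied))
```

A value near 1 means almost every n-gram is new; a lower value means the utterances repeat one another.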

The paper further examines the compatibility of APP with established generation diversity techniques, such as temperature sampling and top-p sampling, highlighting its versatility as a tool for enriching dialogue diversity. Moreover, the authors address the trade-offs inherent in diversity enhancement, such as the potential for inconsistencies with omitted information, by introducing a post-generation correction step. This correction process effectively mitigates the trade-offs, maintaining output consistency without significantly reducing the achieved diversity.
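For readers unfamiliar with the two decoding techniques named above, here is a minimal, standard-library sketch of temperature scaling followed by top-p (nucleus) truncation over a toy logit vector. It is a generic illustration, not code from the paper; the function names are hypothetical. Because these samplers act on the model's output distribution while prompt pruning acts on the input, the two kinds of control can in principle be combined.

```python
import math
import random


def top_p_distribution(logits, temperature=1.0, top_p=1.0):
    """Apply temperature scaling, then keep the smallest set of tokens
    whose cumulative probability reaches top_p, and renormalize."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    if not 0 < top_p <= 1:
        raise ValueError("top_p must be in (0, 1]")
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    z = sum(exps)
    probs = [e / z for e in exps]
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept, cumulative = [], 0.0
    for i in order:
        kept.append(i)
        cumulative += probs[i]
        if cumulative >= top_p:
            break
    mass = sum(probs[i] for i in kept)
    return {i: probs[i] / mass for i in kept}


def sample_token(logits, temperature, top_p, rng):
    """Draw one token index from the truncated distribution."""
    dist = top_p_distribution(logits, temperature, top_p)
    tokens = list(dist)
    weights = [dist[t] for t in tokens]
    return rng.choices(tokens, weights=weights, k=1)[0]


if __name__ == "__main__":
    rng = random.Random(0)
    logits = [2.0, 1.0, 0.0]
    print(top_p_distribution(logits, temperature=1.0, top_p=0.5))
    print(sample_token(logits, temperature=1.5, top_p=0.9, rng=rng))
```

Raising the temperature flattens the distribution and raising `top_p` keeps more candidate tokens, so both push toward more varied output.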

Beyond the evaluation of the APP method, the paper explores various factors influencing diversity, including the order and length of prompt components, as well as the frequency of entity names. The researchers find that block order significantly affects diversity, with certain configurations resulting in diminished dialogue quality and variation. Excessively verbose prompts are identified as detrimental to diversity, suggesting that brevity and precision in prompt design are desirable attributes.

The implications of this work are twofold. In practical terms, it offers a methodological advancement for enhancing dialogue diversity in multi-agent systems, thereby improving realism and reducing repetition in simulated environments. Theoretically, it lays the groundwork for systematic approaches to engineering diversity in LLM-based collaborations, stimulating further research into optimizing interactive AI agents.

Looking ahead, a deeper understanding of diversity in conversational agents could inform further work. Tailoring diversity through adaptive techniques may improve AI systems in autonomous decision-making, human-agent collaboration, and complex problem-solving. The paper's insights into prompt structure could also inspire applications in human-computer interaction and digital assistants.

To summarize, this paper presents a rigorous exploration of diversity control in LLM-based multi-agent systems, providing actionable methodologies and fostering a comprehensive understanding of the interplay between prompt structure and dialogue diversity.