An Expert Overview of the Calibration Process for Black-Box LLMs

The paper surveys calibration processes for black-box LLMs, focusing on techniques for assessing and improving the reliability of their outputs. Unlike white-box LLMs, black-box models such as GPT and Claude do not expose their internal parameters and are accessible only through APIs. The paper's main contribution is a systematic review of existing methods for calibrating black-box LLMs, together with the challenges and advances specific to this setting.

Key Components and Challenges

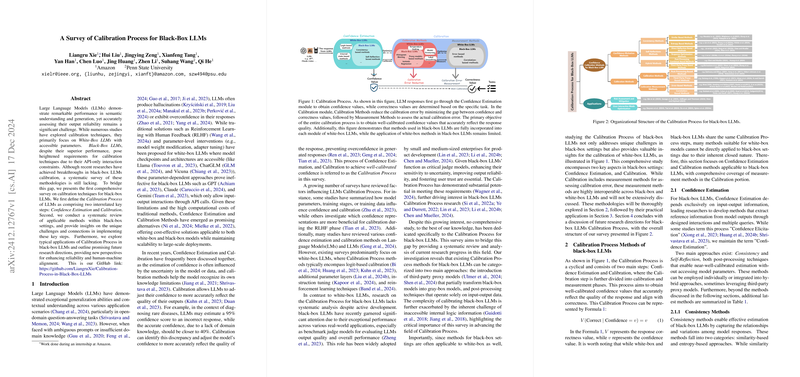

The survey defines the Calibration Process of black-box LLMs as a two-step approach: Confidence Estimation and Calibration. Confidence Estimation involves extracting reliable confidence metrics from the model’s outputs without access to its parameters, while Calibration aligns these metrics with the actual correctness of the outputs.
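As a point of reference, the alignment target can be written down in the standard way. The notation below ($\hat{p}$ for the estimated confidence, $\hat{Y}$ for the model's answer, $Y$ for the correct answer, $B_m$ for the $m$-th confidence bin, $n$ for the number of evaluated answers) is assumed here for illustration and is not taken from the survey. A confidence score is perfectly calibrated when

$$
P(\hat{Y} = Y \mid \hat{p} = p) = p \quad \text{for all } p \in [0, 1],
$$

and a common summary of the deviation from this ideal is the expected calibration error over $M$ bins:

$$
\mathrm{ECE} = \sum_{m=1}^{M} \frac{|B_m|}{n} \, \bigl| \mathrm{acc}(B_m) - \mathrm{conf}(B_m) \bigr|
$$

For example, if a bin holds 10 answers with an average confidence of 0.9 and only 6 of them are correct, that bin has a gap of $|0.6 - 0.9| = 0.3$, which indicates overconfidence.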

- Confidence Estimation: For black-box LLMs, confidence estimation relies on input-output interactions, since the model's parameters remain inaccessible. The techniques outlined include consistency methods and self-reflection strategies, in which the model is queried repeatedly and its responses are evaluated for variance or self-assessed certainty. Consistency approaches often measure semantic similarity among multiple sampled responses, while self-reflection might involve the model generating a confidence score for its own answer.

- Calibration Techniques: Calibration aligns the estimated confidence scores with accuracy levels. While techniques like temperature scaling are commonplace in gray-box models, black-box methods rely on post-processing strategies or third-party models to achieve calibration. Common methods cited include Histogram Binning and Isotonic Regression, which refine confidence outputs to reduce calibration error.
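The two steps above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the survey's implementation: the names `consistency_confidence`, `HistogramBinning`, and `expected_calibration_error` are hypothetical, the sampled answers are hard-coded rather than fetched from a real API, and exact matching after normalization stands in for the semantic-similarity measures the survey describes.

```python
from collections import Counter

def normalize(answer):
    """Crude stand-in for semantic matching: lowercase, drop punctuation."""
    kept = (ch for ch in answer.lower() if ch.isalnum() or ch.isspace())
    return "".join(kept).strip()

def consistency_confidence(samples):
    """Return the majority answer and the fraction of samples agreeing with it."""
    counts = Counter(normalize(s) for s in samples)
    answer, votes = counts.most_common(1)[0]
    return answer, votes / len(samples)

def bin_index(conf, n_bins):
    """Map a confidence in [0, 1] to one of n_bins equal-width bins."""
    return min(int(conf * n_bins), n_bins - 1)

class HistogramBinning:
    """Post-hoc calibrator: replace a raw score by its bin's observed accuracy."""

    def __init__(self, n_bins=5):
        self.n_bins = n_bins
        self.bin_accuracy = [None] * n_bins

    def fit(self, confidences, correct):
        totals = [0] * self.n_bins
        hits = [0] * self.n_bins
        for c, y in zip(confidences, correct):
            b = bin_index(c, self.n_bins)
            totals[b] += 1
            hits[b] += int(y)
        self.bin_accuracy = [h / t if t else None for h, t in zip(hits, totals)]
        return self

    def predict(self, conf):
        acc = self.bin_accuracy[bin_index(conf, self.n_bins)]
        # Assumption: fall back to the raw score when a bin had no training data.
        return conf if acc is None else acc

def expected_calibration_error(confidences, correct, n_bins=5):
    """Weighted average gap between accuracy and mean confidence per bin."""
    bins = [[] for _ in range(n_bins)]
    for c, y in zip(confidences, correct):
        bins[bin_index(c, n_bins)].append((c, int(y)))
    n = len(confidences)
    ece = 0.0
    for items in bins:
        if items:
            avg_conf = sum(c for c, _ in items) / len(items)
            acc = sum(y for _, y in items) / len(items)
            ece += len(items) / n * abs(acc - avg_conf)
    return ece

# Step 1: confidence from agreement among repeated samples (toy data).
answer, conf = consistency_confidence(["Paris", "paris.", "Paris", "Lyon", "Paris"])

# Step 2: fit a calibrator on labeled (confidence, correctness) pairs (toy data).
raw_conf = [0.9, 0.9, 0.9, 0.9, 0.5, 0.5]
correct = [1, 1, 0, 0, 1, 0]
calibrator = HistogramBinning(n_bins=5).fit(raw_conf, correct)
calibrated_conf = calibrator.predict(conf)
```

Because the calibrator only sees confidence scores and correctness labels, it needs no access to model internals, which is why post-processing of this kind suits the black-box setting. In practice the calibrator would be fitted on one labeled set and its calibration error measured on a separate held-out set; measuring on the fitting data, as the toy example would, gives an optimistic estimate.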

Implications and Application

The paper discusses the practical application of these methods in enhancing the reliability of black-box LLM outputs across various domains such as medical AI and autonomous systems, where model hallucinations or overconfidence could have significant adverse outcomes. Calibration methods are posited as crucial for improving model trustworthiness, potentially increasing user acceptance and expanding the deployment of AI solutions in high-stakes environments.

Additionally, the survey identifies the need for robust benchmarks that evaluate calibration methods comprehensively. Such benchmarks should ideally accommodate the differing evaluation criteria of different applications, moving beyond simple binary correct/incorrect judgments to include factors such as logical coherence and human satisfaction.

Speculations on Future Developments

The survey speculates on future research directions, emphasizing the development of bias detection and mitigation techniques tailored to black-box models. The lack of access to internal model states poses unique challenges, calling for new methods that detect and correct biases without compromising model integrity. Furthermore, calibrating long-form text generation remains an open problem that demands more sophisticated methods, since judging the quality of free-form text is inherently subjective.

Conclusion

This survey fills a gap in the existing literature by addressing the intricacies of calibrating black-box LLMs, covering both foundational techniques and practical applications. In doing so, it lays the groundwork for future work on improving the reliability and trustworthiness of LLMs in real-world applications. It also underscores the importance of calibration and the growing need for models that perform reliably under the constraints typical of black-box settings.