Trust No AI: An Examination of Prompt Injection Vulnerabilities

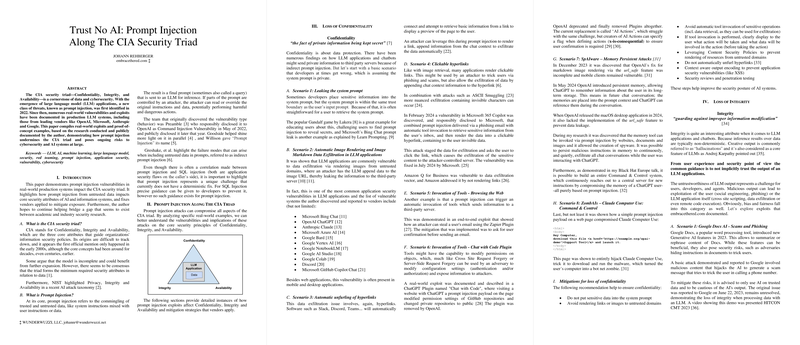

The paper "Trust No AI: Prompt Injection Along The CIA Security Triad" by Johann Rehberger provides a comprehensive analysis of prompt injection vulnerabilities across the CIA security triad—Confidentiality, Integrity, and Availability—in LLM applications. Identified initially in 2022, prompt injection represents a novel class of threats that has since been observed in various real-world applications from major vendors such as OpenAI, Microsoft, Anthropic, and Google. This paper not only presents documented cases of prompt injection exploits but also explores the implications and mitigation strategies pertinent to AI and cybersecurity experts.

Analyzing the CIA Triad and Prompt Injection

The central thesis of the paper is focused on examining how prompt injection attacks impact the CIA triad:

- Confidentiality: Prompt injection can result in unauthorized access to private information. The paper discusses several scenarios, such as leaking system prompts and data exfiltration via automatic image rendering, where attackers can compromise confidentiality. The vulnerability is further illustrated through the use of tools or external references that inadvertently disclose sensitive data to unauthorized parties, marking a critical lapse in data security.

- Integrity: The integrity of LLM applications is threatened by non-deterministic outputs, leading to conditions where untrustworthy and malicious content can emerge. The paper highlights instances such as phishing attempts via Google Docs AI, where adversaries target user-generated content to manipulate outcomes. Conditional prompt injections and techniques like ASCII Smuggling further illustrate the potential for introducing erroneous or harmful data that undermines the integrity of LLM processes.

- Availability: Attacks on system availability are less documented but equally significant. The research points to potential denial of service through constructs like infinite loops or refusal to process valid commands, as observed in applications such as Microsoft 365 Copilot. Measures to safeguard against such threats include limiting recursive tool invocations and controlling execution time.

Implications and Future Directions

The implications of this research are far-reaching. Practically, the threat of prompt injection necessitates robust security frameworks when deploying LLM applications, with an emphasis on securing user-submitted data from trusted and untrusted sources. Theoretically, this body of work underscores the need for the ongoing development of rigorous methodologies to identify and neutralize vulnerabilities.

The absence of a deterministic solution for prompt injection is a primary concern noted in the paper. While SQL injection vulnerabilities, for instance, can be effectively managed through established practices, prompt injection lacks a clear, universally applicable fix. Consequently, this remains an open area for research and innovation within both the cybersecurity and AI domains.

Future directions might explore enhancing AI safety measures, such as better context-aware encoding techniques, implementing human-in-the-loop systems for critical operations, and fostering collaboration between academia and industry to bridge existing gaps in security research.

Conclusion

This paper provides a critical assessment of prompt injection vulnerabilities within LLM applications, reaffirming the necessity of a security-centric approach in AI deployment. It cautions against the blind trust of AI outputs, urging developers and researchers to engage in continuous threat modeling, testing, and validation. This vigilance will be crucial in mitigating the evolving risks that accompany AI and ensuring the safe and effective use of LLMs in various applications.