An Essay on "Evaluating and Aligning CodeLLMs on Human Preference"

The paper "Evaluating and Aligning CodeLLMs on Human Preference" addresses a notable gap in the evaluation of code LLMs (codeLLMs): alignment with human preference in code generation tasks, which traditional benchmarks focused on code correctness largely overlook. The authors propose a human-curated benchmark, CodeArena, and introduce a synthetic instruction corpus, SynCode-Instruct, to measure and improve the alignment between model-generated responses and human preferences.

Key Contributions

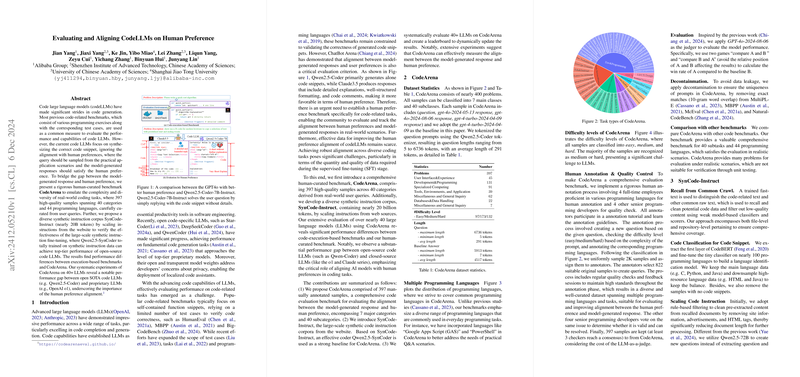

- CodeArena Benchmark: Recognizing the deficiency in existing benchmarks, which largely ignore human preferences, the authors developed CodeArena, a benchmark encompassing 397 curated samples from real-world scenarios, spanning 40 categories and 44 programming languages. This benchmark offers a comprehensive testbed for evaluating how closely codeLLMs align with human expectations in terms of response quality, detail, and usefulness.

- SynCode-Instruct Initiative: To expose codeLLMs to a wider array of scenarios, the authors generated SynCode-Instruct, a large-scale synthetic instruction dataset comprising nearly 20 billion tokens. The corpus is built by synthesizing user-aligned responses from large-scale web data, with the aim of improving model performance.

- Performance Evaluation Across LLMs: Systematic experiments on over 40 state-of-the-art (SOTA) LLMs reveal a significant performance gap between open-source models and their proprietary counterparts, which underscores the role of human preference alignment in closing it. The paper also reports stark contrasts between results on execution-based benchmarks and results on CodeArena, providing insight into the nuanced requirements of human-centric evaluation.
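
This essay does not spell out how preference judgments are turned into scores. As a minimal sketch of one common approach, assume each model response is compared against a baseline response and a judge labels the outcome as a win, tie, or loss for the model. The label names and the `preference_rates` function below are hypothetical and are not taken from the paper.

```python
from collections import Counter

# Hypothetical label set for a pairwise comparison against a baseline.
LABELS = ("win", "tie", "loss")


def preference_rates(judgments):
    """Return the fraction of wins, ties, and losses in a list of labels.

    `judgments` holds one label per benchmark sample, describing how the
    evaluated model's response fared against the baseline response.
    """
    counts = Counter(judgments)
    unknown = set(counts) - set(LABELS)
    if unknown:
        raise ValueError(f"unexpected labels: {sorted(unknown)}")
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no judgments provided")
    return {label: counts[label] / total for label in LABELS}


if __name__ == "__main__":
    # Four illustrative judgments, not real benchmark data.
    print(preference_rates(["win", "win", "tie", "loss"]))
```

Reporting ties separately from wins keeps a model that frequently matches the baseline distinguishable from one that frequently loses to it.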

Results and Implications

The findings reveal that current open-source codeLLMs, such as Qwen2.5-Coder, lag behind proprietary models like GPT-4o and Claude in aligning with human preferences, despite comparable performance on traditional execution-based benchmarks like HumanEval and MBPP. The proprietary models are better at producing comprehensive, user-preferred responses that include explanations and context, rather than code that is merely correct.
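
For contrast, execution-based benchmarks such as HumanEval score a model only on whether its generated code passes unit tests, commonly summarized as pass@k. The sketch below implements the standard unbiased estimator, pass@k = 1 - C(n - c, k) / C(n, k), where n samples are drawn per problem and c of them pass. The function name and argument checks are illustrative choices, not taken from the paper discussed here.

```python
from math import comb


def pass_at_k(n, c, k):
    """Estimate the probability that at least one of k samples passes.

    n: number of samples generated for a problem
    c: number of those samples that pass all unit tests
    k: sample budget being evaluated (1 <= k <= n)
    """
    if not 0 <= c <= n:
        raise ValueError("c must satisfy 0 <= c <= n")
    if not 1 <= k <= n:
        raise ValueError("k must satisfy 1 <= k <= n")
    # If fewer than k samples fail, every size-k subset contains a pass.
    if n - c < k:
        return 1.0
    return 1.0 - comb(n - c, k) / comb(n, k)


if __name__ == "__main__":
    # Illustrative numbers only: 10 samples, 3 of which pass.
    print(pass_at_k(n=10, c=3, k=1))
```

For k = 1 the estimator reduces to c / n. Nothing in this metric looks at explanations, formatting, or context, which is why two models can score similarly here yet differ on a preference-based benchmark.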

The implications of this research are both practical and theoretical. On a practical level, aligning models with human preferences could improve productivity in software engineering, facilitating the creation of more intuitive and efficient code systems. Theoretically, it highlights the complex intersection between functional and experiential quality in AI: producing error-free code is not, on its own, sufficient for user satisfaction or practical utility.

Future Directions

The paper opens avenues for future research in refining reward models for RLHF (Reinforcement Learning from Human Feedback) in code generation, and devising new benchmarks and evaluation paradigms that prioritize user engagement metrics. Additionally, the scaling of synthetic instruction corpora presents a promising direction for developing LLMs that cater to a diverse array of programming languages and coding scenarios.

The insights from this research underscore the critical role of human-centric benchmarks in the development of codeLLMs, aiming not only for technical correctness but also for experiential alignment with end-user expectations. As the field of AI continues to evolve, the alignment of model outputs with human preferences is expected to become an increasingly integral component of comprehensive model evaluation and deployment.