A Comprehensive Survey on LLM-Based Social Agents in Game-Theoretic Scenarios

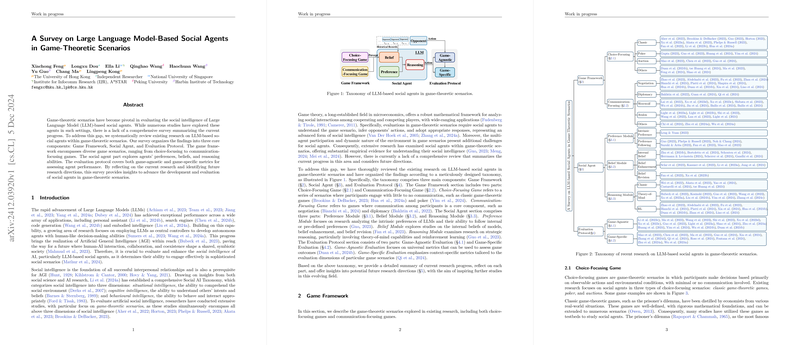

This paper presents a comprehensive survey of research focused on LLM-based social agents within the context of game-theoretic scenarios. The authors systematically categorize existing work into three principal components: Game Framework, Social Agent, and Evaluation Protocol. This structure provides a coherent overview of the field and highlights the diverse applications and challenges faced by LLM-based agents in strategic environments.

Game Framework

The Game Framework is divided into two primary categories: Choice-Focusing Games and Communication-Focusing Games. Choice-Focusing Games, such as classic game-theoretic games (e.g., the Prisoner's Dilemma), poker, and auctions, involve little or no communication, so agents must decide based on observable actions and environmental factors. In contrast, Communication-Focusing Games, including negotiation, Diplomacy, and social deduction games like Werewolf, center on scenarios where interaction and communication are pivotal; they reveal strategic capabilities of LLMs that go beyond purely rational decision-making.
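Choice-Focusing Games are usually specified by a payoff table, so an agent's choices can be checked against a game-theoretic reference. As a minimal sketch, the code below finds the pure-strategy Nash equilibria of a two-player matrix game. The payoff values are the textbook Prisoner's Dilemma numbers, assumed here for illustration rather than taken from the survey, and the names `PAYOFFS` and `pure_nash_equilibria` are hypothetical.

```python
from itertools import product

# Illustrative (assumed) one-shot Prisoner's Dilemma payoffs.
# Keys are (row_action, col_action); values are (row_payoff, col_payoff).
ACTIONS = ("C", "D")  # C = cooperate, D = defect
PAYOFFS = {
    ("C", "C"): (3, 3),
    ("C", "D"): (0, 5),
    ("D", "C"): (5, 0),
    ("D", "D"): (1, 1),
}


def pure_nash_equilibria(payoffs, actions):
    """Return every action profile where neither player gains by deviating alone."""
    equilibria = []
    for row, col in product(actions, actions):
        row_pay, col_pay = payoffs[(row, col)]
        # Row player cannot do better by switching while the column player stays put.
        row_best = all(payoffs[(alt, col)][0] <= row_pay for alt in actions)
        # Column player cannot do better by switching while the row player stays put.
        col_best = all(payoffs[(row, alt)][1] <= col_pay for alt in actions)
        if row_best and col_best:
            equilibria.append((row, col))
    return equilibria


print(pure_nash_equilibria(PAYOFFS, ACTIONS))  # → [('D', 'D')]
```

An LLM agent that cooperates in this one-shot game is deviating from the equilibrium prediction, which is exactly the kind of gap between rational and observed play that evaluations in these games probe.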

The authors identify several key insights across these categories, such as the need for prompt robustness testing and the open question of whether social agents exhibit strategic behavior expressed through "action language" (signaling through moves such as bets and bids rather than words) in low-communication games like poker and auctions. These observations underscore current gaps and point future research toward more stable and sophisticated LLM-driven agents.
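Prompt robustness can be quantified in many ways; one simple option, assumed here rather than prescribed by the survey, is to pose the same decision under several paraphrased prompts and measure how often the agent gives its most common answer. In this sketch, `agent` is a hypothetical callable standing in for an LLM call, and `toy_agent` is a deliberately brittle stand-in used only to exercise the metric.

```python
from collections import Counter
from typing import Callable, Sequence


def prompt_consistency(agent: Callable[[str], str], paraphrases: Sequence[str]) -> float:
    """Fraction of paraphrased prompts on which the agent picks its most common action.

    `agent` is a hypothetical stand-in for an LLM call that returns an action label.
    A score of 1.0 means the choice did not change with the wording of the prompt.
    """
    if not paraphrases:
        raise ValueError("need at least one prompt")
    choices = [agent(p) for p in paraphrases]
    _, modal_count = Counter(choices).most_common(1)[0]
    return modal_count / len(choices)


# Toy agent whose answer flips whenever the prompt mentions "betray".
def toy_agent(prompt: str) -> str:
    return "D" if "betray" in prompt else "C"


prompts = [
    "Do you cooperate or defect?",
    "Will you stay silent or betray your partner?",
    "Choose C to cooperate or D to defect.",
    "Pick an action: cooperate or defect.",
]
print(prompt_consistency(toy_agent, prompts))  # → 0.75
```

A real test would typically also vary option order and formatting, since a score below 1.0 signals that the measured "strategy" partly reflects the prompt rather than the agent.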

Social Agent

The paper examines the core components of LLM-based social agents: the Preference, Belief, and Reasoning Modules. For the Preference Module, it investigates whether agents inherently possess human-like preferences and whether they can adhere to predefined preferences through role adaptation, that is, the extent to which LLMs can simulate strategic preferences akin to those observed in human decision-making.
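One way to operationalize "adhering to a predefined preference" is to assign the agent a role defined by a parametric utility and compare its choices with the choice that utility predicts. The sketch below uses the Fehr-Schmidt inequity-aversion model in a dictator game; this is a standard behavioral-economics model swapped in for illustration, not a method the survey is claimed to prescribe, and the function names are hypothetical.

```python
def fehr_schmidt_utility(own: float, other: float, alpha: float, beta: float) -> float:
    """Two-player Fehr-Schmidt utility: own payoff minus envy (alpha) and guilt (beta) penalties."""
    envy = max(other - own, 0.0)   # disadvantageous inequality
    guilt = max(own - other, 0.0)  # advantageous inequality
    return own - alpha * envy - beta * guilt


def best_dictator_split(endowment: int, alpha: float, beta: float) -> int:
    """Amount a dictator with these preference parameters keeps (integer splits only)."""
    return max(
        range(endowment + 1),
        key=lambda keep: fehr_schmidt_utility(keep, endowment - keep, alpha, beta),
    )


# A purely selfish role (alpha = beta = 0) keeps everything;
# a strongly guilt-averse role (beta > 0.5) splits evenly.
print(best_dictator_split(10, alpha=0.0, beta=0.0))  # → 10
print(best_dictator_split(10, alpha=0.9, beta=0.6))  # → 5
```

If an agent prompted to play the guilt-averse role still keeps most of the endowment, its behavior does not match the assigned preference, which is one concrete reading of a role-adaptation failure.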

The Belief Module concerns the extent to which LLM agents hold and revise beliefs. The survey examines both agents' internal representations of beliefs and how those beliefs manifest in external behavior, covering belief-enhancement strategies and agents' ability to update beliefs when new information arrives.
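A common normative reference for "revising beliefs upon new information" is Bayes' rule: an agent's stated beliefs can be compared against the posterior an ideal observer would hold. The sketch below assumes a Werewolf-style setting with made-up priors and likelihoods; the numbers and the function name `bayes_update` are hypothetical.

```python
from typing import Dict


def bayes_update(prior: Dict[str, float], likelihood: Dict[str, float]) -> Dict[str, float]:
    """Posterior over hypotheses, given P(observation | hypothesis) for each one."""
    unnormalized = {h: prior[h] * likelihood[h] for h in prior}
    total = sum(unnormalized.values())
    if total == 0:
        raise ValueError("observation has zero probability under every hypothesis")
    return {h: p / total for h, p in unnormalized.items()}


# Hypothetical example: is player 3 a werewolf or a villager?
prior = {"werewolf": 0.25, "villager": 0.75}
# Assumed probabilities of seeing player 3 accuse the seer under each role.
accuses_seer = {"werewolf": 0.8, "villager": 0.2}
posterior = bayes_update(prior, accuses_seer)
print({h: round(p, 2) for h, p in posterior.items()})  # → {'werewolf': 0.57, 'villager': 0.43}
```

An agent whose stated suspicion of player 3 barely moves after such an observation, or moves far past 0.57, is under- or over-updating relative to this reference.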

The Reasoning Module covers strategic reasoning methods built on Theory of Mind (ToM) and reinforcement learning (RL). ToM-based approaches let agents infer other players' mental states, which improves strategic interaction in games with incomplete information. RL helps agents explore dynamic environments, supporting long-term strategic planning and decision-making in complex scenarios.
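Recursive "I think that you think" reasoning of the kind ToM methods target is often illustrated with level-k models. The sketch below uses the classic p-beauty contest (everyone guesses a number, and the winner is closest to p times the average) as an assumed textbook example; it is not the survey's own method, and `level_k_guess` is a hypothetical name.

```python
def level_k_guess(k: int, p: float = 2 / 3, level0_guess: float = 50.0) -> float:
    """Guess of a level-k player in the p-beauty contest.

    Level 0 guesses naively. A level-k player assumes everyone else is
    level-(k-1) and, ignoring its own effect on the average, best-responds
    by guessing p times their guess.
    """
    guess = level0_guess
    for _ in range(k):
        guess *= p
    return guess


for k in range(4):
    print(k, round(level_k_guess(k), 2))
# prints: 0 50.0 / 1 33.33 / 2 22.22 / 3 14.81 (one pair per line)
```

Reading off which level an LLM agent's guesses correspond to gives a rough measure of its depth of opponent modeling; the equilibrium guess of 0 is the limit as k grows.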

Evaluation Protocol

The paper then turns to methods for evaluating LLM-based social agents, dividing them into Game-Agnostic and Game-Specific Evaluations. The former uses universal outcome metrics, such as win rate, that apply across games; the latter uses metrics tailored to a particular game's dynamics, such as behavioral analysis and measures of cooperation interpreted in the game's context.

The authors propose a comprehensive evaluation framework built on hierarchical, abstract, and quantifiable metrics. Such a framework would analyze game results beyond win/loss to capture agents' efficiency, strategic decisions, and adaptability across varied scenarios.
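One possible reading of a "hierarchical, quantifiable" metric scheme is a tree in which leaves hold measured scores and inner nodes combine their children. The sketch below assumes weighted averaging and scores in [0, 1]; the class `MetricNode` and every metric name in the example are hypothetical, not taken from the survey.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class MetricNode:
    """A node in a hypothetical metric hierarchy: a leaf holds a score, an inner node aggregates."""
    name: str
    weight: float = 1.0
    score: Optional[float] = None  # set only on leaves; assumed to lie in [0, 1]
    children: List["MetricNode"] = field(default_factory=list)

    def value(self) -> float:
        """Leaf: its score. Inner node: weight-normalized average of its children's values."""
        if not self.children:
            if self.score is None:
                raise ValueError(f"leaf {self.name!r} has no score")
            return self.score
        total_weight = sum(c.weight for c in self.children)
        return sum(c.weight * c.value() for c in self.children) / total_weight


overall = MetricNode("overall", children=[
    MetricNode("outcome", children=[MetricNode("win_rate", score=0.6)]),
    MetricNode("strategy", children=[
        MetricNode("equilibrium_consistency", score=0.8),
        MetricNode("belief_accuracy", score=0.4),
    ]),
    MetricNode("adaptability", weight=0.5, score=0.9),
])
print(round(overall.value(), 3))  # → 0.66
```

Keeping the tree rather than only the final number lets an analysis drill down from the overall score to the specific sub-skill that drove it.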

Implications and Future Directions

The survey identifies several promising directions for future work, including creating standardized benchmarks to mitigate LLM data contamination risks and synthesizing benchmark data to provide more rigorous evaluations. Integrating RL techniques with LLMs could significantly improve agents' capabilities in handling multi-round and complex scenarios, pointing towards a more realistic and robust agent architecture. Furthermore, mining emergent behavioral patterns across diverse cultural and linguistic scenarios can offer richer insights into agents' strategic reasoning and adaptability.
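Synthesizing benchmark data is one way to reduce contamination risk: freshly generated games are unlikely to appear verbatim in an LLM's training data, and their reference answers can be computed exactly. The sketch below assumes random 2x2 integer-payoff games scored against their pure-strategy Nash equilibria; the generator, payoff range, and all names are hypothetical choices for illustration.

```python
import random
from itertools import product
from typing import Dict, List, Tuple

Payoffs = Dict[Tuple[int, int], Tuple[int, int]]


def random_matrix_game(rng: random.Random, low: int = 0, high: int = 9) -> Payoffs:
    """Draw a fresh 2x2 game: each cell gets (row_payoff, col_payoff) integers in [low, high]."""
    return {
        (r, c): (rng.randint(low, high), rng.randint(low, high))
        for r, c in product(range(2), range(2))
    }


def pure_nash(payoffs: Payoffs) -> List[Tuple[int, int]]:
    """Pure-strategy Nash equilibria, used as the reference answer for a synthetic game."""
    eq = []
    for r, c in product(range(2), range(2)):
        row_ok = all(payoffs[(a, c)][0] <= payoffs[(r, c)][0] for a in range(2))
        col_ok = all(payoffs[(r, a)][1] <= payoffs[(r, c)][1] for a in range(2))
        if row_ok and col_ok:
            eq.append((r, c))
    return eq


rng = random.Random(0)  # fixed seed so the benchmark can be regenerated exactly
benchmark = []
for _ in range(5):
    game = random_matrix_game(rng)
    benchmark.append({"payoffs": game, "reference_equilibria": pure_nash(game)})
```

Changing the seed yields a new, equally valid test set, so a benchmark built this way can be refreshed whenever contamination is suspected.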

In conclusion, this survey maps the current landscape of LLM-based social agents in game-theoretic contexts and sets the stage for more comprehensive, adaptable, and human-aligned AI systems. It stresses the importance of testing these systems across a wide range of environments and the need for further advances toward truly intelligent social agents.