Analyzing the NYT-Connections Benchmark: Evaluating LLM Reasoning Capabilities

The paper presents the NYT-Connections benchmark, a novel evaluation framework for assessing deliberate reasoning in large language models (LLMs). Building on the New York Times Connections game, the authors introduce a task that emphasizes "System 2" cognitive processes over the instinctual "System 1" processes that LLMs typically rely on. This essay outlines the methodology, the findings, and the broader implications of the work for AI and LLM development.

Benchmark Design

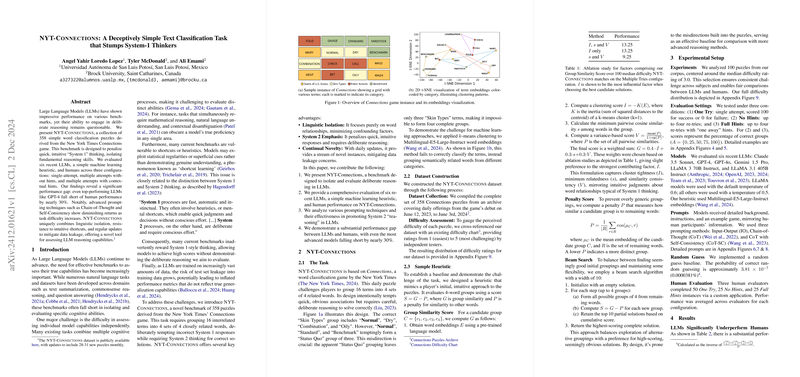

The NYT-Connections benchmark consists of 358 word-classification puzzles, each requiring the solver to sort 16 interrelated terms into four groups of four closely related words. The design is meant to discourage the intuitive shortcuts characteristic of System 1 thinking and to demand more deliberate, System 2 reasoning. The task enforces linguistic isolation and is updated regularly to minimize the risk of data leakage, which makes it a more robust setting for evaluating an LLM's genuine reasoning capabilities.
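
To make the task format concrete, the following is a minimal Python sketch of one puzzle and a scoring check. The `Puzzle` class, the `count_correct_groups` helper, and the sample words are illustrative assumptions made for this essay; they are not taken from the paper or from the benchmark's data.

```python
from dataclasses import dataclass


@dataclass
class Puzzle:
    """One puzzle: four category labels, each mapped to its four words (assumed schema)."""
    groups: dict[str, frozenset[str]]

    def words(self) -> frozenset[str]:
        # All 16 words the solver sees; the category labels stay hidden.
        return frozenset().union(*self.groups.values())


def count_correct_groups(puzzle: Puzzle, proposed: list[set[str]]) -> int:
    """Count distinct proposed groups that exactly match a true group."""
    truth = set(puzzle.groups.values())
    return len({frozenset(group) for group in proposed} & truth)


# Made-up example with deliberate distractors: SOLE fits both "fish" and
# "shoe parts", and PIKE fits both "fish" and "weapons".
EXAMPLE = Puzzle(groups={
    "fish": frozenset({"BASS", "PIKE", "SOLE", "PERCH"}),
    "instruments": frozenset({"DRUM", "HARP", "FLUTE", "ORGAN"}),
    "shoe parts": frozenset({"HEEL", "TONGUE", "LACE", "EYELET"}),
    "weapons": frozenset({"SPEAR", "SWORD", "LANCE", "MACE"}),
})
```

A grouping counts as solved here only when all four proposed groups match exactly. Overlapping candidates such as SOLE are what make a purely associative guess unreliable.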

Experimental Evaluation

The researchers evaluated six state-of-the-art LLMs, including GPT-4 and Claude 3.5, alongside a simple machine learning heuristic and human participants. All were tested under three conditions: a single attempt (One Try), multiple attempts without hints (No Hints), and multiple attempts with contextual hints (Full Hints). The authors also applied prompting techniques, including Chain-of-Thought (CoT) and Self-Consistency, to test whether these elicit System 2-style reasoning from the models.
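
As an illustration of Self-Consistency, the general idea is to sample several answers from the model and keep the one that occurs most often. The sketch below assumes a hypothetical `sample_fn` callable standing in for one model call that returns a proposed grouping; it shows the voting step only and is not the paper's actual implementation.

```python
from collections import Counter
from typing import Callable, Iterable

Grouping = frozenset  # a frozenset of frozensets of words


def canonicalize(groups: Iterable[Iterable[str]]) -> Grouping:
    """Normalize a grouping so word order, group order, and case do not matter."""
    return frozenset(frozenset(w.strip().upper() for w in g) for g in groups)


def self_consistent_answer(
    sample_fn: Callable[[], Iterable[Iterable[str]]],  # hypothetical model call
    n_samples: int = 5,
) -> Grouping:
    """Sample n_samples groupings and return the most frequent one."""
    votes = Counter(canonicalize(sample_fn()) for _ in range(n_samples))
    answer, _count = votes.most_common(1)[0]
    return answer
```

Canonicalizing before counting matters because two samples that list the same groups in a different order should count as the same vote.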

Findings and Observations

A key result of the paper is the performance gap between LLMs and human participants. Even the highest-performing model, Claude 3.5, reached at most 40.25% accuracy in the Full Hints setting, against 60.67% for humans, a gap of roughly 20 percentage points. This gap underlines the current limitations of LLMs on deliberate reasoning tasks.

While hints notably improved human performance, LLMs made poor use of the same information and sometimes scored lower when hints were provided. This contrast points to a fundamental difference in how humans and LLMs process auxiliary context during problem-solving.

Moreover, the baseline heuristic, which was designed to emulate intuitive System 1 thinking, achieved results comparable to those of several LLMs. This suggests that current models may still rely largely on pattern recognition rather than on deliberate reasoning, and it raises the question of how far contemporary LLM architectures emulate cognitive processes beyond pattern recognition.
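
This essay does not describe how the paper's baseline is constructed, so the following is only a hypothetical stand-in for what a System 1-style heuristic might look like: it greedily groups words by surface similarity without any look-ahead. The `greedy_groups` function and the use of cosine similarity over word vectors are assumptions made for illustration.

```python
import math


def cosine(u: tuple[float, ...], v: tuple[float, ...]) -> float:
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def greedy_groups(
    vectors: dict[str, tuple[float, ...]], group_size: int = 4
) -> list[set[str]]:
    """Repeatedly take a seed word and its nearest remaining neighbours.

    Assumes the number of words is a multiple of group_size.
    """
    remaining = sorted(vectors)  # alphabetical order keeps the result deterministic
    groups = []
    while remaining:
        seed, rest = remaining[0], remaining[1:]
        nearest = sorted(
            rest, key=lambda w: cosine(vectors[seed], vectors[w]), reverse=True
        )[: group_size - 1]
        group = {seed, *nearest}
        groups.append(group)
        remaining = [w for w in remaining if w not in group]
    return groups
```

A procedure of this kind never revisits an early choice, so a word that plausibly fits two categories can be claimed by the wrong group and spoil the rest of the solution. That is the sort of shortcut the benchmark is designed to penalize.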

Implications and Future Directions

The results underscore crucial challenges in the development of LLMs capable of System 2 reasoning, especially when faced with tasks that resist heuristic shortcuts. The NYT-Connections benchmark provides a valuable tool for exploring and understanding these challenges more deeply. Further research should focus on refining prompting techniques and exploring alternative approaches capable of enhancing the reasoning abilities of LLMs.

Moreover, extending the evaluation framework to larger and more diverse datasets, or to culturally diverse versions of the benchmark, may provide broader insights into how well LLMs generalize. Additionally, further research into model architecture, possibly incorporating neuro-symbolic approaches, could help advance reasoning capabilities.

Conclusion

The paper contributes a significant resource for examining the reasoning capacities of LLMs. By highlighting the disparity in performance between humans and models and the mixed efficacy of prompting strategies, it encourages ongoing dialogue and progress in the quest for models that can genuinely reflect higher-order cognitive processes. NYT-Connections sets a noteworthy benchmark in the continuous evaluation and enhancement of LLM intelligence and reasoning skills.