Overview of "TimeMarker: A Versatile Video-LLM for Long and Short Video Understanding with Superior Temporal Localization Ability"

The paper presents TimeMarker, a Video Large Language Model (Video-LLM) designed to understand video content across a wide range of temporal scales, from short clips to long videos. It targets two weaknesses common in existing Video-LLMs: imprecise temporal localization and inefficient processing of videos whose durations vary widely. TimeMarker supports high-quality dialogue grounded in video content, and its strong temporal localization ability, the capacity to pinpoint when events occur in a video, is what distinguishes it from current approaches in the field.

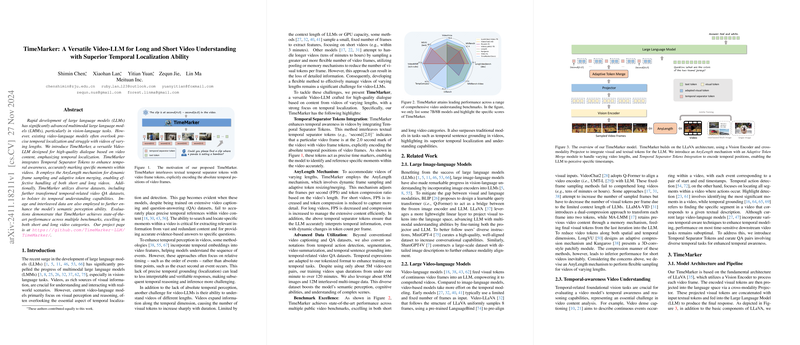

Innovation and Architecture

TimeMarker introduces two key innovations: Temporal Separator Tokens and the AnyLength mechanism. Temporal Separator Tokens are textual tokens interleaved with the video frame tokens to explicitly encode the absolute temporal position of each frame. Because every frame is tagged with its timestamp, the model can ground events to precise moments and handle temporal reasoning and search tasks more effectively, and the explicit time markers also make its outputs easier to interpret.
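The interleaving idea can be illustrated with a minimal sketch. It assumes each frame has already been encoded into a list of visual tokens (shown here as placeholder strings) and uses a hypothetical separator text of the form `Sec{t}`; the exact separator format and tokenization used by TimeMarker may differ.

```python
from typing import List, Sequence


def interleave_with_separators(
    frame_tokens: Sequence[Sequence[str]], timestamps: Sequence[float]
) -> List[str]:
    """Place a textual time-separator token before each frame's visual tokens.

    The "Sec{t}" format is a hypothetical stand-in for TimeMarker's separators.
    """
    if len(frame_tokens) != len(timestamps):
        raise ValueError("need exactly one timestamp per frame")
    sequence: List[str] = []
    for tokens, t in zip(frame_tokens, timestamps):
        sequence.append(f"Sec{t:.1f}")  # absolute time of this frame, as text
        sequence.extend(tokens)          # the frame's visual tokens follow it
    return sequence


if __name__ == "__main__":
    frames = [["<v0_0>", "<v0_1>"], ["<v1_0>", "<v1_1>"]]
    print(interleave_with_separators(frames, [0.0, 2.0]))
```

Running the usage example prints `['Sec0.0', '<v0_0>', '<v0_1>', 'Sec2.0', '<v1_0>', '<v1_1>']`. Because the separators are ordinary text, the language model can refer back to them (for example, answering "the event starts at Sec2.0") without needing a separate timestamp-regression head.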

The AnyLength mechanism combines dynamic frame sampling with adaptive token merging to accommodate videos of varying lengths. It adjusts the frame rate and the degree of token compression according to the video's duration, preserving fine detail in short videos while keeping long videos computationally tractable. This avoids the drawbacks of fixed-frame sampling or uniform token compression, which often result in information loss or increased computational demand.
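A simplified sketch of this idea follows. All parameters (`max_fps`, `max_frames`, `tokens_per_frame`, `token_budget`) are hypothetical, and plain average pooling of adjacent tokens stands in for TimeMarker's adaptive merging; it is not the paper's exact policy, only an illustration of trading frame rate and per-frame token count against video length.

```python
import math
from typing import List


def sample_timestamps(duration_s: float, max_fps: float = 2.0, max_frames: int = 128) -> List[float]:
    """Dense sampling for short videos; a capped frame count (lower fps) for long ones."""
    if duration_s <= 0:
        return [0.0]
    n = min(max_frames, max(1, math.floor(duration_s * max_fps)))
    step = duration_s / n  # effective sampling interval in seconds
    return [round(i * step, 3) for i in range(n)]


def pick_merge_factor(num_frames: int, tokens_per_frame: int, token_budget: int) -> int:
    """Smallest power-of-two merge factor that keeps total visual tokens within budget."""
    factor = 1
    while num_frames * math.ceil(tokens_per_frame / factor) > token_budget and factor < tokens_per_frame:
        factor *= 2
    return factor


def merge_tokens(tokens: List[List[float]], factor: int) -> List[List[float]]:
    """Average-pool groups of `factor` adjacent token vectors (stand-in for adaptive merging)."""
    if factor <= 1:
        return [list(t) for t in tokens]
    merged = []
    for start in range(0, len(tokens), factor):
        group = tokens[start:start + factor]
        merged.append([sum(vals) / len(group) for vals in zip(*group)])
    return merged


if __name__ == "__main__":
    for duration in (10.0, 3600.0):
        n = len(sample_timestamps(duration))
        print(duration, n, pick_merge_factor(n, tokens_per_frame=576, token_budget=6000))
```

With these illustrative settings, a 10-second clip gets 20 frames merged by a factor of 2, while a one-hour video is capped at 128 frames merged by a factor of 16, so both stay within the same token budget.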

Data and Training Strategies

The model is trained in three stages: multimodal alignment, high-quality knowledge learning, and instruction tuning. Its training data include existing video datasets transformed into temporal-related video QA, which strengthens both semantic and temporal understanding. A large amount of image and interleaved multi-image data is also used to improve semantic perception and to expose the model to tasks that require understanding sequences of visual inputs.

Empirical Evaluation

TimeMarker is evaluated on multiple benchmarks and achieves state-of-the-art results on both short- and long-video understanding. It also shows significant improvements over existing baselines on temporal tasks such as temporal sentence grounding: on the Charades-STA benchmark it reaches an R@1 score of 73.5% at IoU=0.3, and it outperforms traditional models even in a zero-shot setting, underscoring its temporal localization ability.
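For readers unfamiliar with the metric, "R@1, IoU=0.3" is the fraction of queries whose top-1 predicted segment overlaps the ground-truth segment with a temporal IoU of at least 0.3. A minimal sketch of that standard computation, assuming one predicted `(start, end)` segment per query in seconds (the example segments are made up for illustration):

```python
from typing import Sequence, Tuple

Segment = Tuple[float, float]  # (start, end) in seconds


def temporal_iou(pred: Segment, gt: Segment) -> float:
    """Intersection over union of two time intervals."""
    inter = max(0.0, min(pred[1], gt[1]) - max(pred[0], gt[0]))
    union = (pred[1] - pred[0]) + (gt[1] - gt[0]) - inter
    return inter / union if union > 0 else 0.0


def recall_at_1(preds: Sequence[Segment], gts: Sequence[Segment], iou_threshold: float = 0.3) -> float:
    """Share of queries whose single top prediction reaches the IoU threshold."""
    if not gts or len(preds) != len(gts):
        raise ValueError("need one prediction per ground-truth segment")
    hits = sum(temporal_iou(p, g) >= iou_threshold for p, g in zip(preds, gts))
    return hits / len(gts)


if __name__ == "__main__":
    preds = [(0.0, 10.0), (5.0, 15.0), (20.0, 25.0)]
    gts = [(2.0, 12.0), (0.0, 4.0), (20.0, 30.0)]
    print(recall_at_1(preds, gts))
```

Here the IoUs are 8/12, 0, and 5/10, so two of three queries pass the 0.3 threshold and R@1 is about 0.667. A reported 73.5% therefore means roughly three out of four grounding queries were localized at least this well.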

Implications and Future Directions

TimeMarker's architecture and methodology have significant implications for the development of Video-LLMs. By effectively enhancing temporal awareness and addressing video length variability, it sets a new standard for future models aiming for comprehensive video understanding. The integration of temporal separator tokens and a flexible sampling mechanism may inspire further research into temporal reasoning capabilities within multimodal models, potentially improving applications in video content analysis, automated video editing, and real-time video interaction systems.

In conclusion, TimeMarker represents a substantial advancement in Video-LLMs, offering a robust framework for addressing longstanding temporal and scalability issues. Its design choices and results suggest a promising avenue for applying large multimodal models to real-world video understanding tasks, providing a versatile tool for researchers and practitioners in the field.