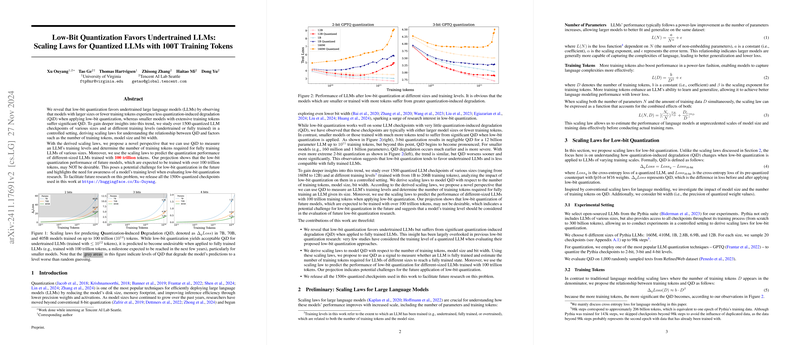

The paper conducts an extensive empirical investigation into how low-bit post-training quantization affects LLMs and derives a set of scaling laws that characterize quantization-induced degradation (QiD) as a function of three key factors: the number of training tokens, model size, and bit width. The analysis is based on over 1500 quantized checkpoints taken from the Pythia suite, covering a range of model sizes (from 160M to 12B parameters) and training levels (from 1B to roughly 206B training tokens). The authors show that low-bit quantization is markedly more benign for undertrained LLMs than for fully trained ones.

The main contributions and findings can be summarized as follows:

- Unified Scaling Law for QiD: The work introduces a unified scaling law to predict QiD, formulated as

  $$\Delta_q\mathcal{L}(N, D, P) = k \cdot \frac{D^{\beta}}{N^{\alpha} P^{\gamma}}$$

  where:

  - $\Delta_q\mathcal{L}$ is the quantization-induced degradation (the difference in cross-entropy loss between the quantized and 16-bit models),
  - $N$ is the number of non-embedding parameters,
  - $D$ is the number of training tokens,
  - $P$ is the bit width, and
  - $k$, $\alpha$, $\beta$, $\gamma$ are fitted constants.

  Fitting the law to the data yields positive values for the exponents $\alpha$, $\beta$, and $\gamma$, indicating that QiD increases with the number of training tokens and decreases with both model size and bit width.

- Empirical Evidence and Methodology:

  - For a 12B-parameter LLM, QiD remains negligible under 3-bit quantization for checkpoints trained on relatively few tokens, but the degradation becomes more pronounced as the training token count grows.

  - Smaller models (e.g., 160M to 1B parameters) experience significant QiD much earlier in training.

  - The empirical trends are robust across different quantization methods and remain consistent when evaluated on different datasets.

- Insights on Model Training Levels: A key observation is that low-bit quantization tends to favor undertrained models. The authors attribute this to the large weight fluctuations that occur early in training, which give such checkpoints an inherent robustness to the perturbations introduced by quantization. Fully trained checkpoints, in contrast, show very small weight variations, so even a minor quantization-induced shift can push the weights outside their narrow operating range, leading to significant performance degradation or even collapse.

- Predictive Projections and Future Challenges: Based on the derived scaling laws, the paper predicts the performance of quantized LLMs trained on 100 trillion tokens. The projections indicate that undertrained LLMs (trained on fewer tokens than a full training run would use) maintain acceptable QiD even at low bit widths, whereas fully trained models, especially smaller ones, suffer severe QiD. For instance, keeping the QiD of a 70B-scale model below 0.2 (about a 20% reduction in likelihood) under 4-bit quantization requires training on far fewer tokens than the 100 trillion of the projected setting. The paper's tables list the predicted training-token counts at which target QiD levels would be reached across various bit widths and model sizes.

- Extension to Native Low-Bit LLMs: The paper also extends the discussion to native low-bit models. Experiments with BitNet b1.58, a 1.58-bit (ternary) LLM, reveal that although its performance early in training is competitive with bf16 counterparts, a significant gap emerges in later training stages. This suggests that the precision bottleneck observed in post-quantized models also applies to models trained directly in low precision, casting doubt on the scalability of native low-bit architectures as training token counts continue to grow.

- Practical Implications and Recommendations: The work suggests that future low-bit quantization research should explicitly treat a model's training level as a critical factor. When evaluating new quantization techniques, experimenters must account for the greater sensitivity of fully trained models to quantization-induced errors. This becomes increasingly important as training datasets continue to grow, potentially reaching 100 trillion tokens, a scale at which current low-bit quantization methods may become impractical because of severe degradation.
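
The unified scaling law from the first bullet can be made concrete with a short sketch. The Python code below is a minimal illustration, not necessarily how the authors fit their constants: it assumes the product form QiD = k · D^β / (N^α · P^γ), with N the non-embedding parameter count, D the training tokens, and P the bit width, and recovers the four constants by ordinary least squares on the log-transformed law. The function names `qid` and `fit_qid_law`, the data grid, and the "true" constants are all hypothetical; the constants are made up for the demo and are not the paper's fitted values.

```python
import math
import random


def qid(n_params, n_tokens, bits, k, alpha, beta, gamma):
    """Assumed law (hypothetical helper): QiD = k * D**beta / (N**alpha * P**gamma)."""
    return k * n_tokens**beta / (n_params**alpha * bits**gamma)


def fit_qid_law(samples):
    """Fit (k, alpha, beta, gamma) by least squares on the log-linear form.

    log QiD = log k + beta*log D - alpha*log N - gamma*log P, so each
    (N, D, P, observed_qid) sample gives the feature row
    [1, log D, -log N, -log P]; observed_qid must be positive.
    """
    rows, targets = [], []
    for n, d, p, q in samples:
        rows.append([1.0, math.log(d), -math.log(n), -math.log(p)])
        targets.append(math.log(q))
    m = len(rows[0])
    # Normal equations (X^T X) w = X^T y.
    a = [[sum(r[i] * r[j] for r in rows) for j in range(m)] for i in range(m)]
    b = [sum(r[i] * t for r, t in zip(rows, targets)) for i in range(m)]
    # Gaussian elimination with partial pivoting.
    for col in range(m):
        piv = max(range(col, m), key=lambda i: abs(a[i][col]))
        a[col], a[piv] = a[piv], a[col]
        b[col], b[piv] = b[piv], b[col]
        for i in range(col + 1, m):
            f = a[i][col] / a[col][col]
            for j in range(col, m):
                a[i][j] -= f * a[col][j]
            b[i] -= f * b[col]
    # Back substitution.
    w = [0.0] * m
    for i in reversed(range(m)):
        w[i] = (b[i] - sum(a[i][j] * w[j] for j in range(i + 1, m))) / a[i][i]
    log_k, beta, alpha, gamma = w
    return math.exp(log_k), alpha, beta, gamma


# Synthetic sanity check. These "true" constants are made up for the demo
# and are NOT the values fitted in the paper.
true_consts = dict(k=0.05, alpha=0.3, beta=0.5, gamma=5.0)
rng = random.Random(0)
data = []
for n in (1.6e8, 1e9, 2.8e9, 1.2e10):      # model sizes (hypothetical grid)
    for d in (1e9, 1e10, 1e11, 2e11):      # training tokens
        for p in (2, 3, 4, 8):             # bit widths
            noise = math.exp(rng.gauss(0.0, 0.01))  # ~1% multiplicative noise
            data.append((n, d, p, qid(n, d, p, **true_consts) * noise))

k_hat, alpha_hat, beta_hat, gamma_hat = fit_qid_law(data)
print(k_hat, alpha_hat, beta_hat, gamma_hat)  # should land close to true_consts
```

Fitting in log space turns the power law into a linear regression, which is why positive fitted exponents translate directly into "QiD grows with D and shrinks with N and P". A real fit would replace the synthetic grid with measured (N, D, P, QiD) tuples.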
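
The 70B projection in the "Predictive Projections and Future Challenges" bullet amounts to inverting the scaling law for the token count. Assuming the form QiD = k · D^β / (N^α · P^γ), the largest token budget that keeps QiD at or below a target is D_max = (QiD_target · N^α · P^γ / k)^(1/β). The sketch below computes this with hypothetical constants (not the paper's fit), and also converts a QiD value into a likelihood reduction under the assumption that cross-entropy is measured in nats, so the quantized-to-16-bit per-token likelihood ratio is exp(−QiD). The helper names `max_tokens_for_qid` and `likelihood_reduction` are illustrative.

```python
import math


def max_tokens_for_qid(target_qid, n_params, bits, k, alpha, beta, gamma):
    """Invert the assumed law QiD = k * D**beta / (N**alpha * P**gamma) for D.

    Returns the largest training-token count whose predicted QiD stays at or
    below target_qid (QiD grows monotonically with D when beta > 0).
    """
    return (target_qid * n_params**alpha * bits**gamma / k) ** (1.0 / beta)


def likelihood_reduction(qid_nats):
    """Fractional drop in per-token likelihood for a given QiD.

    Assumes QiD is a difference in mean cross-entropy measured in nats,
    so the quantized / 16-bit likelihood ratio is exp(-QiD).
    """
    return 1.0 - math.exp(-qid_nats)


# Hypothetical constants for illustration only (NOT the paper's fitted values).
consts = dict(k=0.05, alpha=0.3, beta=0.5, gamma=5.0)
for bits in (2, 3, 4, 8):
    d_max = max_tokens_for_qid(0.2, 70e9, bits, **consts)
    print(f"{bits}-bit, 70B params: QiD <= 0.2 up to ~{d_max:.3g} tokens")

print(f"{likelihood_reduction(0.2):.3f}")  # 0.181
```

Under the nats assumption, a QiD of 0.2 corresponds to a likelihood reduction of roughly 18%, in line with the "about 20%" figure quoted above. Because D_max grows with both N and P, larger models and wider bit widths tolerate more training tokens before reaching the same QiD.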

In summary, the paper offers a comprehensive theoretical and empirical framework for understanding the interplay between training tokens, model size, and quantization precision. The derived scaling laws not only provide a predictive tool for assessing QiD in future large-scale LLMs but also highlight a fundamental limitation: low-bit quantization, while efficient for deployment, may intrinsically favor models that have not fully exploited the available training data. This finding has significant implications for both the design of emerging neural architectures and the practical deployment of quantized LLMs in high-performance applications.