This work details the construction and evaluation of generative agents designed to simulate the attitudes and behaviors of 1,052 specific individuals, grounded in extensive qualitative interview data (Park et al., 15 Nov 2024). The core contribution lies in demonstrating that agents instantiated with rich, idiosyncratic personal histories derived from interviews achieve higher fidelity in replicating individual-level survey responses and aggregate experimental results, with reduced demographic bias compared to agents based solely on demographics or short personas.

Agent Architecture

The generative agent architecture uses an LLM, specifically GPT-4o, augmented with individual-specific memory derived from comprehensive interview data.

- Memory Source: The primary memory component for each agent is the complete transcript of a two-hour, semi-structured, voice-to-voice interview conducted with the corresponding human participant. These interviews, averaging 6,491 words, capture detailed life histories, values, beliefs, social contexts, and personal narratives.

- Memory Injection: When responding to a query (e.g., a survey question), the agent's prompt includes the entire interview transcript, instructing the LLM to simulate the individual based on this detailed context.

- Expert Reflection Module: To enhance the LLM's synthesis of the lengthy transcripts, a pre-processing step involves an "expert reflection" module. GPT-4o analyzes each transcript from the perspectives of four simulated domain experts: a psychologist, a behavioral economist, a political scientist, and a demographer. Each "expert" generates bulleted insights relevant to their domain. These reflections are stored. When a query is posed, the system classifies the query's domain relevance and appends the corresponding expert's reflections to the prompt alongside the full transcript.

- Prompting Strategy: A chain-of-thought (CoT) reasoning process is employed. The LLM is prompted to:

- Interpret the query and response options.

- Reason about how the specific individual, based on their interview and relevant expert reflections, would evaluate each option.

- Synthesize this reasoning to select the most likely response.

- Output the final prediction. Numerical responses used a slightly adapted CoT format.

- Multi-Step Context: For sequential tasks, such as behavioral games or experiments with multiple rounds, agents receive summaries of previous stimuli and their own prior actions as additional context in subsequent prompts.
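To make the prompt assembly concrete, here is a minimal sketch of how the pieces described above (full transcript, domain-routed expert reflections, multi-round history, and chain-of-thought instructions) might be combined into one prompt. It is not the authors' code. In particular, the keyword-based `classify_domains` is a hypothetical toy stand-in for the LLM-based domain classifier, and all names (`AgentMemory`, `build_prompt`, `DOMAIN_KEYWORDS`) and the prompt wording are illustrative assumptions.

```python
import re
from dataclasses import dataclass, field

# HYPOTHETICAL keyword sets: a toy stand-in for the LLM-based domain classifier.
DOMAIN_KEYWORDS = {
    "psychologist": {"feel", "personality", "anxious", "happy"},
    "behavioral_economist": {"money", "dollars", "split", "invest"},
    "political_scientist": {"government", "vote", "party", "policy"},
    "demographer": {"age", "children", "income", "education"},
}

# Illustrative wording of the four CoT steps described above.
COT_INSTRUCTIONS = (
    "1. Interpret the question and each response option.\n"
    "2. Reason about how this person, given the interview and expert notes, "
    "would evaluate each option.\n"
    "3. Synthesize that reasoning and pick the most likely response.\n"
    "4. End with a final line of the form 'Answer: <option>'."
)


@dataclass
class AgentMemory:
    transcript: str                        # full interview transcript
    reflections: dict[str, list[str]]      # expert domain -> bulleted insights
    history: list[str] = field(default_factory=list)  # summaries of earlier rounds


def classify_domains(query: str) -> list[str]:
    """Return expert domains whose keywords appear as whole words in the query."""
    words = set(re.findall(r"[a-z']+", query.lower()))
    return [d for d, kws in DOMAIN_KEYWORDS.items() if words & kws]


def build_prompt(person: str, memory: AgentMemory, query: str,
                 options: list[str]) -> str:
    """Assemble transcript + routed reflections + history + query + CoT steps."""
    sections = [f"Simulate {person}, the person interviewed below, "
                "and answer exactly as they would.",
                "=== Interview transcript ===\n" + memory.transcript]
    for domain in classify_domains(query):
        notes = memory.reflections.get(domain, [])
        if notes:
            sections.append(f"=== {domain} reflections ===\n"
                            + "\n".join(f"- {n}" for n in notes))
    if memory.history:  # multi-step tasks: prior stimuli and the agent's own actions
        sections.append("=== Previous rounds ===\n" + "\n".join(memory.history))
    sections.append("=== Question ===\n" + query
                    + "\nOptions: " + "; ".join(options))
    sections.append("=== Instructions ===\n" + COT_INSTRUCTIONS)
    return "\n\n".join(sections)
```

For a question such as "Should the government spend more on roads?", only the political scientist's reflections are appended; the resulting string would then be sent to the LLM, and the final "Answer:" line parsed as the agent's response.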

An architectural overview can be conceptualized as:

    Query -> [Domain Classifier] -> Relevant Expert Reflections
                                              |
                                              v
    LLM (GPT-4o) <- Prompt { Instruction: "Simulate Person X"
                             Context: Full Interview Transcript + Relevant Reflections
                             Query: Target Question/Task
                             CoT Instructions }
         |
         v
    [CoT Reasoning Steps] -> Agent's Predicted Action/Response

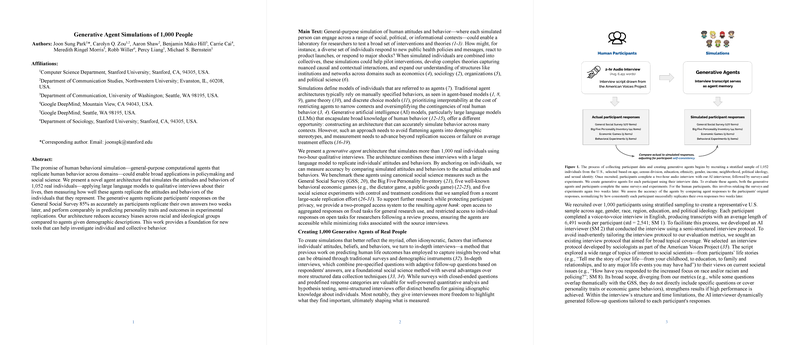

Simulation Design and Data Collection

The simulation involved creating agents for a large, representative sample and collecting comprehensive evaluation data.

- Participant Sample: 1,052 participants were recruited via the firm Bovitz, using stratified sampling to match the U.S. population on key demographic variables (age, census division, education, ethnicity, gender, income, neighborhood, political ideology, sexual identity).

- AI-Driven Interviews: Each participant engaged in a two-hour voice interview conducted by a custom AI Interviewer Agent. This agent followed a semi-structured script adapted from the American Voices Project (AVP), covering diverse life domains. The AI interviewer could generate dynamic follow-up questions, ensuring both breadth and depth while maintaining consistency across interviews. Transcripts formed the agents' core memory.

- Evaluation Instruments (Phase 1): Post-interview, participants completed an online battery comprising:

- General Social Survey (GSS): 177 core categorical items, 6 numerical items.

- Big Five Inventory (BFI-44): 44 Likert items.

- Behavioral Economic Games (N=5): Dictator, Trust (P1 & P2), Public Goods, Prisoner's Dilemma (incentivized).

- Social Science Experiments (N=5): Sampled from Camerer et al. (2016), involving treatment/control conditions.

- Self-Consistency Measurement (Phase 2): Two weeks later, participants repeated the GSS, BFI, games, and experiments (excluding the interview). This provided a crucial measure of human response stability over time, serving as the benchmark for agent performance.
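The study matched its sample to U.S. population targets on many variables at once. The sketch below illustrates only the simplest version of that idea: proportional quotas for a single stratification variable, rounded with the largest-remainder method so the quotas sum exactly to the target sample size. The age-group shares are hypothetical, and the recruitment firm's actual procedure is not described in this summary.

```python
import math


def allocate_quotas(total: int, shares: dict[str, float]) -> dict[str, int]:
    """Proportional quotas per stratum, rounded with the largest-remainder
    method so that the quotas sum exactly to `total`."""
    norm = sum(shares.values())
    exact = {k: total * s / norm for k, s in shares.items()}
    quotas = {k: math.floor(x) for k, x in exact.items()}
    leftover = total - sum(quotas.values())
    # Hand the remaining slots to the strata with the largest fractional parts.
    by_remainder = sorted(exact, key=lambda k: exact[k] - quotas[k], reverse=True)
    for k in by_remainder[:leftover]:
        quotas[k] += 1
    return quotas


# HYPOTHETICAL age-group shares, for illustration only (not the study's targets).
age_shares = {"18-29": 0.20, "30-49": 0.33, "50-64": 0.25, "65+": 0.22}
print(allocate_quotas(1052, age_shares))
```

Matching several variables jointly (as the study did) requires cross-classified cells or iterative weighting rather than independent one-variable quotas.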

Experimental Methodology and Evaluation

The evaluation framework focused on comparing agent predictions against actual participant responses, normalized by human self-consistency, and assessing performance relative to baseline methods.

- Normalization Metric: Because human responses vary over time, the primary evaluation metric was normalized accuracy (for categorical data) or normalized correlation (for continuous data), calculated as:

$$\text{Normalized performance} = \frac{\text{Agent performance (agent predictions vs. Phase 1 responses)}}{\text{Participant self-consistency (Phase 2 vs. Phase 1 responses)}}$$

where performance is accuracy (GSS) or Pearson correlation (BFI, games) against the Phase 1 responses. A score of 1.0 indicates the agent predicts the participant's Phase 1 response as well as the participant replicates their own Phase 1 response in Phase 2. Mean Absolute Error (MAE) was also reported but not normalized: a participant who answers identically in both phases has a self-consistency MAE of zero, which would make the ratio undefined.

- Baselines: The interview-based agents were compared against:

- Demographic Agents: Prompted only with basic demographics (age, gender, race, ideology).

- Persona Agents: Prompted with short self-descriptive paragraphs written by participants.

- Random Chance: Chance-level performance from random guessing.

- Exploratory Baselines: Included "Composite" agents (using actual survey/game responses as input), "Summary" agents (using LLM-generated interview summaries), and "Random Lesion" agents (using partially redacted interviews).

- Evaluation Tasks:

- Individual Prediction: Predicting Phase 1 GSS, BFI, and game responses for each participant.

- Experimental Replication: Predicting aggregate treatment effects (Cohen's d, p-values) across the five social science experiments, compared to the effects observed in the human sample.

- Bias Analysis: Demographic Parity Difference (DPD) was calculated as the performance gap between the highest- and lowest-performing demographic subgroups (based on political ideology, race, gender) for each agent type. Lower DPD indicates less performance variation across groups. Regression analyses further probed biases.

- Pre-registration: Key analyses were pre-registered.

Key Findings and Performance

The paper reports substantial predictive capabilities for the interview-based agents.

- Individual Prediction Accuracy:

- GSS: Achieved 0.85 normalized accuracy (raw accuracy: 68.85%, participant self-replication: 81.25%).

- BFI: Achieved 0.80 normalized correlation (raw correlation: r=0.78, participant self-replication: r=0.95).

- Economic Games: Achieved 0.66 normalized correlation (raw correlation: r=0.66, participant self-replication: r=0.99).

- Superiority of Interview Data: Interview-based agents significantly outperformed demographic and persona-based agents on the GSS (by 0.14 to 0.15 in normalized accuracy) and on BFI metrics. This highlights the substantial predictive value of rich, idiosyncratic interview content beyond demographics or brief self-descriptions. On the economic games, however, differences in MAE between agent types were not statistically significant, suggesting these specific behaviors may depend less on the deep context captured by interviews or may be harder for the LLM to infer.

- Experimental Replication: The agent population successfully replicated aggregate treatment effects. Like the human sample, the agents replicated four of the five experiments and failed to replicate the same fifth one. Agent-predicted effect sizes correlated highly (r = 0.98) with human-observed effect sizes, yielding a normalized correlation of 0.99.

- Bias Reduction: Interview-based agents exhibited significantly lower DPD across political ideology and race subgroups compared to demographic-based agents for GSS, BFI, and economic games. This suggests grounding agents in individual interviews mitigates reliance on potentially biased demographic stereotypes. Gender DPD was low for all agent types.

- Exploratory Findings:

- Interview-based agents outperformed "Composite" agents (using other survey/game data), indicating interviews capture unique predictive information.

- Agents based on interviews with up to 80% of their content randomly removed still outperformed Composite agents, suggesting high information density in the interviews.

- Agents using LLM-generated summaries ("Summary Agents") performed well (0.83 normalized GSS accuracy), indicating that much of the predictive value lies in the factual content of the interviews, although summaries were slightly less effective than full transcripts.

- "Maximal" agents using all available data (interview + surveys + games) performed similarly to interview-only agents, suggesting the interviews captured most of the predictive signal present in the combined dataset.

Implementation Considerations

Deploying such agent simulations involves several practical considerations:

- Computational Cost: Utilizing large models like GPT-4o with extremely long prompts (full multi-thousand-word transcripts plus expert reflections) incurs significant computational costs for inference, both in terms of time and API expenses, especially at the scale of 1,000+ agents.

- Prompt Engineering: The effectiveness relies heavily on the specific prompting strategy, including the instruction format, the inclusion of expert reflections, and the chain-of-thought structure. Tuning these prompts may be necessary depending on the LLM used and the specific task.

- Data Privacy and Security: The use of deeply personal interview transcripts necessitates robust data privacy protocols. The proposed two-tier access model (open aggregated data, restricted individual agent access via application) attempts to balance research utility with privacy risks. Implementing such a model requires secure infrastructure and governance processes.

- Interview Quality: The quality and depth of the initial interview are paramount. The use of an AI interviewer aimed for consistency, but variations in interview quality could impact agent fidelity. Human-led interviews might introduce different trade-offs between personalization and consistency.

- LLM Limitations: Performance is ultimately bounded by the capabilities of the underlying LLM. While GPT-4o performed well, inherent biases, limitations in reasoning, or failures to capture subtle nuances in the transcript remain potential issues. The agents may not perfectly capture all types of human behavior, particularly highly situational or non-verbal aspects not reflected in the transcript.

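- Scalability: While the study demonstrates scalability to ~1,000 agents, scaling to millions would increase inference costs roughly in proportion to the number of agents and queries, and conducting two-hour interviews at that scale would pose substantial data-collection and data-management challenges.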
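A back-of-envelope estimate helps make the cost point concrete. The sketch below assumes that every query re-sends the full transcript, uses the reported average of 6,491 words per interview, and converts words to tokens with a rule-of-thumb ratio of 1.3 tokens per word for English text; that ratio is an assumption, not a figure from the study. Reflection length is not reported in this summary, so it defaults to zero, and no per-token price is assumed.

```python
def estimate_input_tokens(n_agents: int, queries_per_agent: int,
                          transcript_words: float = 6491,
                          reflection_words: float = 0,
                          tokens_per_word: float = 1.3) -> float:
    """Rough input-token count when every query re-sends the full transcript.
    tokens_per_word=1.3 is a rule-of-thumb ASSUMPTION for English text;
    reflection_words defaults to 0 because its size is not reported here."""
    tokens_per_prompt = (transcript_words + reflection_words) * tokens_per_word
    return n_agents * queries_per_agent * tokens_per_prompt


# Illustrative: treat each GSS/BFI item, game, and experiment as one query
# (177 + 6 + 44 + 5 + 5 = 237), a simplification of the actual protocol.
queries = 177 + 6 + 44 + 5 + 5
print(f"{estimate_input_tokens(1052, queries):,.0f} input tokens")
```

Under these assumptions a single pass over all instruments for 1,052 agents comes to roughly 2.1 billion input tokens, before counting reflections, chain-of-thought output tokens, or retries. The estimate grows linearly with both agents and queries.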

Applications in Research and Practice

This methodology offers potential applications for social science and related fields:

- Computational Social Science: Provides a platform for testing theories of individual and collective behavior by simulating interventions, policy changes, or environmental shocks on a representative virtual population grounded in real individual data.

- Pre-Testing and Piloting: Allows for rapid, low-cost piloting of surveys, experimental designs, or communication strategies before deployment with human participants.

- Exploring Counterfactuals: Enables researchers to explore "what-if" scenarios that are difficult, costly, or ethically problematic to study in the real world.

- Augmenting Traditional Methods: Can complement surveys and lab experiments by providing richer behavioral predictions and insights into the mechanisms underlying observed patterns.

- Reduced Bias Simulation: Offers a method for simulating diverse populations that captures more individual heterogeneity and potentially exhibits less stereotypical bias compared to methods relying solely on coarse demographic data.

The work provides a foundation for building high-fidelity, individually grounded agent-based models. By leveraging extensive qualitative data through LLMs, it enables simulations that capture a significant degree of human behavioral consistency and complexity, moving beyond demographic averages towards more personalized predictions. The rigorous evaluation against human self-consistency and the focus on bias mitigation are key aspects of this approach. The development and proposed access model for the 1,000-agent bank represent a potentially valuable resource for the research community.