Understanding Failures of LLMs in Software Engineering Tasks

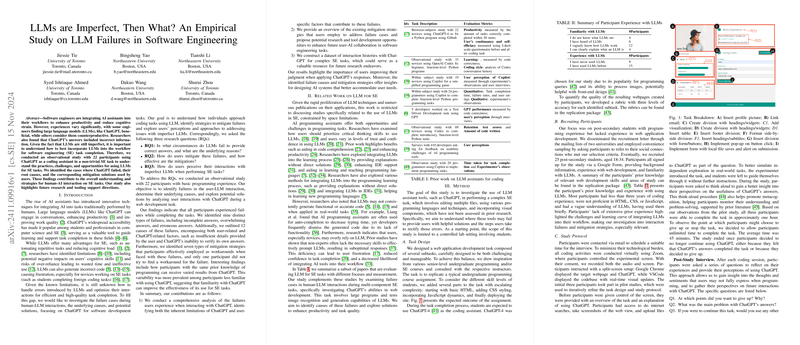

AI assistants built on LLMs are increasingly used for software engineering (SE) tasks across the industry. However, models such as ChatGPT still have clear limitations. The paper "LLMs are Imperfect, Then What? An Empirical Study on LLM Failures in Software Engineering" by Tie, Yao, Li, et al. empirically analyzes how LLMs fall short when used by people with only basic programming experience.

The paper starts from the premise that LLMs are imperfect and examines how they perform specifically on software engineering tasks. The authors identify the circumstances in which LLMs fail to provide correct answers, the strategies users adopt to mitigate those failures, and how users perceive their interactions with LLMs.

Methodology and Findings

The researchers conducted an observational study in which 22 participants used ChatGPT while attempting a series of web development tasks. Participants were deliberately selected for their limited SE experience, so that the study would surface common failure scenarios and the mitigation strategies users adopt.

The paper categorizes failures into nine distinct types, including incomplete answers, overwhelming or overly complex responses, and erroneous or context-lacking outputs. "Incomplete Answer" and "Overwhelming Answer" were the most prominent, each affecting over 30% of the interactions. On the user side, common causes included prompts that omitted necessary details and tasks that were too complex to submit in one request. On the ChatGPT side, causes included failing to account for the user's level of expertise and failing to maintain context across the conversation, which resulted in incorrect or unresponsive outputs.

The authors note that although users developed various strategies to work around these limitations, such as clarifying their prompts and adding contextual details, these techniques were only partially effective. Revising a prompt improved the outcome in some cases, but in others it led users into a "prompting rabbit-hole": repeatedly re-prompting without ever reaching a satisfactory solution.

Users’ Perceptions and Implications

Notably, 59% of ChatGPT's responses were judged helpful, yet integrating them successfully still required significant judgment from the user. The paper reports that some participants felt empowered by the tool and more confident about tackling similar tasks in the future, while others were frustrated by shallow replies and by the tool's poor user experience design.

Users frequently treated ChatGPT as a pragmatic tool for producing solutions on request rather than as a learning aid. This view may explain why they relied on it to resolve coding issues quickly, potentially at the cost of deeper understanding or skill development. The paper draws substantial implications for LLM design: responses should be tailored to the user's level of expertise, and interactions should be context-aware, in order to reduce ineffective output and user frustration.

Future Research Directions

The research opens several directions for SE researchers and LLM developers. Future work could aim to understand and mitigate the "prompting rabbit-hole" phenomenon, for example through meta-cognitive prompting interventions. SE researchers might also explore which tools best complement LLMs to scaffold learning in software engineering without sacrificing the quick delivery of usable code. Better UX design for LLM platforms could make interactions both efficient and educationally valuable. Finally, because LLM capabilities keep evolving, their use in real-world SE tasks needs to be re-examined continually.

Overall, the paper offers valuable insight into how people interact with LLMs in software engineering contexts, and it underscores the need for targeted improvements in both model design and user guidance so that humans and LLMs can collaborate more effectively and productively.