Analysis of "Spider: Any-to-Many Multimodal LLM"

The paper introduces Spider, a framework for Any-to-Many Modalities Generation (AMMG) built around an LLM. Where traditional multimodal LLMs are limited to pairwise combinations such as ‘Text + Image’ or ‘Text + Audio’, Spider can generate multiple modality combinations in a single response. This extends what multimodal LLMs can do in interactive, mixed-media settings.

Core Contributions

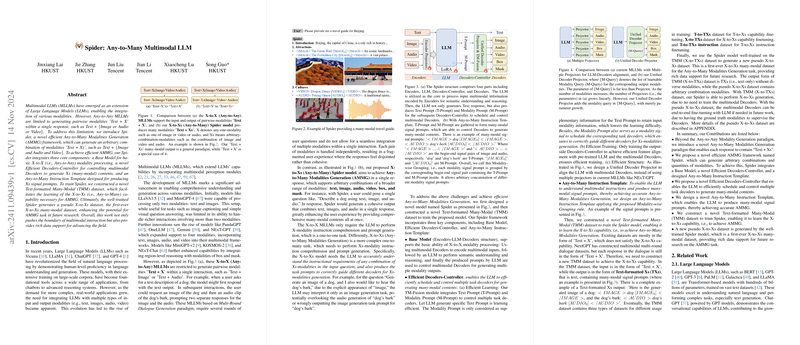

Spider’s design combines several components aimed at efficient and accurate AMMG:

- Base Model Structure: The framework utilizes a foundational structure consisting of Encoders, LLM, Decoders-Controller, and multiple Modality-specific Decoders. This structure ensures the cohesive processing and integration of inputs spanning diverse modalities.

- Decoders-Controller: This component schedules and controls the modality-specific decoders. For efficiency in both learning and structure, it uses a Unified Decoder Projector that aligns LLM outputs with the multimodal decoders, which simplifies the task of producing any-to-many outputs.

- Any-to-Many Instruction Template: A structured template lets the LLM parse, understand, and execute multimodal instructions. It organizes the outputs for the various modalities in a single format that contains both text prompts and modality-specific prompts (e.g., image, audio).

- Training and Dataset Innovation: To support Spider’s development, the authors created a Text-formatted Many-Modal (TMM) dataset that fosters learning of X-to-Xs capabilities. This new data asset is crucial in training the model to handle diverse combinations of input and output modalities effectively.
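To make the template and controller ideas above more concrete, here is a minimal sketch of how a single LLM response containing several modality prompts could be split and routed to decoders. The tag syntax (`<IMAGE>…</IMAGE>` and so on), the `Segment` type, and the stub decoders are all assumptions made for illustration; the paper's actual template format and Decoders-Controller are learned components and may look quite different.

```python
import re
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

# Hypothetical tag syntax, chosen only for this illustration.
SEGMENT_RE = re.compile(r"<(IMAGE|AUDIO|VIDEO)>(.*?)</\1>", re.DOTALL)


@dataclass
class Segment:
    modality: str  # "text", "image", "audio", or "video"
    prompt: str    # text to display, or the prompt handed to a decoder


def parse_response(response: str) -> List[Segment]:
    """Split a response into ordered text and modality-prompt segments."""
    segments: List[Segment] = []
    cursor = 0
    for match in SEGMENT_RE.finditer(response):
        text = response[cursor:match.start()].strip()
        if text:
            segments.append(Segment("text", text))
        segments.append(Segment(match.group(1).lower(), match.group(2).strip()))
        cursor = match.end()
    tail = response[cursor:].strip()
    if tail:
        segments.append(Segment("text", tail))
    return segments


def route(segments: List[Segment],
          decoders: Dict[str, Callable[[str], str]]) -> List[Tuple[str, str]]:
    """Pass text through unchanged; send every other segment to its decoder."""
    outputs = []
    for seg in segments:
        if seg.modality == "text":
            outputs.append(("text", seg.prompt))
        else:
            outputs.append((seg.modality, decoders[seg.modality](seg.prompt)))
    return outputs


# Stub decoders standing in for real image/audio/video generators.
STUB_DECODERS = {
    "image": lambda p: f"[image generated from: {p}]",
    "audio": lambda p: f"[audio generated from: {p}]",
    "video": lambda p: f"[video generated from: {p}]",
}

if __name__ == "__main__":
    reply = ("Here is a beach. <IMAGE>a sunny beach</IMAGE> "
             "And its sound: <AUDIO>ocean waves</AUDIO>")
    for modality, content in route(parse_response(reply), STUB_DECODERS):
        print(modality, "->", content)
```

The point of the sketch is the any-to-many shape: one response, an ordered mix of text and modality prompts, and a dispatch step that decides which decoder handles each prompt.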

Experimental Results

In the reported experiments, Spider outperforms existing models across a range of multimodal tasks, including X-to-Text and Text-to-X generation on benchmark datasets such as COCO-caption and AudioCaps. Its results on modality-specific outputs such as images and audio stand out given the added challenge of simultaneous multimodal integration.

Results for X-to-Xs generation on the TMM test set are also reported, using the B@4 (BLEU-4) metric, and highlight the model’s strength in producing complex many-modal outputs. Compared with previous models such as NExT-GPT, Spider appears to do better at both following instructions and meeting multimodal output demands, which suggests that its architecture and training approach are effective.
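For readers unfamiliar with B@4, the following is a minimal sketch of sentence-level BLEU-4 against a single reference, with whitespace tokenization and no smoothing. It is meant only to show what the metric measures; benchmark scores are normally computed at corpus level with established tooling, and the exact scoring setup used in the paper is not described here.

```python
import math
from collections import Counter


def ngram_counts(tokens, n):
    """Count all contiguous n-grams in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def bleu4(candidate: str, reference: str) -> float:
    """Sentence-level BLEU-4 with one reference and no smoothing."""
    cand = candidate.lower().split()
    ref = reference.lower().split()
    if not cand:
        return 0.0

    log_precisions = []
    for n in range(1, 5):
        cand_counts = ngram_counts(cand, n)
        ref_counts = ngram_counts(ref, n)
        # Clipped overlap: a candidate n-gram is credited at most as many
        # times as it occurs in the reference.
        overlap = sum(min(c, ref_counts[g]) for g, c in cand_counts.items())
        total = sum(cand_counts.values())
        if overlap == 0 or total == 0:
            return 0.0  # without smoothing, any zero precision gives 0
        log_precisions.append(math.log(overlap / total))

    # Brevity penalty discourages candidates shorter than the reference.
    if len(cand) >= len(ref):
        brevity_penalty = 1.0
    else:
        brevity_penalty = math.exp(1 - len(ref) / len(cand))

    # Geometric mean of the 1- to 4-gram precisions.
    return brevity_penalty * math.exp(sum(log_precisions) / 4)


if __name__ == "__main__":
    print(bleu4("a dog runs on the sand", "a dog runs on the beach"))
```

In the example call, the 1- to 4-gram precisions are 5/6, 4/5, 3/4, and 2/3, so the score is the fourth root of their product, 1/3.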

Implications for Future Research

Spider’s results mark progress toward LLMs capable of versatile multimodal interaction, and the framework opens several avenues for future research:

- Enhanced Multimodal Training: Since Spider integrates multiple modalities in a single framework, better pre-training techniques could further improve both its understanding and its generation.

- Broader Application Scenarios: The ability to swiftly adapt to various input modality combinations suggests potential for Spider-type methodologies in interactive AI systems, multimedia content generation, and real-time simulation environments.

- Dataset Expansion: The introduction of pseudo X-to-Xs datasets paves the way for more comprehensive datasets that cover additional application-specific modalities and a wider range of contexts.

In conclusion, Spider advances multimodal LLM development and offers a template for future systems that must handle complex modality combinations concurrently. Its effectiveness in generating and integrating diverse outputs makes it a promising foundation for subsequent work in the domain.