Audiovisual Deepfake Detection with ChatGPT: A Comparative Analysis

The paper "How Good is ChatGPT at Audiovisual Deepfake Detection: A Comparative Study of ChatGPT, AI Models and Human Perception" examines the growing field of audiovisual deepfake detection by comparing ChatGPT's capabilities with those of state-of-the-art AI models and human evaluators. Deepfakes, synthetic audio and video generated with deep learning techniques, undermine confidence in the authenticity of media and raise ethical concerns, particularly in politics, security, and personal privacy.

Methodology and Experimental Design

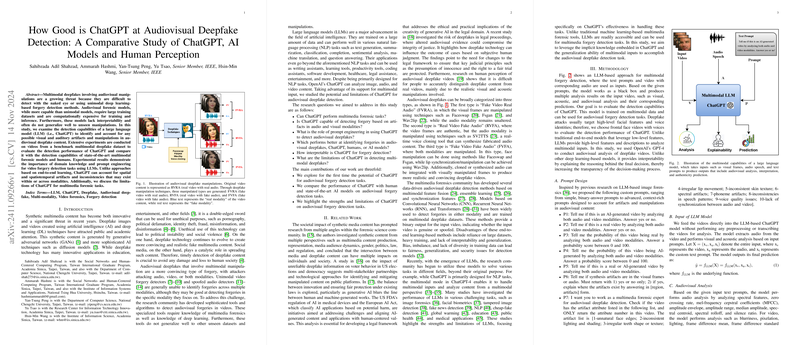

Central to the methodology is the question of how LLMs such as ChatGPT can assess both the audio and the visual modality of a video to identify deepfakes. The researchers evaluate ChatGPT's detection capabilities on videos drawn from a well-established multimodal deepfake dataset. Rather than training an end-to-end detector, they query ChatGPT through custom prompts that guide its analysis toward spatial and spatiotemporal inconsistencies in the audiovisual content. This approach underscores the importance of domain-specific knowledge and careful prompt engineering, which serves as the main lever for applying ChatGPT's multimodal capabilities to forensic analysis.
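A prompt-based interface of this kind can be sketched as two small pieces: a function that assembles a prompt listing the spatial, spatiotemporal, and audio cues to inspect, and a parser that turns the model's free-text answer into a label. The Python sketch below is illustrative only. The cue wording, the `VERDICT:` output convention, and the names `build_prompt` and `parse_verdict` are hypothetical assumptions, not the prompts used in the paper, and how the video itself is passed to ChatGPT is outside the sketch.

```python
import re
from typing import List, Optional

# Hypothetical cue lists (NOT the paper's prompts), grouped by modality.
VISUAL_CUES: List[str] = [
    "blending boundaries or texture mismatches around the face (spatial)",
    "flicker or unnatural motion between consecutive frames (spatiotemporal)",
]
AUDIO_CUES: List[str] = [
    "robotic timbre or unnatural prosody in the voice",
    "lip movements that do not match the speech (audio-visual sync)",
]


def build_prompt(include_audio: bool = True, include_visual: bool = True) -> str:
    """Assemble a forensic-analysis prompt that targets the selected modalities."""
    lines = ["You are assisting with a forensic review of a video."]
    cues: List[str] = []
    if include_visual:
        cues += VISUAL_CUES
    if include_audio:
        cues += AUDIO_CUES
    if cues:
        lines.append("Check for the following artifacts:")
        lines += [f"- {cue}" for cue in cues]
    # Ask for a fixed final line so the answer can be parsed mechanically.
    lines.append(
        "Explain what you observe, then end with exactly one line: "
        "VERDICT: REAL or VERDICT: FAKE."
    )
    return "\n".join(lines)


def parse_verdict(response: str) -> Optional[str]:
    """Return 'real' or 'fake' from the last VERDICT line, or None if none is present."""
    matches = re.findall(r"VERDICT:\s*(REAL|FAKE)", response, flags=re.IGNORECASE)
    return matches[-1].lower() if matches else None
```

Taking the last match means that if the model echoes the instruction line before giving its own verdict, the final verdict still wins. Toggling `include_audio` and `include_visual` mirrors the idea of comparing single-modality prompts with prompts that target both modalities.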

The paper takes an empirical approach, testing a range of text prompts with ChatGPT to determine which formulations yield the most accurate judgments of video authenticity. These prompts are designed to steer ChatGPT toward recognizing artifacts characteristic of deepfakes.
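Comparing prompts in this way amounts to scoring every candidate prompt on the same labeled videos and ranking the prompts by accuracy. The Python sketch below shows that loop under stated assumptions: `query` is a hypothetical callable standing in for a ChatGPT call that returns `"real"` or `"fake"`, and the prompt texts, video IDs, and stub model are invented for illustration; they are not the paper's prompts or results.

```python
from typing import Callable, Dict, List, Tuple

Label = str  # "real" or "fake"


def prompt_accuracy(
    prompt: str,
    samples: List[Tuple[str, Label]],
    query: Callable[[str, str], Label],
) -> float:
    """Fraction of (video_id, true_label) samples labeled correctly under `prompt`."""
    if not samples:
        raise ValueError("samples must be non-empty")
    correct = sum(1 for video_id, truth in samples if query(prompt, video_id) == truth)
    return correct / len(samples)


def rank_prompts(
    prompts: Dict[str, str],
    samples: List[Tuple[str, Label]],
    query: Callable[[str, str], Label],
) -> List[Tuple[str, float]]:
    """Score every named prompt and return (name, accuracy) pairs, best first."""
    scores = [(name, prompt_accuracy(text, samples, query)) for name, text in prompts.items()]
    return sorted(scores, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    # Toy labeled set and a stub "model" (hypothetical) that only catches fakes
    # when the prompt asks about artifacts. Purely illustrative.
    samples = [("v1", "fake"), ("v2", "real"), ("v3", "fake"), ("v4", "real")]
    prompts = {
        "generic": "Is this video real or fake?",
        "artifact": "Inspect lip-sync, facial and audio artifacts. Is this video real or fake?",
    }

    def stub_query(prompt: str, video_id: str) -> Label:
        if "artifact" in prompt and video_id in {"v1", "v3"}:
            return "fake"
        return "real"

    print(rank_prompts(prompts, samples, stub_query))
    # prints [('artifact', 1.0), ('generic', 0.5)]
```

Keeping `query` as a plain callable separates the evaluation logic from any particular model client, so the same loop could score a real API wrapper or, as here, a deterministic stub.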

Key Findings and Results

ChatGPT's performance in this setting reveals several noteworthy points. Prompts focused on artifact detection yielded more accurate analyses when they were specific and targeted audio and visual artifacts together. Even so, ChatGPT's average detection accuracy was comparable to that of human evaluators but lagged behind deep learning models trained specifically for deepfake detection, which achieved higher precision and recall.
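Accuracy, precision, and recall measure different things in this comparison: accuracy counts all correct verdicts, precision asks how many videos flagged as fake really are fake, and recall asks how many fakes were caught. The Python sketch below computes all three from binary verdicts. It assumes `"fake"` is the positive class (a common convention that this summary does not specify), and the labels in the example are invented for illustration, not results from the paper.

```python
from typing import Dict, Sequence


def detection_metrics(
    y_true: Sequence[str], y_pred: Sequence[str], positive: str = "fake"
) -> Dict[str, float]:
    """Accuracy, precision and recall for binary real/fake verdicts."""
    if len(y_true) != len(y_pred) or len(y_true) == 0:
        raise ValueError("y_true and y_pred must be non-empty and of equal length")
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)  # fakes flagged as fake
    fp = sum(t != positive and p == positive for t, p in pairs)  # reals flagged as fake
    fn = sum(t == positive and p != positive for t, p in pairs)  # fakes that slipped through
    correct = sum(t == p for t, p in pairs)
    return {
        "accuracy": correct / len(pairs),
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
    }


if __name__ == "__main__":
    truth = ["fake", "fake", "real", "real", "fake"]
    verdicts = ["fake", "real", "fake", "real", "fake"]
    print(detection_metrics(truth, verdicts))
```

In this toy example three of five verdicts are correct (accuracy 0.6), while two true positives, one false positive, and one false negative give precision and recall of 2/3 each. Two detectors with similar accuracy can therefore still differ in how many fakes they miss.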

A key point raised by the paper is ChatGPT's interpretability: unlike many black-box AI models, it can explain in natural language what it observed and why it judged a video real or fake. This interpretability, however, comes at the cost of raw detection accuracy compared with cutting-edge forensic models.

Implications and Future Directions

The paper highlights both practical and theoretical implications of using LLMs such as ChatGPT for audiovisual deepfake detection. Practically, such LLMs offer an accessible tool that does not require extensive domain-specific training data, allowing broader deployment. Theoretically, the findings underscore the role of prompt engineering in improving model responsiveness and accuracy on complex multimodal tasks.

Looking forward, future developments in AI could aim to combine the interpretative strengths of LLMs with the accuracy of specialized forensic models. By doing so, the forensics community could develop detection tools that not only achieve high accuracy but also provide insights into the decision-making process, improving the robustness and transparency of deepfake detection methodologies.

This research highlights both the potential and the challenges of adopting general-purpose LLMs for complex audiovisual forensic tasks, and it calls for further innovation in model training, prompt engineering, and interpretability to extend the capabilities of such AI systems in multimedia forensics.