Scaling Laws for Precision

The paper "Scaling Laws for Precision" investigates how numerical precision affects the training and inference of large language models (LLMs). It introduces "precision-aware" scaling laws that predict how much loss degrades under low-precision training and post-training quantization. The authors develop and validate functional forms that capture the trade-offs among precision, parameter count, and dataset size.

Summary and Key Results

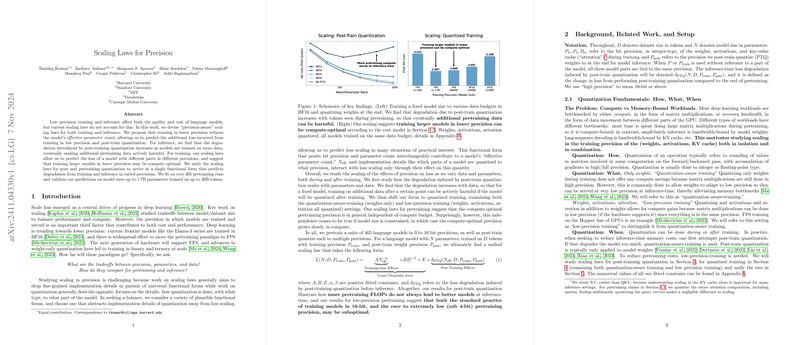

A central finding is that, for a fixed model size, post-training quantization (PTQ) degrades performance more as the amount of training data increases. This implies a tipping point beyond which additional pretraining data harms the quantized model at inference time, because the PTQ degradation grows faster than the benefit of the extra data. The authors also argue that training larger models in reduced precision can be compute-optimal, which challenges the current practice of favoring higher precision in pretraining.
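The tipping point can be illustrated with a small numerical sketch. It assumes a Chinchilla-style pretraining loss plus a PTQ penalty that grows with the token count, shrinks with the parameter count, and decays exponentially with the post-quantization bit width. All constants and function names below are illustrative placeholders, not the paper's fitted values.

```python
import math

# Placeholder constants chosen for illustration only (NOT fitted values).
A, ALPHA = 400.0, 0.34   # parameter-count term
B, BETA = 410.0, 0.28    # data term
E = 1.7                  # irreducible loss
C_T, GAMMA_D, GAMMA_N, GAMMA_POST = 0.05, 0.5, 0.5, 1.5  # PTQ penalty term


def pretrain_loss(n_params, n_tokens):
    """Chinchilla-style loss of the unquantized model."""
    return A * n_params ** -ALPHA + B * n_tokens ** -BETA + E


def ptq_degradation(n_params, n_tokens, post_bits):
    """Assumed loss increase caused by quantizing weights to post_bits after training."""
    ratio = n_tokens ** GAMMA_D / n_params ** GAMMA_N
    return C_T * ratio * math.exp(-post_bits / GAMMA_POST)


def loss_after_ptq(n_params, n_tokens, post_bits):
    """Loss of the quantized model: pretraining loss plus the PTQ penalty."""
    return pretrain_loss(n_params, n_tokens) + ptq_degradation(n_params, n_tokens, post_bits)


if __name__ == "__main__":
    # Fixed model size, growing dataset, 3-bit post-training quantization.
    for n_tokens in (1e10, 1e11, 1e12):
        print(f"{n_tokens:.0e} tokens -> loss {loss_after_ptq(1e8, n_tokens, 3):.3f}")
```

With these placeholder values, the quantized loss first falls and then rises as the token count grows at a fixed model size, which is the qualitative behavior described above. The location of the turning point depends entirely on the assumed constants.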

Overall, the paper contributes a unified scaling law that predicts loss as a function of parameter count, data, and precision, validated on models of up to 1.7 billion parameters trained on up to 26 billion tokens. A critical insight is that precision and parameter count act interchangeably in determining a model's "effective parameter count," which highlights the compute that can be saved by lowering precision during training.
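One way to make the "effective parameter count" idea concrete is the sketch below. It assumes that each quantized component (weights, activations, KV cache) scales the raw parameter count by a saturating factor of the form 1 - exp(-bits / gamma). The sensitivity constants and the helper `params_to_match` are hypothetical and serve only to show the trade-off.

```python
import math

# Hypothetical sensitivity constants (placeholders, NOT fitted values).
GAMMA_W, GAMMA_A, GAMMA_KV = 2.5, 2.0, 1.0


def effective_params(n_params, weight_bits=16, act_bits=16, kv_bits=16):
    """Raw parameter count discounted by the precision of each component."""
    return (
        n_params
        * (1.0 - math.exp(-weight_bits / GAMMA_W))
        * (1.0 - math.exp(-act_bits / GAMMA_A))
        * (1.0 - math.exp(-kv_bits / GAMMA_KV))
    )


def params_to_match(n_ref, ref_bits, new_bits):
    """Parameters needed at new_bits weight precision to equal the
    effective parameter count of n_ref parameters at ref_bits."""
    target = effective_params(n_ref, weight_bits=ref_bits)
    return n_ref * target / effective_params(n_ref, weight_bits=new_bits)


if __name__ == "__main__":
    n_ref = 1e9
    for bits in (8, 4, 3):
        n_new = params_to_match(n_ref, 16, bits)
        print(f"{bits}-bit weights: {n_new / n_ref:.3f}x the parameters of the 16-bit model")
```

Under this assumed form, the factor saturates quickly, so moderate precision costs few effective parameters while very low precision requires a noticeably larger model to compensate.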

Implications and Future Directions

The research has broad practical implications for the development and deployment of LLMs. It suggests that training costs can be reduced by using lower precision without a significant loss in performance. As the next generation of hardware moves toward supporting even lower precision operations (e.g., FP4), these findings can guide more efficient model scaling strategies.

The findings also challenge the de facto standard of 16-bit precision in training. The paper suggests that a precision of around 7-8 bits might be compute-optimal for pretraining, indicating that neither the 16-bit status quo nor the push toward ever lower precision is necessarily optimal. This insight could influence the choice of numerical precision when future models are trained or adapted.
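The reasoning behind an intermediate optimum can be sketched as a budget search. The example assumes that compute scales linearly with bit width, normalized so that 16 bits recovers the familiar 6ND estimate, and that weights, activations, and KV cache share one precision and one sensitivity constant. Every constant is a placeholder chosen for illustration, so the specific optimum it produces is not a result from the paper.

```python
import math

# Placeholder constants (NOT fitted values).
A, ALPHA = 400.0, 0.34
B, BETA = 410.0, 0.28
E = 1.7
GAMMA = 4.0  # assumed shared sensitivity for weights, activations, KV cache


def effective_fraction(bits):
    """Fraction of parameters that remain 'effective' at the given precision."""
    return (1.0 - math.exp(-bits / GAMMA)) ** 3


def best_loss_at_precision(compute, bits):
    """Best loss at a fixed compute budget and precision.

    Assumes compute = (6 / 16) * N * D * bits, so N * D is fixed per precision.
    """
    budget = 16.0 * compute / (6.0 * bits)          # allowed N * D
    a_eff = A * effective_fraction(bits) ** -ALPHA  # precision-adjusted coefficient
    b_eff = B * budget ** -BETA
    # Closed-form minimizer of a_eff * N^-alpha + b_eff * N^beta over N.
    n_opt = (ALPHA * a_eff / (BETA * b_eff)) ** (1.0 / (ALPHA + BETA))
    d_opt = budget / n_opt
    loss = a_eff * n_opt ** -ALPHA + B * d_opt ** -BETA + E
    return loss, n_opt, d_opt


def optimal_precision(compute, candidate_bits=range(2, 17)):
    """Integer bit width with the lowest achievable loss at this budget."""
    return min(candidate_bits, key=lambda bits: best_loss_at_precision(compute, bits)[0])


if __name__ == "__main__":
    for compute in (1e19, 1e21, 1e23):
        print(f"compute {compute:.0e}: best precision {optimal_precision(compute)} bits")
```

In this toy setup, lower precision buys more parameters and tokens per unit of compute but discounts the effective parameter count, so the search settles on an intermediate bit width rather than either end of the range.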

Speculative Future Developments

Extending this approach beyond the scope of the paper suggests several directions for future research:

- Broader Architecture Variations: Extending the study to diverse neural architectures could confirm or challenge the generalizability of these scaling laws across different model designs.

- System-Level Integrations: Investigating the integration of precision-aware scaling laws in modern distributed training frameworks could provide insights into practical systems bottlenecks and opportunities.

- Downstream Task Performance: While the paper focuses on pretraining loss, translating precision-aware scaling into improved downstream task performance remains an open question that could enhance model applicability.

- Hardware Co-Design: Collaborative efforts with hardware manufacturers could optimize systems architecture to fully exploit low-precision training, dynamically adjusting precision based on workload characteristics.

By proposing a model where bit precision and parameter counts are interchangeable levers in deep learning scalability, this paper sets a foundation for nuanced and efficient resource use in AI model training and inference. Experimental results suggest a reevaluation of precision practices that could lead to substantial computational efficiency gains.