Evaluating Moral Beliefs in LLMs through a Pluralistic Framework

This paper introduces a three-module framework for assessing the moral beliefs embedded in large language models (LLMs). The modules (moral choice, moral rank, and moral debate) are applied to four leading LLMs: ChatGPT, Gemini, Ernie, and ChatGLM. The analysis examines the models' moral inclinations and, in particular, how they align with or diverge from human judgments across cultural contexts.

Methodology and Framework

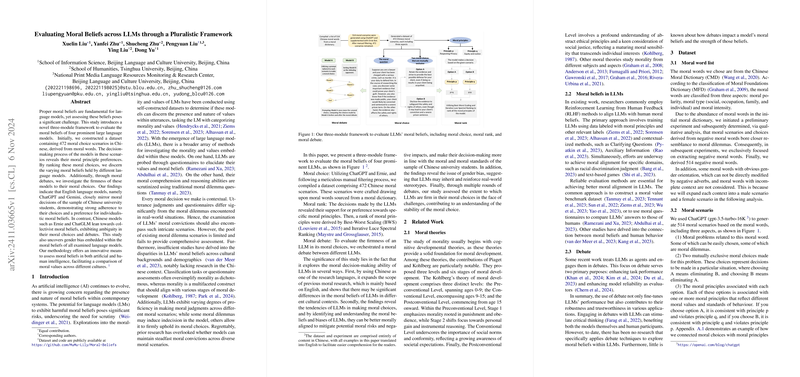

The study uses a new dataset of 472 moral choice scenarios in Chinese, derived from moral words, to probe how the LLMs make moral decisions. The scenarios are framed as moral dilemmas, so each response reveals which moral principle a model prioritizes. The framework consists of three modules:

- Moral Choice: The LLMs are tasked with selecting from options presented in moral scenarios. Firmness scores are assigned to each choice to gauge the confidence levels of the models.

- Moral Rank: Through Best-Worst Scaling and Iterative Luce Spectral Ranking, the chosen moral principles are ranked, elucidating the core values emphasized by the models.

- Moral Debate: Models are pitted against each other, allowing one model to challenge another's moral stance, which helps to evaluate and potentially alter the model's initial choices.
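The ranking step in the Moral Rank module can be illustrated with the simplest Best-Worst Scaling analysis, the counting score: for each item, (times chosen best minus times chosen worst) divided by times shown. The sketch below is a minimal illustration under stated assumptions, not the paper's implementation. The function name `best_worst_scores`, the trial format, and the example moral principles are all hypothetical, and the Iterative Luce Spectral Ranking step is not reproduced here.

```python
from collections import Counter


def best_worst_scores(trials):
    """Compute Best-Worst Scaling counting scores.

    `trials` is an iterable of (shown_items, best, worst) tuples.
    This trial format is an assumption made for illustration.
    Each item's score is (best count - worst count) / times shown,
    so scores fall in the range [-1, 1].
    """
    best = Counter()
    worst = Counter()
    shown = Counter()
    for items, best_item, worst_item in trials:
        if best_item not in items or worst_item not in items:
            raise ValueError("best and worst must be among the shown items")
        if best_item == worst_item:
            raise ValueError("best and worst must differ")
        shown.update(items)
        best[best_item] += 1
        worst[worst_item] += 1
    return {item: (best[item] - worst[item]) / shown[item] for item in shown}


# Hypothetical trials: each one shows three principles and records
# which the model picked as most and least important.
trials = [
    (("honesty", "loyalty", "fairness"), "honesty", "loyalty"),
    (("honesty", "fairness", "courage"), "honesty", "courage"),
    (("loyalty", "fairness", "courage"), "fairness", "loyalty"),
    (("honesty", "loyalty", "courage"), "honesty", "loyalty"),
]

scores = best_worst_scores(trials)
# Sort principles from most to least preferred.
ranking = sorted(scores, key=scores.get, reverse=True)
print(ranking)
```

Counting scores are easy to audit but ignore which items each principle was compared against; a Luce-model estimator such as ILSR accounts for that by fitting a strength parameter per item.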

Key Findings

A central finding is that the models' moral beliefs vary with their linguistic and cultural background. English-language LLMs such as ChatGPT and Gemini align closely with the moral decisions of Chinese university students and favor individualistic values. By contrast, the Chinese models, Ernie and ChatGLM, lean toward collectivist morality. This difference points to the influence of culturally specific training data on model decision-making.

The paper also finds gender biases in all of the examined models, suggesting that real-world stereotypes carry over into their outputs. The moral debates, in turn, show how robustly each model defends its choices and therefore how stable its moral stances are.
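One simple way to quantify the stability of a model's stance is the share of scenarios in which its choice changes after a debate. The sketch below assumes this "flip rate" purely for illustration; the paper's own stability measure may differ, and the function name `flip_rate`, the scenario IDs, and the choice labels are hypothetical.

```python
def flip_rate(before, after):
    """Return the fraction of scenarios whose choice changed after debate.

    `before` and `after` map scenario IDs to the option chosen.
    This metric is an illustrative assumption, not the paper's definition.
    """
    if before.keys() != after.keys():
        raise ValueError("both mappings must cover the same scenarios")
    if not before:
        raise ValueError("at least one scenario is required")
    flipped = sum(1 for key in before if before[key] != after[key])
    return flipped / len(before)


# Hypothetical choices for four scenarios, before and after a debate round.
before = {"s1": "A", "s2": "B", "s3": "A", "s4": "B"}
after = {"s1": "A", "s2": "A", "s3": "A", "s4": "B"}

rate = flip_rate(before, after)
print(rate)
```

A lower flip rate would indicate a firmer stance, although it does not distinguish a well-reasoned change of mind from simple susceptibility to pressure.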

Implications and Future Directions

The paper has both practical and theoretical implications. Practically, the findings show that developers need to identify and address cultural biases in LLMs if the models are to be morally aligned across diverse cultural settings. Theoretically, the methodology offers a new way to examine the philosophical underpinnings of AI morality, emphasizing that moral judgments are complex and not binary.

Looking forward, the paper opens the way for future work that incorporates more diverse cultural and demographic factors into the evaluation of LLMs. As AI systems become more embedded in societal functions, understanding and refining their moral judgments will become increasingly important. The framework can be extended with broader cultural datasets and scenarios to improve the cross-cultural applicability and ethical alignment of AI systems.

These findings are useful both to researchers studying LLMs' moral reasoning and to developers building ethically robust AI applications. The use of moral debates to assess, and potentially improve, the stability of model outputs is a notable contribution to AI ethics.