Exploration of Run-Time Strategies for Medical Challenge Problems

The paper "From Medprompt to o1: Exploration of Run-Time Strategies for Medical Challenge Problems and Beyond" examines run-time strategies for improving the performance of LLMs on medical challenge problems. It compares OpenAI's o1-preview model with GPT-4 series models guided by prompt engineering techniques such as Medprompt.

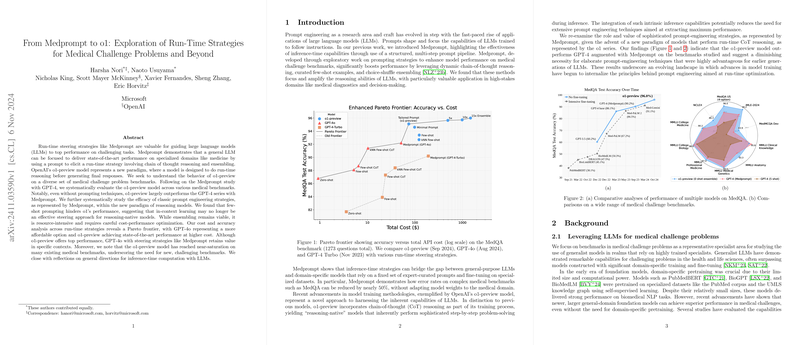

The paper's main subject is the o1-preview model, which incorporates chain-of-thought (CoT) reasoning directly into its training, making it a "reasoning-native" model. This design contrasts with the earlier approach, in which performance gains relied heavily on prompt engineering strategies such as Medprompt. The authors evaluate the model systematically across a range of medical benchmarks and find that o1-preview surpasses GPT-4 models augmented with Medprompt, even without the use of prompting techniques.

A key finding is that classical prompting strategies, especially few-shot prompting, are less effective with reasoning-native models like o1-preview than they were with earlier models. This result suggests that such strategies become less necessary as the intrinsic reasoning capabilities of LLMs improve.
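To make the distinction between the two prompting styles concrete, here is a minimal sketch of how a zero-shot and a few-shot prompt for a multiple-choice question might be assembled. The function names, the prompt layout, and the toy placeholder questions are assumptions made for illustration; they are not the templates used in the paper.

```python
from typing import Dict, List, Tuple

# Hypothetical exemplar type: (question, options, correct answer letter).
Exemplar = Tuple[str, Dict[str, str], str]


def format_question(question: str, options: Dict[str, str]) -> str:
    """Render a question and its lettered options as plain text."""
    lines = [f"Question: {question}"]
    lines += [f"{letter}. {text}" for letter, text in sorted(options.items())]
    return "\n".join(lines)


def zero_shot_prompt(question: str, options: Dict[str, str]) -> str:
    """Zero-shot: the model sees only the target question."""
    return format_question(question, options) + "\nAnswer:"


def few_shot_prompt(
    exemplars: List[Exemplar], question: str, options: Dict[str, str]
) -> str:
    """Few-shot: solved exemplars are prepended to the target question."""
    blocks = [format_question(q, o) + f"\nAnswer: {a}" for q, o, a in exemplars]
    blocks.append(zero_shot_prompt(question, options))
    return "\n\n".join(blocks)


if __name__ == "__main__":
    toy_options = {"A": "first option", "B": "second option"}
    print(few_shot_prompt([("Toy exemplar?", toy_options, "B")],
                          "Toy target?", toy_options))
```

The only difference between the two prompts is the block of solved exemplars, which is the part the summary above describes as losing its value for reasoning-native models.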

Performance Evaluation and Benchmark Saturation

The empirical results show that o1-preview outperforms the GPT-4 baselines across numerous medical benchmarks. For instance, o1-preview achieved 96.0% accuracy on the MedQA benchmark using simple zero-shot prompting, compared with 90.2% for GPT-4 with Medprompt. The paper also introduces a new multilingual benchmark, JMLE-2024, derived from the Japanese medical licensing exam, to mitigate concerns about benchmark saturation and about test set contamination in models trained on expansive datasets.
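Near the top of a benchmark, a gap in accuracy is often easier to interpret as a reduction in error rate. The short calculation below uses only the two MedQA figures quoted above; the helper names are hypothetical and the framing as "relative error reduction" is an illustration, not a metric reported in the paper.

```python
def error_rate(accuracy_pct: float) -> float:
    """Error rate in percentage points for a given accuracy in percent."""
    return 100.0 - accuracy_pct


def relative_error_reduction(baseline_acc: float, new_acc: float) -> float:
    """Fraction of the baseline's errors that the new system removes."""
    baseline_err = error_rate(baseline_acc)
    return (baseline_err - error_rate(new_acc)) / baseline_err


O1_PREVIEW_MEDQA = 96.0      # zero-shot accuracy quoted above
GPT4_MEDPROMPT_MEDQA = 90.2  # GPT-4 with Medprompt, quoted above

absolute_gain = O1_PREVIEW_MEDQA - GPT4_MEDPROMPT_MEDQA
relative_gain = relative_error_reduction(GPT4_MEDPROMPT_MEDQA, O1_PREVIEW_MEDQA)

if __name__ == "__main__":
    print(f"Absolute gain: {absolute_gain:.1f} points")        # 5.8 points
    print(f"Relative error reduction: {relative_gain:.1%}")    # 59.2%
```

In other words, a gain of 5.8 points corresponds to removing roughly three fifths of the remaining errors (from 9.8% down to 4.0%), which is also why little headroom remains on this benchmark.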

The analysis also shows that o1-preview achieved high accuracy not only on standard English datasets but also on non-English datasets such as JMLE-2024, indicating that it handles diverse linguistic contexts well without explicit translation into English.

Methodological Insights and Implications

The paper evaluates the role of various prompting techniques and finds that methods like ensembling remain useful, especially for reducing variance, but are resource-intensive. The findings suggest that tailored, minimal prompts combined with strategic ensembling improve performance without complex prompting interventions. The paper also emphasizes that reasoning tokens, a new feature of the o1 model, let it optimize its reasoning steps internally, often yielding higher accuracy when more reasoning time is allocated.
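The cost trade-off of ensembling can be seen in a minimal majority-vote sketch. Here `ask_model` is a hypothetical callable standing in for one sampled model response; it is not an API from the paper or from any library, and the paper's own ensembling procedure may differ in its details.

```python
from collections import Counter
from typing import Callable


def ensemble_answer(
    ask_model: Callable[[str], str], prompt: str, n_samples: int = 5
) -> str:
    """Query the model n_samples times and return the most frequent answer.

    Each sample is a separate model call, so cost grows linearly with
    n_samples. Ties resolve to the tied answer that was seen first.
    """
    votes = Counter(ask_model(prompt) for _ in range(n_samples))
    return votes.most_common(1)[0][0]


if __name__ == "__main__":
    # Stand-in for a stochastic model: replays a fixed list of answers.
    canned = iter(["A", "B", "A", "C", "A"])
    print(ensemble_answer(lambda _prompt: next(canned), "toy prompt"))
```

Voting smooths out run-to-run variation in individual answers, but five samples cost five times as much as one, which is the resource concern raised above.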

Future Directions and Challenges

The paper underlines the shift in the LLM landscape towards models that perform sophisticated reasoning inherently, reducing the dependence on intricate prompt engineering. It proposes several directions for future research, including improving model efficiency through metareasoning, optimizing input usage, and integrating external resources at run time. The paper also encourages exploration of adaptive ensembling strategies that balance the trade-offs between accuracy, cost, and computational resource allocation.
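One way to picture an adaptive ensembling strategy is a vote that stops sampling as soon as the leading answer can no longer be overtaken. The sketch below is an assumption about what such a strategy could look like, not a method described in the paper; `ask_model` is again a hypothetical callable returning one sampled answer.

```python
from collections import Counter
from typing import Callable, Tuple


def adaptive_ensemble(
    ask_model: Callable[[str], str], prompt: str, max_samples: int = 9
) -> Tuple[str, int]:
    """Majority vote with early stopping.

    Returns (answer, samples_used). Sampling stops once the lead of the top
    answer over the runner-up exceeds the number of samples still available,
    because no later votes could change the winner.
    """
    votes: Counter = Counter()
    for used in range(1, max_samples + 1):
        votes[ask_model(prompt)] += 1
        ranked = votes.most_common(2)
        lead = ranked[0][1]
        runner_up = ranked[1][1] if len(ranked) > 1 else 0
        remaining = max_samples - used
        if lead - runner_up > remaining:
            return ranked[0][0], used
    # Budget exhausted with a tie: fall back to the tied answer seen first.
    return votes.most_common(1)[0][0], max_samples


if __name__ == "__main__":
    # A model that always answers "A" is settled after 5 of 9 samples.
    print(adaptive_ensemble(lambda _prompt: "A", "toy prompt"))
```

Easy questions, where samples agree, then consume about half the budget, while contested questions use all of it, which is one simple way to trade accuracy against cost.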

The paper also points out certain limitations. The rapid evolution of LLMs risks saturating existing benchmarks, which reduces their usefulness for evaluating state-of-the-art models. The paper further acknowledges that more work is needed to unlock the full potential of models like o1-preview, particularly in identifying interaction practices suited to their advanced reasoning capabilities.

In essence, the paper contributes to understanding the practical and theoretical implications of evolving run-time strategies for LLMs in high-stakes domains such as medicine, and it outlines a path for future improvements in AI-based problem solving.