MILU: A Comprehensive Benchmark for Indic Language Understanding

Evaluating the capabilities of LLMs across diverse languages remains a pressing challenge in NLP. The research paper "MILU: A Multi-task Indic Language Understanding Benchmark" introduces MILU, an extensive evaluation benchmark designed to assess LLMs in 11 Indic languages. The benchmark is a significant step towards closing the evaluation gap for languages that use non-Latin scripts and are considered low-resource, such as many of those spoken in India.

Benchmark Scope and Design

The MILU benchmark spans 8 domains and 42 subjects, covering both general and culturally specific knowledge. The domains include STEM fields as well as culturally relevant areas such as Arts & Humanities, Social Sciences, and Law & Governance. The benchmark has an India-centric design and draws its material from national, state-level, and regional examinations. The subjects range from local history, arts, festivals, and laws to traditional academic subjects such as science and mathematics. This breadth means MILU tests cultural understanding as well as linguistic competence, which distinguishes it from benchmarks that cover only general knowledge.

Evaluation and Findings

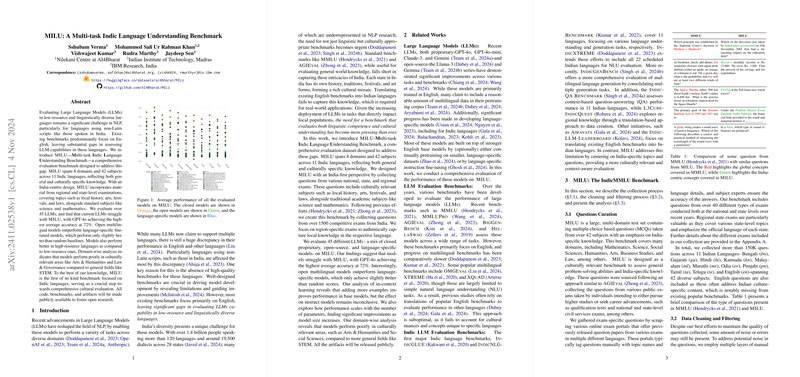

The authors evaluate 45 LLMs on MILU: a mix of closed proprietary models, open multilingual models, and language-specific fine-tuned models. The results show that current LLMs struggle with MILU, with GPT-4o achieving the highest average accuracy at 72%. Notably, open multilingual models outperform language-specific fine-tuned models, which perform only slightly better than random baselines. This trend matters given the growing interest in LLMs that perform well across many languages without extensive language-specific fine-tuning.
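To make "only slightly better than random baselines" concrete, the following is a minimal sketch of how a model's accuracy can be compared against chance. It assumes multiple-choice questions with four options (so random guessing yields 25%); the option count, the function names, and the example numbers are illustrative assumptions, not values taken from the paper.

```python
import math


def chance_accuracy(num_options: int) -> float:
    """Expected accuracy of uniform random guessing on a multiple-choice question."""
    return 1.0 / num_options


def margin_over_chance(correct: int, total: int, num_options: int = 4):
    """Return (accuracy, z), where z is a normal-approximation z-score
    of the observed accuracy against the chance level.

    num_options=4 is an assumption made for this illustration.
    """
    p0 = chance_accuracy(num_options)
    acc = correct / total
    # Standard error of the accuracy under the null hypothesis of random guessing.
    se = math.sqrt(p0 * (1.0 - p0) / total)
    return acc, (acc - p0) / se


# Hypothetical model: 270 correct answers out of 1000 questions.
acc, z = margin_over_chance(correct=270, total=1000)
print(f"accuracy={acc:.3f}, z-score vs. chance={z:.2f}")
```

A z-score below roughly 2 suggests the model is hard to distinguish from random guessing at that sample size, which is one way to read a "marginal" improvement over the baseline.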

A detailed analysis shows that models perform notably better in high-resource languages such as Hindi and Bengali than in low-resource ones. By domain, models are more accurate in general fields such as STEM and struggle with culturally relevant domains. This underscores the need for training data with better coverage of culturally specific material in order to raise performance in non-STEM domains.
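A per-language and per-domain breakdown of this kind can be computed from per-question results. The sketch below assumes each result is a dictionary with hypothetical `language`, `domain`, and `correct` fields; the records are toy data invented for illustration and are not MILU results.

```python
from collections import defaultdict


def accuracy_by(records, key):
    """Group per-question results by `key` and return the accuracy of each group."""
    totals = defaultdict(lambda: [0, 0])  # group -> [num_correct, num_total]
    for record in records:
        bucket = totals[record[key]]
        bucket[0] += int(record["correct"])
        bucket[1] += 1
    return {group: correct / total for group, (correct, total) in totals.items()}


# Toy records (made up for this example; field names are assumptions).
records = [
    {"language": "hi", "domain": "STEM", "correct": True},
    {"language": "hi", "domain": "Arts & Humanities", "correct": False},
    {"language": "bn", "domain": "STEM", "correct": True},
    {"language": "bn", "domain": "Arts & Humanities", "correct": False},
    {"language": "bn", "domain": "STEM", "correct": False},
]

per_language = accuracy_by(records, "language")
per_domain = accuracy_by(records, "domain")
print(per_language)
print(per_domain)
```

Reusing one grouping function for both keys keeps the language and domain breakdowns consistent with each other, since both are derived from the same per-question records.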

Implications and Future Directions

MILU gives researchers a dedicated benchmark for assessing LLMs on Indic languages. It lays a foundation for building models that understand and generate contextually and culturally appropriate responses. The results point to clear areas for improvement, particularly the need for more inclusive multilingual datasets that reflect cultural diversity.

From a practical standpoint, MILU highlights the need for more robust multilingual strategies that address the disparity in model performance across languages and domains. Future research should focus on model architectures and training methods that make better use of culturally specific data.

In conclusion, MILU provides a comprehensive evaluation framework and sets the stage for future research on inclusive, culturally competent LLMs. It is expected to stimulate further studies and to support the creation of models that better understand the linguistic and cultural intricacies of India's diverse languages.