Overview of "Foundations and Recent Trends in Multimodal Mobile Agents: A Survey"

This survey reviews the foundational models and recent trends behind multimodal mobile agents, a field driven by growing demand for agents that adapt in real time and process multimodal data efficiently. This essay summarizes and analyzes the survey's findings, highlights its main points, and suggests directions for future inquiry.

Key Technological Advancements

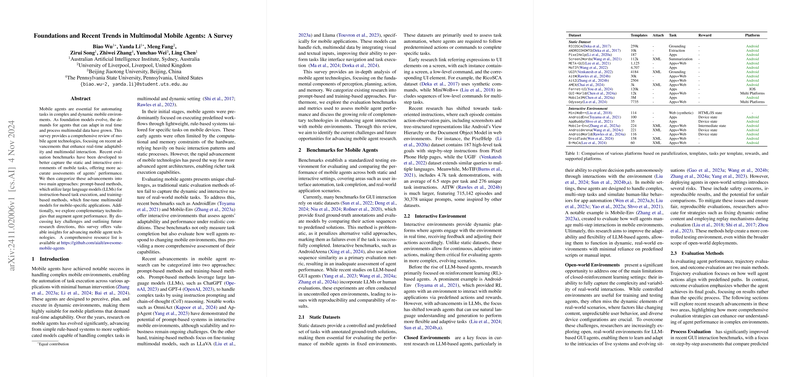

Mobile agent research falls into two broad categories: prompt-based methods and training-based methods. Prompt-based methods use large language models (LLMs) to execute tasks from instructions. Systems such as OmniAct and AppAgent show that LLMs like GPT-4 can carry out complex tasks through instruction prompting and chain-of-thought (CoT) reasoning. However, scalability and robustness remain open challenges.
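As a rough illustration of the prompt-based approach, the sketch below assembles an instruction prompt with a chain-of-thought cue and parses the model's reply. It is a minimal sketch under stated assumptions: `UIElement`, `build_cot_prompt`, `parse_action`, and the `ACTION:` reply format are hypothetical names chosen for this example, not the prompts or APIs used by OmniAct, AppAgent, or any other system in the survey. No model is called; the reply is supplied as a string.

```python
from dataclasses import dataclass


@dataclass
class UIElement:
    """Hypothetical description of one on-screen element."""
    index: int
    kind: str
    text: str


def build_cot_prompt(instruction, elements, history):
    """Build an instruction prompt that asks the model to reason step by step."""
    lines = [
        "You are an agent operating a mobile app.",
        f"Task: {instruction}",
        "Visible UI elements:",
    ]
    lines += [f"  [{e.index}] {e.kind}: {e.text}" for e in elements]
    lines.append("Previous actions:")
    # Fall back to a placeholder when no action has been taken yet.
    lines += [f"  - {h}" for h in history] or ["  (none)"]
    lines.append(
        "Think step by step, then finish with one line of the form "
        "ACTION: tap(<index>) or ACTION: type(<index>, <text>)."
    )
    return "\n".join(lines)


def parse_action(reply):
    """Return the text after the last 'ACTION:' line in the model reply."""
    for line in reversed(reply.strip().splitlines()):
        if line.startswith("ACTION:"):
            return line[len("ACTION:"):].strip()
    raise ValueError("no ACTION line in model reply")
```

Keeping the reasoning free-form and confining the executable part to a single, strictly formatted line is one common way to make CoT output parseable; other output formats would work equally well.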

In contrast, training-based methods fine-tune multimodal models for mobile-specific applications. Examples include LLaVA and similar models, which combine visual and textual inputs to improve task execution, especially in interface navigation. Together, the two paradigms mark a shift from static rule-based systems to dynamic, adaptable frameworks.

Evaluation Benchmarks

Evaluating mobile agents remains difficult, particularly when it comes to capturing the dynamic and interactive nature of mobile tasks. Recent benchmarks such as AndroidEnv and Mobile-Env provide interactive environments that assess agent performance under realistic conditions and measure adaptability in addition to task completion. These platforms address the limitations of traditional static datasets and give a fuller view of agent capabilities.
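The difference between interactive evaluation and scoring against a static dataset can be sketched with a toy episode loop. Everything here is an assumption made for illustration: `ToyEnv` is a hypothetical stand-in and does not reproduce the AndroidEnv or Mobile-Env interfaces, and the two reported metrics (success rate and mean steps on successful episodes) are simple examples rather than the metrics those benchmarks define.

```python
class ToyEnv:
    """Hypothetical interactive task: reach a target screen within a step budget."""

    def __init__(self, target, max_steps=10):
        self.target = target
        self.max_steps = max_steps
        self.screen = 0
        self.steps = 0

    def reset(self):
        self.screen = 0
        self.steps = 0
        return self.screen

    def step(self, action):
        """Apply one action and return (observation, done, success)."""
        self.steps += 1
        if action == "next":
            self.screen += 1
        elif action == "back":
            self.screen = max(0, self.screen - 1)
        success = self.screen == self.target
        done = success or self.steps >= self.max_steps
        return self.screen, done, success


def evaluate(policy, envs):
    """Run one episode per environment and aggregate simple metrics."""
    steps_on_success = []
    for env in envs:
        obs = env.reset()
        done = success = False
        while not done:
            obs, done, success = env.step(policy(obs))
        if success:
            steps_on_success.append(env.steps)
    mean_steps = (
        sum(steps_on_success) / len(steps_on_success) if steps_on_success else None
    )
    return {
        "success_rate": len(steps_on_success) / len(envs),
        "mean_steps_on_success": mean_steps,
    }
```

Because the environment responds to each action, the same loop can report efficiency (steps taken) alongside completion, which a fixed set of labeled screenshots cannot do.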

Components of Mobile Agents

The survey examines four core components of mobile agents: perception, planning, action, and memory. These components work together to let agents perceive their environment, plan, and execute tasks in dynamic settings. Perception, for instance, now benefits from multimodal integration, which overcomes a limitation of earlier methods that struggled with excessive irrelevant information.

Planning, which the survey divides into dynamic and static strategies, is what lets mobile agents adapt to environments with fluctuating inputs. Actions take the form of GUI interactions, API calls, and collaboration with other agents, allowing an agent to mimic human behavior across diverse tasks. Memory mechanisms, both short-term and long-term, improve task execution by letting agents retain task-relevant information.
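The interplay of the four components can be sketched as a single decision step. This is a minimal sketch under stated assumptions, not an architecture taken from the survey: the element dictionaries, the action strings such as `tap:<id>`, and the functions `perceive`, `plan`, `agent_step`, and `finish_episode` are all hypothetical. The planner is a simple keyword match standing in for a model, and it re-plans from the current observation at every step, which corresponds loosely to a dynamic strategy.

```python
from dataclasses import dataclass, field


@dataclass
class Memory:
    short_term: list = field(default_factory=list)  # actions taken in this episode
    long_term: dict = field(default_factory=dict)   # task -> past action sequence


def perceive(raw_elements):
    """Perception: keep only interactive elements to cut irrelevant information."""
    return [e for e in raw_elements if e.get("clickable")]


def plan(task, elements, memory):
    """Planning: pick an untried element whose text mentions the task, else scroll."""
    tried = set(memory.short_term)
    for e in elements:
        action = f"tap:{e['id']}"
        if task.lower() in e["text"].lower() and action not in tried:
            return action
    return "scroll"


def agent_step(task, raw_elements, memory):
    """One perceive-plan-act cycle; the chosen action is recorded in short-term memory."""
    elements = perceive(raw_elements)
    action = plan(task, elements, memory)
    memory.short_term.append(action)
    return action


def finish_episode(task, memory):
    """Move the episode's actions into long-term memory and reset short-term memory."""
    memory.long_term[task] = list(memory.short_term)
    memory.short_term.clear()
```

In a real agent the returned action string would be dispatched to a GUI driver or an API call; that part is omitted because it depends on the target platform.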

Implications and Future Directions

The surveyed technologies carry several implications for the future of mobile agents. Stronger security and privacy mechanisms are critical, given the risks of operating in open environments. Improving agents' adaptability to dynamic settings and enabling multi-agent collaboration are further key areas for continued research.

Future work should explore strategies that make agent behavior more reliable in rapidly changing environments and apply privacy-preserving techniques to protect sensitive data. Advances in multi-agent frameworks could also enable more efficient task coordination and execution, making mobile agents more practical to deploy.

Conclusion

The survey makes a substantial contribution to the understanding of multimodal mobile agents. Its discussion of benchmarks, core components, and methodologies clarifies the current technological landscape and sets the stage for future work. As mobile agent technologies continue to evolve, they are likely to influence both practical applications and theoretical development in artificial intelligence research.