An Evaluation of Lingma SWE-GPT for Automated Software Improvement

The paper presents Lingma SWE-GPT, a series of open-source LLMs targeted at improving the efficiency and effectiveness of automated software improvement tasks. The presented models, Lingma SWE-GPT 72B and Lingma SWE-GPT 7B, represent a notable effort to match the performance of existing closed-source models like GPT-4o and Claude 3.5 Sonnet, while maintaining accessibility and alleviating privacy concerns associated with closed systems.

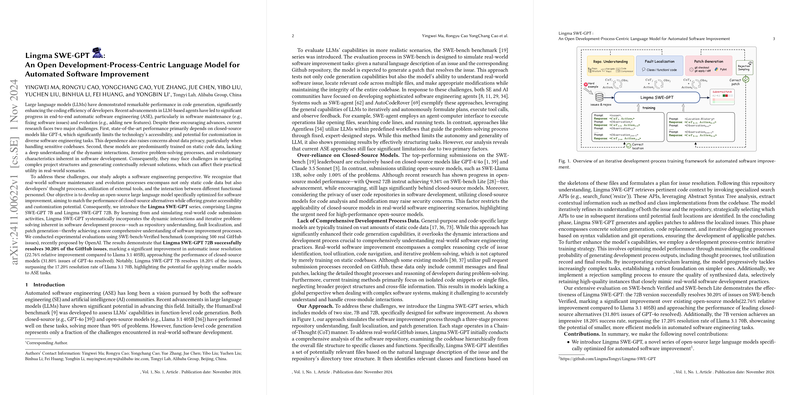

Lingma SWE-GPT moves beyond reliance on static code data, a key limitation of existing models. Instead, it adopts a workflow that mimics the real-world software engineering process, following a three-stage pipeline: repository understanding, fault localization, and patch generation. This lets the model adapt to the dynamic interactions and iterative processes inherent in software development. Such an approach could increase the practical utility of LLMs in development scenarios where understanding complex project structures and generating context-sensitive solutions is critical.
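To make the three stages concrete, here is a minimal toy sketch of how such a pipeline could be wired together. It is not the paper's implementation: every function name (`understand_repository`, `localize_fault`, `generate_patch`), the keyword-overlap heuristic, and the pluggable `propose_fix` callback are assumptions introduced purely for illustration, standing in for steps that the actual model performs with an LLM and tool calls.

```python
import re
from dataclasses import dataclass
from typing import Callable


@dataclass
class Location:
    path: str
    line_no: int  # 1-based line number within the file
    text: str


def tokenize(text: str) -> set[str]:
    """Lowercase word tokens of length >= 3 (hypothetical toy heuristic)."""
    return set(re.findall(r"[a-z_]{3,}", text.lower()))


def understand_repository(files: dict[str, str], issue: str, top_k: int = 1) -> list[str]:
    """Stage 1 (toy): rank files by how many issue keywords they share."""
    keywords = tokenize(issue)
    scores = {path: len(keywords & tokenize(src)) for path, src in files.items()}
    ranked = sorted(scores, key=lambda p: (-scores[p], p))
    return [p for p in ranked[:top_k] if scores[p] > 0]


def localize_fault(files: dict[str, str], candidates: list[str], issue: str) -> list[Location]:
    """Stage 2 (toy): flag lines in candidate files that mention issue keywords."""
    keywords = tokenize(issue)
    hits = []
    for path in candidates:
        for i, line in enumerate(files[path].splitlines(), start=1):
            if keywords & tokenize(line):
                hits.append(Location(path, i, line))
    return hits


def generate_patch(
    locations: list[Location], propose_fix: Callable[[Location], str]
) -> dict[tuple[str, int], str]:
    """Stage 3 (toy): ask a pluggable fixer for a replacement line per location."""
    return {(loc.path, loc.line_no): propose_fix(loc) for loc in locations}


def resolve_issue(files, issue, propose_fix, top_k=1):
    """Run the three stages in order and return the proposed line replacements."""
    candidates = understand_repository(files, issue, top_k)
    locations = localize_fault(files, candidates, issue)
    return generate_patch(locations, propose_fix)


# Usage: a two-file "repository" with a bug in calc.py.
files = {
    "calc.py": "def divide(a, b):\n    return a * b\n",
    "util.py": "def greet():\n    return 'hi'\n",
}
issue = "divide function: return value is wrong"
patch = resolve_issue(files, issue, lambda loc: loc.text.replace("*", "/"))
```

The point of the sketch is the staging: each step narrows the search space for the next, so the patch generator only sees the few locations that survived the first two stages.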

The paper evaluates Lingma SWE-GPT on the SWE-bench Verified and SWE-bench Lite benchmarks, comparing it against existing open-source and closed-source models. On SWE-bench Verified, Lingma SWE-GPT 72B resolves 30.20% of GitHub issues, a 22.76% relative improvement in automatic issue resolution over Llama 3.1 405B, and comes close to the performance of GPT-4o. This result suggests strong potential for open-source models in practical automated software tasks and offers a more accessible alternative to the currently dominant closed-source models.

Additionally, the paper explores how smaller-scale models, such as Lingma SWE-GPT 7B, can produce competitive results. With a resolution rate of 18.20% on SWE-bench Verified, the 7B model surpasses the 17.20% resolution rate of the much larger Llama 3.1 70B. This highlights the efficiency of smaller models, which may appeal in settings with constrained computational resources.
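Because the results above mix absolute resolution rates with a relative improvement, it can help to work the arithmetic explicitly. The short sketch below uses only the percentages quoted in this summary; the helper names are illustrative, and the Llama 3.1 405B rate it prints is back-calculated from the quoted 22.76% figure rather than stated here.

```python
def relative_improvement(new: float, baseline: float) -> float:
    """Relative improvement of `new` over `baseline`, in percent."""
    return (new - baseline) / baseline * 100.0


def implied_baseline(new: float, rel_pct: float) -> float:
    """Baseline rate implied by a new rate and a relative improvement in percent."""
    return new / (1.0 + rel_pct / 100.0)


# 72B model: 30.20% resolved, quoted as 22.76% better (relative) than Llama 3.1 405B.
llama_405b_implied = implied_baseline(30.20, 22.76)

# 7B model: 18.20% resolved versus 17.20% for Llama 3.1 70B.
gain_7b_absolute = 18.20 - 17.20
gain_7b_relative = relative_improvement(18.20, 17.20)

print(f"Implied Llama 3.1 405B rate: {llama_405b_implied:.2f}%")
print(f"7B vs Llama 3.1 70B: +{gain_7b_absolute:.2f} points, +{gain_7b_relative:.2f}% relative")
```

Reading the numbers this way makes clear that the 72B model's 22.76% relative gain corresponds to roughly 5.6 percentage points, while the 7B model's lead over Llama 3.1 70B is a single percentage point.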

A central contribution of the paper is its development-process-centric training strategy, which is key to the model's performance. The approach draws on the real-world dynamics of software processes, training the models on synthesized, curated development data that covers reasoning patterns, tool interactions, and practical problem resolutions. Combined with a curriculum training strategy, this improves Lingma SWE-GPT's ability to handle increasingly complex software tasks reliably.

The implications of this research, both practical and theoretical, invite future exploration in several directions. Practically, Lingma SWE-GPT establishes a framework that democratizes access to high-performing automation tools for software improvement. Theoretically, the results underline the importance of a dynamic, process-oriented training paradigm for improving the contextual understanding and execution abilities of LLMs in intricate, real-world applications. The authors suggest that future work could explore more sophisticated tool usage, reasoning, and verification capabilities, which are crucial for extending AI-assisted software engineering to more domains and broader stages of the software lifecycle.

In summary, the paper outlines a path toward accessible and efficient models for software engineering tasks, challenging a status quo dominated by closed-source models. The research lays a foundation for future work on improving LLMs' comprehension and reasoning capabilities, with implications for the automation and quality of software engineering.