Analysis of "LoRA vs Full Fine-tuning: An Illusion of Equivalence"

The paper "LoRA vs Full Fine-tuning: An Illusion of Equivalence" compares two strategies for adapting pre-trained large language models (LLMs) to specific tasks: Low-Rank Adaptation (LoRA) and full fine-tuning. Parameter-efficient fine-tuning (PEFT) methods such as LoRA offer a computationally cheaper, resource-friendly alternative to updating every weight. This paper asks whether the two approaches are really interchangeable, and it examines the differences that surface when the resulting models are compared in detail.

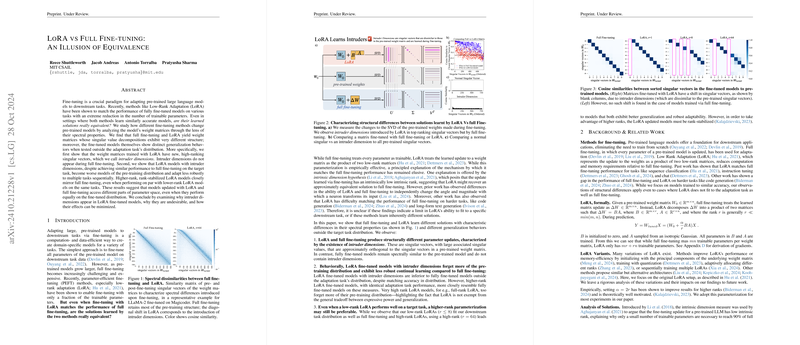

The authors systematically investigate a specific question. When LoRA and full fine-tuning reach comparable task accuracy, do the resulting models actually end up in similar states? Their analysis shows that they do not: the two methods produce pronounced differences in weight structure and in generalization, even though accuracy on the fine-tuning task is equivalent. The main tool is a spectral analysis of the weight matrices after fine-tuning. Using Singular Value Decomposition (SVD), the authors show that LoRA and full fine-tuning produce markedly different weight structures, and that LoRA models contain what they call "intruder dimensions." An intruder dimension is a high-ranking singular vector of the fine-tuned weight matrix, meaning one with a large singular value, that has low cosine similarity to every singular vector of the pre-trained weight matrix. In other words, it is a direction that is largely absent from the pre-trained structure.
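The intruder-dimension criterion described above can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the authors' code. It compares the top-k left singular vectors of the fine-tuned matrix against all left singular vectors of the pre-trained matrix. The function name `count_intruder_dimensions` and the default threshold `eps=0.5` are hypothetical choices made for illustration.

```python
import numpy as np


def count_intruder_dimensions(w_pre: np.ndarray, w_ft: np.ndarray,
                              k: int = 10, eps: float = 0.5) -> int:
    """Count intruder dimensions among the top-k singular vectors of w_ft.

    Hypothetical helper (not the paper's code). A left singular vector of the
    fine-tuned matrix counts as an intruder when its largest absolute cosine
    similarity with any left singular vector of the pre-trained matrix is
    below eps (eps=0.5 is an illustrative default, not the paper's value).
    """
    u_pre, _, _ = np.linalg.svd(w_pre, full_matrices=False)
    u_ft, _, _ = np.linalg.svd(w_ft, full_matrices=False)  # columns sorted by singular value
    # Singular vectors are unit-norm, so dot products are cosine similarities;
    # abs() removes the arbitrary sign of each singular vector.
    sims = np.abs(u_ft[:, :k].T @ u_pre)  # shape (k, number of pre-trained vectors)
    best_match = sims.max(axis=1)         # closest pre-trained direction for each vector
    return int((best_match < eps).sum())


if __name__ == "__main__":
    n = 64
    w_pre = np.diag(np.linspace(10.0, 1.0, n))  # toy "pre-trained" matrix
    direction = np.ones(n) / np.sqrt(n)         # not aligned with any singular vector of w_pre
    w_ft = w_pre + 1000.0 * np.outer(direction, direction)  # large rank-1 update
    print(count_intruder_dimensions(w_pre, w_pre, k=10))  # 0: identical matrices
    print(count_intruder_dimensions(w_pre, w_ft, k=1))    # 1: the new top direction is an intruder
```

The test is strict because it compares against all pre-trained singular vectors rather than only the top ones. A fine-tuned vector is flagged only when no pre-trained direction resembles it.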

Key Insights

- Distinct Parameter Spaces: Despite similar accuracy, the two methods converge to different regions of parameter space. The authors find significant structural differences between LoRA and fully fine-tuned models, attributable to the intruder dimensions present in LoRA models.

- Robustness and Adaptability: LoRA models, particularly at lower ranks, are less robust in sequential multi-task learning setups and forget more of what they learned earlier. This indicates weaker retention of previously acquired knowledge than in their fully fine-tuned counterparts.

- Pre-training Distribution Fidelity: Across LoRA ranks, the analysis shows a U-shaped trend in how well the models retain fidelity to the pre-training distribution while adapting to a task. Notably, LoRA models at rank 64 showed less forgetting of the pre-training distribution than full fine-tuning.

- Effectiveness of Rank Conditioning: An intriguing finding concerns the LoRA scaling hyperparameter $\alpha$. Scaling it with the rank $r$ means setting $\alpha$ proportional to $r$, for example $\alpha = 2r$, instead of holding it fixed. This scaling increased the effective rank of the learned updates and reduced intruder dimensions, which made LoRA more stable at higher ranks.
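The scaling point in the last item is easier to see with the LoRA parameterization written out. As commonly implemented, LoRA applies the update ΔW = (α/r)·BA. With a fixed α, the multiplier therefore shrinks as the rank r grows, whereas tying α to r keeps it constant. The sketch below compares that multiplier under a hypothetical fixed α = 8 and under α = 2r, which is used here only as an illustrative proportional choice. It also includes one common definition of effective rank: the exponential of the entropy of the normalized singular values (Roy & Vetterli). The paper's exact effective-rank definition may differ. The helper names `lora_scale`, `lora_delta`, and `effective_rank` are illustrative and do not come from the paper.

```python
import numpy as np


def lora_scale(alpha: float, r: int) -> float:
    """Multiplier applied to the low-rank product in standard LoRA: alpha / r."""
    return alpha / r


def lora_delta(a: np.ndarray, b: np.ndarray, alpha: float) -> np.ndarray:
    """LoRA update delta_W = (alpha / r) * B @ A, with B: (d_out, r) and A: (r, d_in)."""
    r = a.shape[0]
    return lora_scale(alpha, r) * (b @ a)


def effective_rank(w: np.ndarray, tol: float = 1e-12) -> float:
    """Entropy-based effective rank: exp of the Shannon entropy of normalized singular values.

    This is one common definition, assumed here for illustration.
    """
    s = np.linalg.svd(w, compute_uv=False)
    if s.sum() == 0:
        return 0.0
    p = s / s.sum()
    p = p[p > tol]  # drop numerically zero singular values before taking logs
    return float(np.exp(-(p * np.log(p)).sum()))


if __name__ == "__main__":
    for r in (8, 16, 64, 256):
        fixed = lora_scale(8.0, r)       # hypothetical fixed alpha = 8: multiplier shrinks with r
        scaled = lora_scale(2.0 * r, r)  # alpha = 2r: multiplier stays at 2
        print(f"r={r:4d}  fixed alpha: {fixed:.4f}  alpha=2r: {scaled:.1f}")
```

Effective rank as defined here does not change when a matrix is rescaled, so the α/r factor does not alter it directly. Any effect of α on effective rank comes through training dynamics, which this static sketch does not model.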

Practical and Theoretical Implications

The findings support the view that full fine-tuning and LoRA shape the model differently during learning, specifically in the structure and stability of the resulting parameter updates. In practice, this can help practitioners choose the fine-tuning strategy best suited to their resource constraints and to the properties they need, such as transferability across tasks and robustness.

Theoretically, intruder dimensions challenge a simplistic reading of the intrinsic dimension hypothesis. On that reading, task adaptation has a low intrinsic dimension, so a low-rank LoRA update should be able to reproduce what full fine-tuning learns. However, the two methods generalize differently even at matched accuracy, which suggests that these adaptation methods need a more refined understanding.

Speculations on Future Developments

As research increasingly focuses on generalization and robust AI applications, these findings may motivate more nuanced PEFT methods in which rank and parameter adaptation are tuned dynamically to task complexity. Future research could explore hybrid approaches that mitigate the intruder-dimension effect while preserving LoRA's computational efficiency. LoRA variants, including those with more complex structural adaptations, and their spectral effects also remain a ripe area for investigation.

Overall, LoRA is an attractive alternative to full fine-tuning for task-specific adaptation. However, its subtle effects on model structure and generalization show why it matters to understand how the two methods differ mechanistically. These results encourage further exploration of adaptive, flexible fine-tuning strategies.