Tailored-LLaMA: Optimizing Few-Shot Learning in Pruned LLaMA Models with Task-Specific Prompts

The paper introduces Tailored-LLaMA, an approach to fine-tuning pruned LLMs for specific tasks. It addresses a practical challenge in deploying LLMs: adapting these large models to task-specific applications while keeping computational demands low. Tailored-LLaMA combines three components, structural pruning, prompt engineering, and the LoRA method, to fine-tune pruned LLaMA variants effectively.

Structural Pruning and Efficiency

The process begins with structural pruning, which aims to reduce model size without a disproportionate loss in performance. The authors use a method analogous to DepGraph: they identify inter-dependencies among parameters in LLaMA's architecture and capture them in a dependency graph. Pruning then operates on groups of interdependent parameters, ranked by a quantitative importance score, rather than on individual weights. This first phase compresses the model from 7B parameters to 5B and 4B parameters, achieving various compression ratios without significant performance losses. The results indicate substantial accuracy retention, with a mean recovery rate of 95.68% for a 20% compression ratio.
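The group-wise idea can be illustrated with a minimal sketch. It assumes each group of coupled parameters is already known (in practice this comes from the dependency graph) and uses the sum of squared weights as a stand-in importance score; the paper's actual criterion may differ. The names `group_importance`, `prune_groups`, and the `head_*` groups are hypothetical.

```python
from typing import Dict, List


def group_importance(weights: List[float]) -> float:
    """Assumed importance score: sum of squared weights in one coupled group."""
    return sum(w * w for w in weights)


def prune_groups(groups: Dict[str, List[float]], ratio: float) -> Dict[str, List[float]]:
    """Remove whole groups, least important first, without exceeding
    `ratio` of the total parameter count."""
    total = sum(len(w) for w in groups.values())
    budget = round(ratio * total)  # maximum number of parameters to remove

    # Visit groups from least to most important.
    ranked = sorted(groups, key=lambda name: group_importance(groups[name]))

    removed = set()
    removed_params = 0
    for name in ranked:
        size = len(groups[name])
        if removed_params + size <= budget:
            removed.add(name)
            removed_params += size

    # Keep the surviving groups in their original order.
    return {name: w for name, w in groups.items() if name not in removed}


# Toy example: five hypothetical groups of two parameters each.
toy_groups = {
    "head_0": [0.9, -0.8],
    "head_1": [0.01, 0.02],
    "head_2": [0.5, 0.4],
    "head_3": [0.05, -0.03],
    "head_4": [1.0, 1.1],
}
kept = prune_groups(toy_groups, ratio=0.2)
print(sorted(kept))  # head_1, the lowest-scoring group, is dropped
```

Removing entire groups rather than single weights is what keeps the pruned network structurally valid: every parameter that depends on a removed unit goes with it.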

Task-Specific Prompting and Fine-Tuning

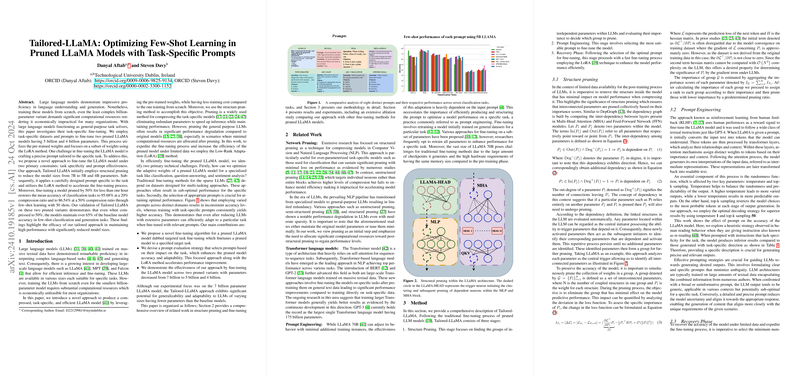

After pruning, the paper emphasizes the critical role of task-specific prompts. The researchers developed a prompt evaluation strategy to identify the prompts most likely to improve the pruned models' performance on specific tasks. Few-shot performance showed mean classification accuracy restored to 95.68% at a 20% compression ratio and 86.54% at a 50% compression ratio, supporting the efficacy of tailored prompts.
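One simple way to picture a prompt evaluation strategy is to score each candidate prompt by accuracy on a small labeled set and keep the best one. The sketch below makes that assumption; it is not the paper's exact procedure. The `toy_model` callable stands in for a pruned LLaMA model, and all function names, templates, and examples are hypothetical.

```python
from typing import Callable, List, Tuple

Example = Tuple[str, str]  # (input text, gold label)


def prompt_accuracy(model: Callable[[str], str], template: str,
                    dev_set: List[Example]) -> float:
    """Fraction of dev examples the model labels correctly under one template."""
    correct = sum(
        model(template.format(text=text)).strip() == label
        for text, label in dev_set
    )
    return correct / len(dev_set)


def select_prompt(model: Callable[[str], str], templates: List[str],
                  dev_set: List[Example]) -> Tuple[str, float]:
    """Return the template with the highest dev-set accuracy and its score."""
    scored = [(t, prompt_accuracy(model, t, dev_set)) for t in templates]
    return max(scored, key=lambda pair: pair[1])


def toy_model(prompt: str) -> str:
    """Stand-in model: only classifies sensibly when given a task cue."""
    if "Sentiment:" not in prompt:
        return "negative"
    return "positive" if "great" in prompt else "negative"


dev_set = [
    ("great movie", "positive"),
    ("dull plot", "negative"),
    ("great cast", "positive"),
    ("weak ending", "negative"),
]
templates = ["{text}", "Review: {text}\nSentiment:"]

best_template, best_acc = select_prompt(toy_model, templates, dev_set)
print(repr(best_template), best_acc)
```

In a real setting the labeled set used for selection would be held out from the final evaluation data, so that the reported accuracy is not inflated by the selection step.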

Implementation of LoRA Method

The final phase applies the Low-Rank Adaptation (LoRA) method, which speeds up fine-tuning while requiring only limited data. LoRA keeps the pretrained weights frozen and trains only a pair of small low-rank matrices per adapted layer, which sharply reduces the number of trainable parameters. This approach yields a notable decrease in computational overhead, allowing fine-tuning on a single GPU in under one hour, a potentially significant improvement for practical applications of LLMs in resource-constrained environments.
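The low-rank update can be sketched in a few lines of plain Python. The forward pass below follows the standard LoRA formulation, h = Wx + (alpha / r) * B(Ax), with W frozen and only A and B trainable. The rank and scaling values used here are illustrative assumptions, not the paper's settings.

```python
from typing import List

Matrix = List[List[float]]


def matvec(m: Matrix, v: List[float]) -> List[float]:
    """Plain matrix-vector product."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def lora_forward(W: Matrix, A: Matrix, B: Matrix, x: List[float],
                 alpha: float, r: int) -> List[float]:
    """h = W x + (alpha / r) * B (A x); W is frozen, A and B are trained."""
    base = matvec(W, x)              # frozen pretrained path
    delta = matvec(B, matvec(A, x))  # low-rank update path
    scale = alpha / r
    return [b + scale * d for b, d in zip(base, delta)]


def lora_trainable_params(d_in: int, d_out: int, r: int) -> int:
    """A is r x d_in and B is d_out x r, so r * (d_in + d_out) in total."""
    return r * (d_in + d_out)


# B starts at zero, so the adapted layer initially matches the frozen layer.
W = [[1.0, 0.0], [0.0, 1.0]]
A = [[1.0, 1.0]]        # r = 1, d_in = 2
B_init = [[0.0], [0.0]]  # d_out = 2, r = 1
x = [1.0, 2.0]
print(lora_forward(W, A, B_init, x, alpha=2.0, r=1))

# Parameter count for one 4096 x 4096 projection (the hidden size of
# LLaMA-7B) with an assumed rank of 8.
full = 4096 * 4096
lora = lora_trainable_params(4096, 4096, 8)
print(full, lora, lora / full)
```

With these assumed numbers, the trainable parameters for that projection drop from about 16.8 million to 65,536, which is under 0.4% of the original count.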

Implications and Future Directions

The paper's results hold meaningful implications for deploying LLMs and similar architectures on constrained computational infrastructure. By demonstrating that high performance can be maintained at reduced model sizes, this work opens avenues for exploring further efficiencies in other large-scale models across different application domains. The adaptability of Tailored-LLaMA suggests potential for scalable approaches in diverse AI and NLP tasks beyond those explored.

The paper sets a solid foundation for additional research into fine-tuning and pruning strategies, particularly concerning the impact of task-specific prompting and low-rank parameter adaptations. Future investigations might delve into automating the selection of optimal prompts or further optimizing the structural pruning methodologies to enable widespread, efficient utilization of LLMs across varying scales and disciplines.

In conclusion, this paper makes a significant contribution to the field by bridging the gap between large-scale model capabilities and practical deployment needs, proposing methods that improve the efficiency of AI systems while maintaining their effectiveness on the targeted tasks.