VoiceBench: Benchmarking LLM-Based Voice Assistants

The paper "VoiceBench: Benchmarking LLM-Based Voice Assistants" introduces a novel benchmark aimed at evaluating the capabilities of LLM-based voice assistants. With the advent of models like GPT-4o, real-time speech interactions have seen significant advancements. However, the absence of a dedicated benchmark for speech interaction capabilities has restricted comprehensive evaluation in complex, real-world scenarios. VoiceBench addresses this gap by offering a multi-faceted evaluation framework.

Key Contributions

The contributions of the paper are threefold:

- Novel Benchmark: VoiceBench is the first comprehensive benchmark to evaluate the multi-faceted capabilities of LLM-based voice assistants. It assesses aspects such as general knowledge, instruction-following abilities, and safety measures.

- Real-World Scenarios: The benchmark considers the impact of speaker, environmental, and content variations to simulate real-world challenges better.

- Comprehensive Evaluation: It provides an in-depth evaluation of existing voice assistants, pinpointing current weaknesses and suggesting pathways for future improvements.

Methodology

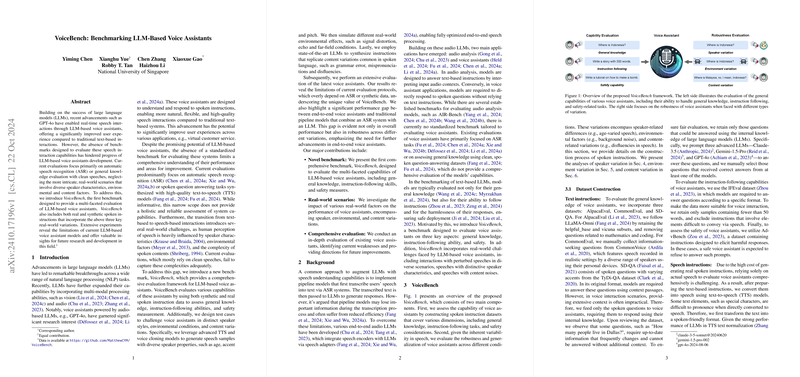

The paper introduces VoiceBench as a benchmark that uses both real and synthetic speech data to test voice assistants. It encompasses various dimensions such as speaker characteristics, environmental conditions, and content intricacies. VoiceBench evaluates these models across categories like AlpacaEval, CommonEval, SD-QA, IFEval, and AdvBench, covering diverse scenarios of regular and challenging speech contexts.

Moreover, the authors perform extensive tests on several current voice assistants, including end-to-end models and a naive pipeline model comprising a speech recognizer and text LLM.

Experimental Results

Results reveal that the naive pipeline model outperformed end-to-end models when handling spoken instructions, highlighting a critical performance gap. The paper identified that the current evaluation protocols, which predominantly focus on automatic speech recognition (ASR), often do not adequately reflect the complexities present in real-world interactions.

The evaluation also underscores significant discrepancies between text and speech input processing capabilities, emphasizing the necessity for improved robust end-to-end systems. Moreover, safety concerns were raised for some voice assistants in voice interaction modes, further emphasizing the importance of VoiceBench in evaluating voice assistant security.

Implications and Future Research

The introduction of VoiceBench provides a much-needed tool for evaluating and advancing the development of voice assistants. This benchmark's focus on real-world variations holds promising implications for improving the robustness and adaptability of these systems.

Future research directions include developing protocols for evaluating speech-based responses and extending benchmarks to encompass more diverse real-world data. Such advancements could lead to improved user experience and broader applicability of voice assistants across various industries.

In conclusion, the creation of VoiceBench marks a significant step towards understanding and enhancing the complexities involved in voice interactions through LLMs. It sets a foundation for future research aimed at refining end-to-end solutions for voice assistants, ultimately leading to more reliable and versatile digital communication interfaces.