Steering Knowledge Selection Behaviors in LLMs via SAE-Based Representation Engineering

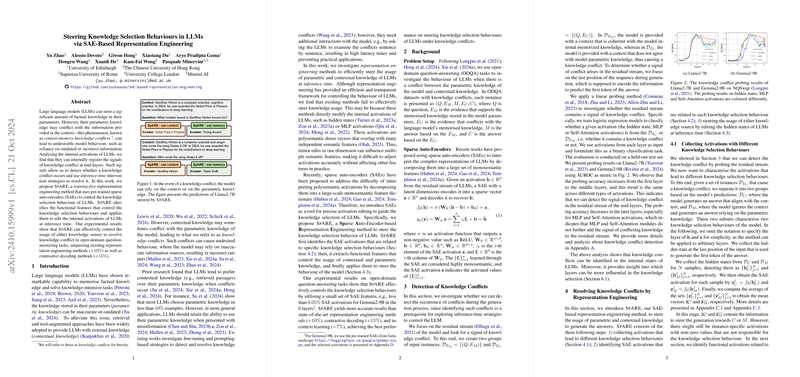

The paper "Steering Knowledge Selection Behaviors in LLMs via SAE-Based Representation Engineering" addresses context-memory knowledge conflicts in LLMs. These conflicts arise when the knowledge stored in the model's parameters contradicts the information provided in the context, which can lead the model to rely on outdated or incorrect information.

Background and Motivation

LLMs can memorize a large number of facts and perform well on knowledge-intensive tasks. However, relying on parametric knowledge becomes problematic when that knowledge is inconsistent with up-to-date information in the context. A common remedy is retrieval augmentation, which supplies the model with external contextual information. Yet when the context and the parametric knowledge disagree, the model may behave undesirably and rely on the wrong source.

Existing methods try to resolve knowledge conflicts through means such as fine-tuning or prompting, but they often require additional model interactions, which makes them inefficient. This paper proposes SpARE, a training-free approach that uses sparse auto-encoders (SAEs) to control model behavior efficiently at inference time.
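To make the role of an SAE concrete: it maps a residual-stream activation to a much wider vector of non-negative, mostly-zero feature activations, and a decoder maps those features back to the original space as a weighted sum of decoder directions. The sketch below assumes a standard ReLU SAE with random weights purely for illustration; the class name `SparseAutoencoder` and all sizes are hypothetical, and the pre-trained SAEs SpARE relies on may use different activation functions and parameterizations.

```python
import numpy as np


class SparseAutoencoder:
    """Minimal ReLU sparse auto-encoder over a d_model-dimensional residual stream.

    Hypothetical illustration: weights are random here, whereas a real SAE is
    pre-trained to reconstruct activations under a sparsity penalty.
    """

    def __init__(self, d_model: int, d_sae: int, seed: int = 0):
        rng = np.random.default_rng(seed)
        self.W_enc = rng.normal(scale=0.1, size=(d_model, d_sae))
        self.b_enc = np.zeros(d_sae)
        self.W_dec = rng.normal(scale=0.1, size=(d_sae, d_model))
        self.b_dec = np.zeros(d_model)

    def encode(self, x: np.ndarray) -> np.ndarray:
        # Non-negative feature activations (sparse once the SAE is trained)
        return np.maximum(0.0, (x - self.b_dec) @ self.W_enc + self.b_enc)

    def decode(self, f: np.ndarray) -> np.ndarray:
        # Reconstruction = weighted sum of decoder directions plus a bias
        return f @ self.W_dec + self.b_dec


sae = SparseAutoencoder(d_model=16, d_sae=64)
h = np.random.default_rng(1).normal(size=(4, 16))  # four stand-in activations
z = sae.encode(h)
h_hat = sae.decode(z)
print(z.shape, h_hat.shape)  # (4, 64) (4, 16)
```

Because each feature writes along its own decoder direction, editing individual feature activations gives a targeted handle on the residual stream, which is the property representation engineering with SAEs exploits.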

Methodology

SpARE is a representation engineering method that uses SAEs to steer knowledge selection. The approach is training-free and involves three primary steps:

- Detection of Knowledge Conflicts: The method identifies knowledge conflicts by analyzing the model's internal activations. Conflict signals are recognizable in the mid-layer residual stream, which suggests these layers as candidates for intervention.

- Functional SAE Activation Identification: SpARE identifies the SAE activations associated with each knowledge selection behavior by computing the mutual information between feature activations and the behavior the model exhibits. This allows it to extract the specific features that control knowledge selection.

- Activation Editing for Behavior Steering: The method edits the model's internal activations to steer the choice between contextual and parametric knowledge. Edits are restricted to the selected features to minimize unintended effects on unrelated ones.
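The second and third steps can be illustrated with a small sketch on synthetic data. It assumes we already have SAE feature activations `Z` for a batch of examples at a mid layer, a label `y` recording whether the model followed the context (`1`) or its memory (`0`), and the SAE decoder matrix `W_dec`; all three are random stand-ins here. The helper names (`mutual_information`, `select_features`, `steer_to_context`), the binary "feature fires" MI estimator, and the editing rule (remove the memory features' contribution, top up the context features to their typical level) are simplifying assumptions for illustration, not the paper's exact procedure.

```python
import numpy as np

rng = np.random.default_rng(0)
n, d_model, d_sae = 400, 16, 32

# Hypothetical labels: y=1 if the model answered from the context, y=0 if from memory.
y = rng.integers(0, 2, size=n)
# Synthetic non-negative SAE activations: feature 3 tracks context use,
# feature 7 tracks memory use, the remaining features are noise.
Z = np.maximum(0.0, rng.normal(-0.5, 1.0, size=(n, d_sae)))
Z[:, 3] += 2.0 * y
Z[:, 7] += 2.0 * (1 - y)
W_dec = rng.normal(size=(d_sae, d_model))  # stand-in SAE decoder directions


def mutual_information(active: np.ndarray, labels: np.ndarray) -> float:
    """Plug-in MI estimate (in nats) between two binary arrays."""
    mi = 0.0
    for a in (0, 1):
        for b in (0, 1):
            p_ab = np.mean((active == a) & (labels == b))
            if p_ab > 0:
                p_a, p_b = np.mean(active == a), np.mean(labels == b)
                mi += p_ab * np.log(p_ab / (p_a * p_b))
    return float(mi)


def select_features(Z, y, k):
    """Rank features by MI between 'feature fires' and the behavior label,
    keep the top k, and split them by the behavior they co-occur with."""
    mi = np.array([mutual_information((Z[:, j] > 0).astype(int), y)
                   for j in range(Z.shape[1])])
    top = [int(j) for j in np.argsort(mi)[::-1][:k]]
    ctx = [j for j in top if Z[y == 1, j].mean() > Z[y == 0, j].mean()]
    mem = [j for j in top if j not in ctx]
    return ctx, mem


def steer_to_context(h, z, W_dec, ctx_feats, mem_feats, ctx_target):
    """Edit residual-stream vector h (with SAE features z) toward context use:
    subtract what the memory features currently write, then top up the
    context features to ctx_target along their decoder directions."""
    h = h.copy()
    h -= z[mem_feats] @ W_dec[mem_feats]
    h += np.maximum(0.0, ctx_target - z[ctx_feats]) @ W_dec[ctx_feats]
    return h


ctx_feats, mem_feats = select_features(Z, y, k=2)
ctx_target = Z[y == 1][:, ctx_feats].mean(axis=0)  # typical level when following context
i = int(np.flatnonzero(y == 0)[0])                 # a memory-following example
z = Z[i]
h = z @ W_dec  # assume, for illustration, that the SAE reconstructs h exactly
h_steered = steer_to_context(h, z, W_dec, ctx_feats, mem_feats, ctx_target)
print(ctx_feats, mem_feats)  # [3] [7]
```

Editing only the selected features' decoder directions is what keeps the intervention targeted: the rest of the activation vector is left untouched. Steering toward memory instead would simply swap the roles of the two feature sets.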

Results

The experimental evaluation focused on open-domain question-answering tasks that contain knowledge conflicts. SpARE controlled knowledge selection more effectively than existing representation engineering methods and contrastive decoding techniques, with accuracy gains of up to 15% over contrastive decoding methods and 10% over alternative representation engineering approaches.

Implications and Future Directions

The paper's findings underscore the potential of SAEs for more fine-grained model control without additional training. This capability is particularly relevant when rapid adaptation to new information is critical, as in real-time applications. The results suggest promising avenues for further research into scalable and efficient techniques for managing complex LLM behaviors.

Future work might explore the applicability of SpARE to a broader range of tasks and model architectures beyond open-domain question answering. Refining the SAE features to improve their interpretability and make control more precise could also broaden the approach's utility across AI applications.

In summary, this research contributes a novel, efficient method for dynamically managing knowledge conflicts in LLMs, providing a valuable tool for enhancing the adaptability and reliability of AI systems.