Insights on Reverse Question Answering: An Analysis

The paper "Reverse Question Answering: Can an LLM Write a Question so Hard (or Bad) that it Can't Answer?" studies Reverse Question Answering (RQA): given an answer, can an LLM generate a valid question for it? The paper contrasts RQA with traditional Question Answering (QA) by comparing reasoning strategies and consistency across several answer types, which sheds light on the abductive and deductive reasoning capabilities of LLMs.

Key Contributions

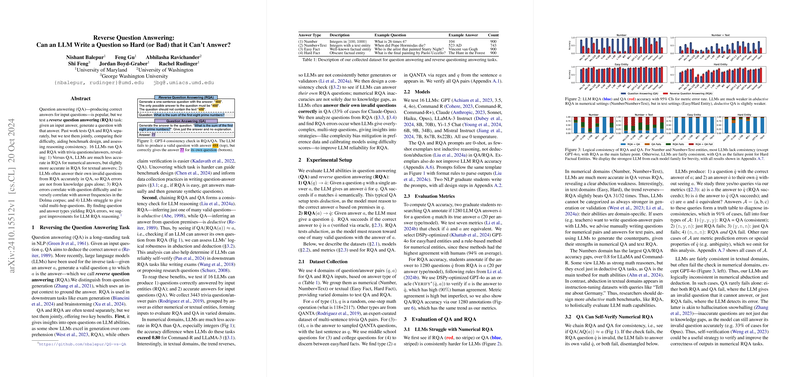

The authors evaluate 16 LLMs across four answer domains: two numerical (Numbers and Number+Text) and two textual (Easy Facts and Hard Facts). A dataset of 3443 trivia question-answer pairs is used to test both the QA and RQA tasks under a shared evaluation setup. A central aim of the paper is to uncover disparities in LLM performance between the two tasks and to identify the underlying issues that affect a model's ability to reason and self-verify.

Findings

- Performance Disparities:

  - Numerical Answers: LLMs are significantly less accurate in RQA than in QA when the answers are numerical, with accuracy gaps exceeding 0.80 for some models. This points to a substantial limitation in abductive reasoning over numerical data.

  - Textual Answers: Conversely, models perform slightly better in RQA than in QA for textual answers, suggesting a domain-specific advantage in generating questions from known entities.

- Logical Consistency:

  - When the RQA and QA tasks are chained, so that a model answers the question it generated itself, models frequently answer their own invalid RQA-generated questions correctly in QA. This indicates that many RQA failures are not purely due to knowledge deficiencies and could instead stem from improper question formulation.

- Error Correlation:

  - The paper finds correlations between RQA errors and factors such as question difficulty and answer frequency. In particular, RQA mistakes in the Number+Text domain arise more often with rare entities, while overly complex multi-hop questions often lead to errors in the Numbers domain.
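The two measurements behind these findings, the per-domain gap between QA and RQA accuracy and the chained RQA-then-QA consistency check, can be sketched roughly as below. This is a minimal illustration under stated assumptions, not the paper's evaluation code: the `Record` type, the function names, and the `generate_question`, `answer_question`, and `same_answer` callables are all hypothetical, and how validity and correctness are actually judged is left abstract.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Iterable


@dataclass
class Record:
    """One evaluated item (hypothetical schema, not from the paper)."""
    domain: str       # e.g. "Numbers", "Number+Text", "Easy Facts", "Hard Facts"
    qa_correct: bool  # model answered the dataset question correctly
    rqa_valid: bool   # model's generated question was judged valid for the answer


def accuracy_gap(records: Iterable[Record]) -> Dict[str, float]:
    """Return QA accuracy minus RQA accuracy for each domain.

    A large positive value means the model is much weaker at RQA than QA.
    """
    totals: Dict[str, tuple] = {}
    for r in records:
        n, qa, rqa = totals.get(r.domain, (0, 0, 0))
        totals[r.domain] = (n + 1, qa + int(r.qa_correct), rqa + int(r.rqa_valid))
    return {d: (qa - rqa) / n for d, (n, qa, rqa) in totals.items()}


def chain_is_consistent(
    answer: str,
    generate_question: Callable[[str], str],  # RQA step (assumed model wrapper)
    answer_question: Callable[[str], str],    # QA step (assumed model wrapper)
    same_answer: Callable[[str, str], bool],  # assumed answer-equivalence check
) -> bool:
    """Chain RQA then QA and check whether the round trip recovers `answer`."""
    question = generate_question(answer)
    round_trip_answer = answer_question(question)
    return same_answer(answer, round_trip_answer)
```

In this framing, a model that produces an invalid question for an answer but then answers that question correctly yields an inconsistent chain, which is the signal the paper uses to argue that RQA errors are not explained by knowledge gaps alone.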

Implications and Future Work

The implications of these findings are twofold. Theoretically, the paper challenges assumptions about LLM reasoning capabilities, highlighting weaknesses in numerical domains in particular. Practically, it recommends improving LLMs through calibrated model tuning and through training data modifications that mitigate the bias towards generating complex and inaccurate questions.

For future research, the analysis suggests creating more abductive reasoning benchmarks and improving self-verification mechanisms, so that LLMs more reliably generate valid questions whose answers can be verified. This dual focus on reasoning and consistency could make LLMs more useful in educational, brainstorming, and exam generation contexts.

In conclusion, this paper provides a critical examination of the RQA task, offering insights into the domain-specific abilities and limitations of current LLMs. By pinpointing areas of weakness, it sets the stage for future work on the logical coherence and reasoning processes of LLMs.