Modeling Future Conversation Turns to Teach LLMs to Ask Clarifying Questions

The paper "Modeling Future Conversation Turns to Teach LLMs to Ask Clarifying Questions" proposes a way to train large language models (LLMs) to ask clarifying questions. It targets a common failure: given an ambiguous request, an LLM often assumes a single interpretation and answers it directly instead of asking what the user meant, which frustrates users whose intended meaning differs.

Methodology

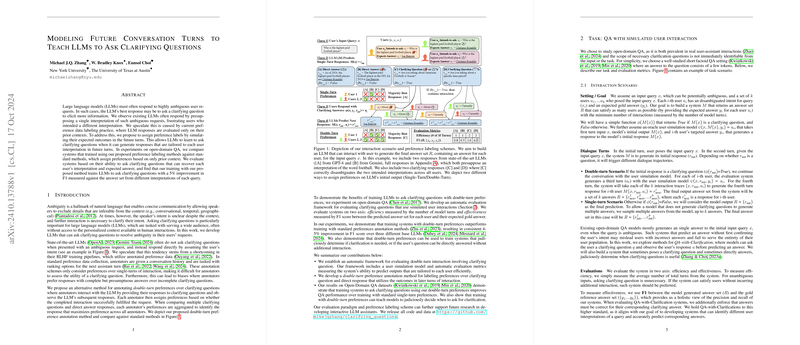

The authors train LLMs to ask clarifying questions by taking future conversation turns into account when assigning preference labels. Standard preference labeling judges a response only against the preceding context. This method instead simulates how the conversation would continue and labels each response by the expected outcome of that future interaction. This double-turn preference annotation involves:

- Simulated User Interaction: Simulated users respond to the model's clarifying questions, and the model is judged on whether it ultimately fulfills each user's request over the multi-turn exchange, rather than on a single response that presumes one interpretation.

- Preference Aggregation: Preferences are aggregated across multiple annotators, favoring interactions that lead to successful outcomes across the different ways users may interpret the request.
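To make double-turn labeling concrete, here is a minimal Python sketch under stated assumptions; it is not the authors' implementation. The names `SimulatedUser`, `Candidate`, `turn_outcome`, and `double_turn_preference` are hypothetical. The sketch assumes success is an exact string match with the user's gold answer, aggregates by a simple mean over simulated users, and uses a lookup table as a stand-in for the model's answer after the user clarifies.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Callable, Optional, Sequence, Tuple


@dataclass
class SimulatedUser:
    """Hypothetical simulated user holding one reading of an ambiguous question."""
    interpretation: str  # what this user replies if asked a clarifying question
    gold_answer: str     # the answer this user actually wants


@dataclass
class Candidate:
    """A candidate first-turn response: a direct answer or a clarifying question."""
    text: str
    asks_clarification: bool


def turn_outcome(candidate: Candidate, user: SimulatedUser,
                 answer_after_clarification: Callable[[str], Optional[str]]) -> float:
    """Return 1.0 if the exchange ends with this user's gold answer, else 0.0."""
    if candidate.asks_clarification:
        # Simulate the future turn: the user states their interpretation,
        # then the model answers with that extra context.
        final_answer = answer_after_clarification(user.interpretation)
    else:
        # A direct answer is final after a single turn.
        final_answer = candidate.text
    return 1.0 if final_answer == user.gold_answer else 0.0


def double_turn_preference(
    candidates: Sequence[Candidate],
    users: Sequence[SimulatedUser],
    answer_after_clarification: Callable[[str], Optional[str]],
) -> Tuple[Candidate, Candidate]:
    """Score each candidate by its mean outcome over all simulated users
    (assumed aggregation) and return (preferred, dispreferred)."""
    scored = [
        (mean(turn_outcome(c, u, answer_after_clarification) for u in users), c)
        for c in candidates
    ]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[0][1], scored[-1][1]


# Toy example: "Who directed A Star Is Born?" has several film versions.
users = [
    SimulatedUser("the 2018 film", "Bradley Cooper"),
    SimulatedUser("the 1976 film", "Frank Pierson"),
]
# Hypothetical stand-in for the model's answer once the user has clarified.
follow_up = {"the 2018 film": "Bradley Cooper", "the 1976 film": "Frank Pierson"}.get
candidates = [
    Candidate("Bradley Cooper", asks_clarification=False),
    Candidate("Which version of A Star Is Born do you mean?", asks_clarification=True),
]
chosen, rejected = double_turn_preference(candidates, users, follow_up)
print("preferred:", chosen.text)  # → preferred: Which version of A Star Is Born do you mean?
```

The direct answer satisfies only one of the two simulated users (mean 0.5), while the clarifying question lets both reach their answer (mean 1.0), so the clarifying question becomes the preferred response in the pair. With a single unambiguous interpretation, both candidates would tie in this sketch, since it does not model any cost for extra turns.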

Experimental Framework

The experiments use open-domain question answering (QA), a setting where ambiguous questions are common. The evaluation framework measures:

- Efficiency: Measured by the number of conversational turns required to achieve a satisfactory response.

- Effectiveness: Measured by F1 between the system's answer set and the expected gold answer for each user interpretation.
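The two metrics can be sketched as follows. This is a minimal illustration, assuming set-level F1 over lightly normalized answer strings and a simple per-conversation turn count; the paper's exact matching and normalization rules may differ. `Episode`, `answer_set_f1`, and `evaluate` are hypothetical names.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Dict, Iterable, List


def normalize(answer: str) -> str:
    """Lowercase and collapse whitespace so trivially different strings match."""
    return " ".join(answer.lower().split())


def answer_set_f1(predicted: Iterable[str], gold: Iterable[str]) -> float:
    """Set-level F1 between predicted and gold answers after normalization."""
    pred = {normalize(a) for a in predicted}
    ref = {normalize(a) for a in gold}
    overlap = len(pred & ref)
    if overlap == 0:
        return 0.0  # also covers an empty prediction or empty gold set
    precision = overlap / len(pred)
    recall = overlap / len(ref)
    return 2 * precision * recall / (precision + recall)


@dataclass
class Episode:
    """Hypothetical log of one conversation with one simulated user interpretation."""
    predicted_answers: List[str]
    gold_answers: List[str]
    num_turns: int  # turns until the final answer, as counted by the evaluator


def evaluate(episodes: List[Episode]) -> Dict[str, float]:
    """Average effectiveness (F1) and efficiency (turns) over all episodes."""
    return {
        "f1": mean(answer_set_f1(e.predicted_answers, e.gold_answers) for e in episodes),
        "avg_turns": mean(e.num_turns for e in episodes),
    }


episodes = [
    # Clarifying exchange: model question, user reply, model answer.
    Episode(["Bradley Cooper"], ["Bradley Cooper"], num_turns=3),
    # Direct answer that assumed the wrong interpretation.
    Episode(["Bradley Cooper"], ["Frank Pierson"], num_turns=1),
]
print(evaluate(episodes))
```

Here the first episode scores an F1 of 1.0 and the second 0.0, so mean F1 is 0.5 and the average turn count is 2. Reporting both numbers side by side reflects the trade-off the framework measures: a clarifying question costs turns but can recover the right answer.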

Results

Training with double-turn preferences yielded a consistent 4-5% improvement in F1 across several base LLMs, including Llama2-7b, Gemma-7b, and Llama3-8b. Beyond asking better clarifying questions, the trained models are also better at deciding when a direct answer suffices and when clarification is needed.

Implications and Future Work

Practically, this work points toward more interactive AI systems that adapt to what a user actually means. Handling ambiguity by asking rather than guessing could improve both user satisfaction and the efficiency of human-AI interactions.

On the research side, the findings motivate further work on multi-turn training and on preference annotation methods that account for how an interaction unfolds, not just for isolated responses. The authors release their code and data, which makes it easier for others to build on and extend the method.

Future work could extend the framework to longer, more complex dialogues that involve other interaction strategies and broader conversational contexts. Incorporating human annotations alongside the simulated users could also help the approach cover more nuanced interpretations.

In conclusion, the paper shows that modeling future conversation turns during preference labeling teaches LLMs to ask clarifying questions when they are needed, a step toward AI systems that handle ambiguity more like human conversational partners.