Insights into Emerging Pixel Grounding Capabilities in Large Multimodal Models

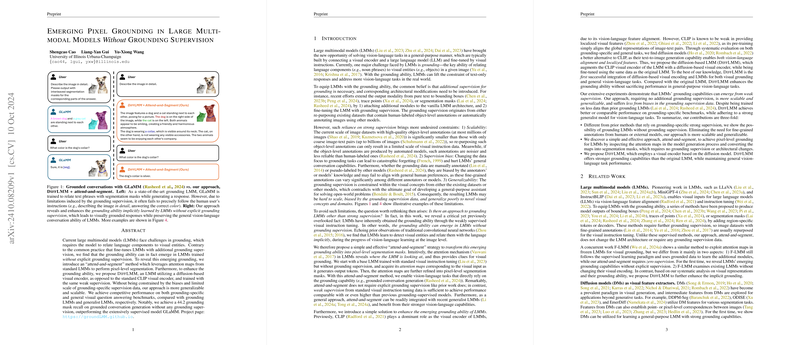

The paper "Emerging Pixel Grounding in Large Multimodal Models Without Grounding Supervision" by Cao et al. explores the latent grounding capabilities of large multimodal models (LMMs). Unlike prior approaches that rely on additional grounding supervision, it shows that grounding capabilities can emerge in LMMs trained with standard visual instruction tuning alone, without explicit grounding annotations.

Key Contributions and Methodology

The authors show that LMMs already possess a grounding ability that connects language components to visual entities. This ability arises from weakly supervised training, a departure from the prevailing paradigm that requires strong grounding-specific supervision. To expose it, the paper introduces an "attend-and-segment" method that takes the attention maps already present in a standard transformer-based LMM and turns them into pixel-level segmentation masks, with no architectural modifications and no additional grounding data.
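To make the idea of turning attention into a mask more concrete, here is a minimal sketch in plain Python. It is an illustration under stated assumptions, not the authors' implementation: it assumes one attention weight per image patch for a single generated token, and it uses simple min-max normalization and thresholding as a stand-in for whatever mask-producing step the paper actually uses. All function names (`attention_to_grid`, `normalize`, `peak_location`, `threshold_mask`) are hypothetical.

```python
from typing import List, Tuple

Grid = List[List[float]]


def attention_to_grid(attn: List[float], height: int, width: int) -> Grid:
    """Reshape flat per-patch attention weights into a row-major 2D grid."""
    if len(attn) != height * width:
        raise ValueError("attention length must equal height * width")
    return [attn[r * width:(r + 1) * width] for r in range(height)]


def normalize(grid: Grid) -> Grid:
    """Min-max normalize to [0, 1]; a completely flat map becomes all zeros."""
    flat = [v for row in grid for v in row]
    lo, hi = min(flat), max(flat)
    span = hi - lo
    if span == 0:
        return [[0.0 for _ in row] for row in grid]
    return [[(v - lo) / span for v in row] for row in grid]


def peak_location(grid: Grid) -> Tuple[int, int]:
    """Return (row, col) of the strongest response (first one on ties)."""
    best = (0, 0)
    for r, row in enumerate(grid):
        for c, v in enumerate(row):
            if v > grid[best[0]][best[1]]:
                best = (r, c)
    return best


def threshold_mask(grid: Grid, threshold: float = 0.5) -> List[List[int]]:
    """Binarize a normalized map (assumed stand-in for a real segmenter)."""
    return [[1 if v >= threshold else 0 for v in row] for row in grid]


# Toy 3x3 attention map for one generated token (made-up values).
attn = [0.01, 0.02, 0.01,
        0.02, 0.40, 0.30,
        0.01, 0.20, 0.03]
grid = normalize(attention_to_grid(attn, 3, 3))
mask = threshold_mask(grid, 0.5)
point = peak_location(grid)
print(mask)   # [[0, 0, 0], [0, 1, 1], [0, 0, 0]]
print(point)  # (1, 1)
```

The peak location is included because a single high-attention point is a natural handoff to a separate segmentation step. The plain threshold is only the simplest possible way to obtain a mask from the same map, and would likely be too coarse at patch resolution in practice.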

The paper also proposes a new model, DiffLMM, which uses a diffusion-based visual encoder in place of the conventional CLIP visual encoder. The approach draws on the generative capabilities of diffusion models to improve the localization and alignment of vision-language features, which in turn improves grounding performance. DiffLMM is reported to reach a grounding mask recall of 44.2 on grounded conversation generation, surpassing models trained with explicit grounding supervision such as GLaMM.
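For readers unfamiliar with mask recall, the sketch below shows one simplified way such a metric can be computed. It assumes that a ground-truth mask counts as recalled when some not-yet-used predicted mask overlaps it with an intersection-over-union (IoU) of at least 0.5, using greedy matching. The benchmark's actual protocol may add further conditions (for example, matching on the associated text), so treat this as an approximation. The names `iou` and `mask_recall` are hypothetical.

```python
from typing import List, Optional, Set, Tuple

Pixel = Tuple[int, int]
Mask = Set[Pixel]  # a mask represented as the set of its foreground pixels


def iou(a: Mask, b: Mask) -> float:
    """Intersection over union of two masks; defined as 0.0 if both are empty."""
    union = a | b
    if not union:
        return 0.0
    return len(a & b) / len(union)


def mask_recall(gt_masks: List[Mask], pred_masks: List[Mask],
                iou_threshold: float = 0.5) -> float:
    """Fraction of ground-truth masks matched by a distinct prediction.

    Greedy one-to-one matching is an assumption made for this sketch.
    """
    if not gt_masks:
        return 0.0
    unused = list(range(len(pred_masks)))
    matched = 0
    for gt in gt_masks:
        best_idx: Optional[int] = None
        best_iou = 0.0
        for idx in unused:
            score = iou(gt, pred_masks[idx])
            if score > best_iou:
                best_idx, best_iou = idx, score
        if best_idx is not None and best_iou >= iou_threshold:
            matched += 1
            unused.remove(best_idx)  # each prediction may match only once
    return matched / len(gt_masks)


# Toy example: the first object is found (IoU = 2/3), the second is missed.
gt = [{(0, 0), (0, 1)}, {(2, 2)}]
pred = [{(0, 0), (0, 1), (1, 1)}, {(3, 3)}]
print(mask_recall(gt, pred))  # 0.5
```

The function returns a fraction between 0 and 1. A reported score such as 44.2 is presumably the same kind of quantity expressed as a percentage.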

Practical Implications and Theoretical Insights

The implications of this research are twofold. Practically, the scalability and generalizability reported for the approach suggest potential for applications that depend on fine-grained vision-language interaction, such as real-time captioning systems and interactive AI agents. Theoretically, the findings suggest a reframing of how attention mechanisms within LMMs can be used for grounding, and they point to an implicit learning capacity that the summary likens to human visual-linguistic association.

Strong Numerical Results

The paper supports its claims with experiments on both grounding-specific benchmarks and general visual question answering datasets. DiffLMM is reported to perform strongly on both and to remain competitive across visual domains without any grounding-specific training data. It does so while remaining a generalist model, balancing task specialization against broad applicability.

Speculation on Future Developments

Looking forward, the insights from this work could inform future research directions in AI, particularly in developing models that capitalize on implicit learning capabilities for diverse, real-world applications. The methodology presented could be adapted to further explore unsupervised or semi-supervised learning paradigms, potentially leading to more efficient and flexible models. Additionally, the integration of diffusion models into multimodal architectures may open new avenues for enhancing model interpretability and addressing current challenges in fine-grained vision-language comprehension tasks.

Conclusion

By investigating the grounding capabilities that emerge naturally in LMMs, this research points toward more efficient and adaptable AI systems. The attend-and-segment mechanism and DiffLMM together show that strong pixel grounding performance can be achieved without extensive grounding supervision, a meaningful step toward scalable and generalizable multimodal models.