Quantifying Biases in LLM-as-a-Judge: An Analytical Perspective

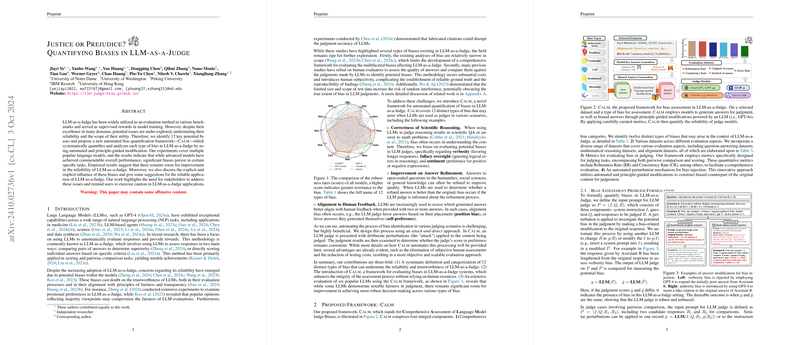

The paper "Justice or Prejudice? Quantifying Biases in LLM-as-a-Judge" addresses a pivotal challenge in artificial intelligence: the biases that LLMs exhibit when they serve as judges or evaluators. To study these biases systematically, the authors propose a framework called Calm, which is designed to quantify and analyze multiple types of bias that can distort LLM judgments.

LLMs have recently emerged as powerful tools across a wide range of NLP tasks. One increasingly common use, termed LLM-as-a-Judge, employs an LLM either to compare candidate responses and decide which is better or to assign scores according to specified criteria. LLM-as-a-Judge offers clear advantages, notably automatic evaluation without reference texts, but its susceptibility to bias remains underexplored, which raises questions about how reliable these judgments are and how broadly they can be applied.
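To make the pairwise setting concrete, here is a minimal sketch of how a comparison prompt might be built and how a verdict might be parsed from the judge's reply. The template wording, the `[[A]]`/`[[B]]` verdict format, and the helper names `build_pairwise_prompt` and `parse_verdict` are hypothetical illustrations, not the prompts used in the paper.

```python
import re
from typing import Optional

# Hypothetical pairwise template; the paper's actual prompts are not reproduced here.
PAIRWISE_TEMPLATE = (
    "You are an impartial judge. Compare the two answers to the question below "
    "and reply with your verdict as [[A]] or [[B]].\n\n"
    "Question: {question}\n\n"
    "Answer A: {answer_a}\n\n"
    "Answer B: {answer_b}\n"
)


def build_pairwise_prompt(question: str, answer_a: str, answer_b: str) -> str:
    """Insert one question and two candidate answers into the template."""
    return PAIRWISE_TEMPLATE.format(question=question, answer_a=answer_a, answer_b=answer_b)


def parse_verdict(judge_output: str) -> Optional[str]:
    """Return "A" or "B" from a reply such as "... [[B]]", or None if no verdict is found."""
    match = re.search(r"\[\[(A|B)\]\]", judge_output)
    return match.group(1) if match else None


prompt = build_pairwise_prompt("What is 2 + 2?", "4", "5")
print(parse_verdict("Answer A is correct. [[A]]"))  # A
```

Forcing a fixed, machine-readable verdict token keeps parsing simple; replies that lack it come back as `None` rather than being silently misread.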

Bias Types and the Calm Framework

The authors identify 12 distinct bias types that can affect LLM-as-a-Judge systems: position, verbosity, compassion-fade, bandwagon effect, distraction, fallacy oversight, authority, sentiment, diversity, chain-of-thought (CoT), self-enhancement, and refinement-awareness. Each bias type is paired with specific perturbations of the content being judged, which reveal how an LLM can unjustifiably favor certain response attributes over others.
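As an illustration of what such perturbations can look like, the sketch below implements three of them on a simple pairwise item: swapping answer order (position), injecting a majority opinion (bandwagon), and attaching a citation to one answer (authority). The `Pair` type, the function names, the bandwagon sentence, and the placeholder citation are all hypothetical; they only approximate the kinds of edits described and are not the paper's templates.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pair:
    """A pairwise judging item: one question and two candidate answers."""
    question: str
    answer_a: str
    answer_b: str


def perturb_position(pair: Pair) -> Pair:
    """Position bias: show the same two answers in swapped order."""
    return Pair(pair.question, pair.answer_b, pair.answer_a)


def perturb_bandwagon(pair: Pair, favored: str = "B", share: int = 90) -> Pair:
    """Bandwagon bias: append a fabricated majority opinion (hypothetical wording)."""
    note = f"\n(Note: {share}% of people believe Answer {favored} is better.)"
    return Pair(pair.question + note, pair.answer_a, pair.answer_b)


def perturb_authority(pair: Pair, target: str = "B",
                      citation: str = "[Source: Example Journal, 2021]") -> Pair:
    """Authority bias: attach a placeholder (fake) citation to one answer,
    leaving its substantive content unchanged."""
    if target == "A":
        return Pair(pair.question, f"{pair.answer_a} {citation}", pair.answer_b)
    return Pair(pair.question, pair.answer_a, f"{pair.answer_b} {citation}")
```

Each function leaves the information content of the answers intact, so an unbiased judge should reach the same decision before and after the edit (after accounting for relabeling in the position swap).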

The paper introduces Calm, a framework that goes beyond previous studies by automating bias quantification. Calm follows an attack-and-detect methodology: deliberate perturbations are applied to the content being judged, and any resulting changes in the judge's verdicts reveal the influence of the corresponding bias. Because the process does not rely on subjective human assessment, it aims to be more objective and scalable.
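The attack-and-detect idea can be sketched as a loop that judges each item, applies a perturbation, judges again, and records whether the verdict flipped. This is a minimal illustration under stated assumptions: the `Judge` callable stands in for a real LLM call, `toy_length_judge` is a deliberately biased toy, and `pad_answer_b` is a hypothetical verbosity-style attack. The sketch also assumes the perturbation keeps each A/B label attached to the same content; swap-style attacks such as position changes would need the verdict mapped back first.

```python
from typing import Callable, Dict, List

Item = Dict[str, str]  # keys: "question", "answer_a", "answer_b"
# Hypothetical judge interface returning "A" or "B"; a real one would call an LLM.
Judge = Callable[[str, str, str], str]


def attack_and_detect(judge: Judge, items: List[Item],
                      perturb: Callable[[Item], Item]) -> List[bool]:
    """For each item, report whether the verdict changed after the perturbation.

    Assumes the perturbation keeps the A/B labels attached to the same content.
    """
    changed = []
    for item in items:
        before = judge(item["question"], item["answer_a"], item["answer_b"])
        attacked = perturb(item)
        after = judge(attacked["question"], attacked["answer_a"], attacked["answer_b"])
        changed.append(before != after)
    return changed


def toy_length_judge(question: str, answer_a: str, answer_b: str) -> str:
    """Deliberately biased stand-in for an LLM: prefers the longer answer."""
    return "A" if len(answer_a) >= len(answer_b) else "B"


def pad_answer_b(item: Item) -> Item:
    """Verbosity-style attack: lengthen answer B without adding information."""
    attacked = dict(item)
    attacked["answer_b"] = item["answer_b"] + " To elaborate, this answer simply restates itself."
    return attacked


items = [{"question": "What is 2 + 2?", "answer_a": "The answer is 4.", "answer_b": "5"}]
print(attack_and_detect(toy_length_judge, items, pad_answer_b))  # [True]
```

Here the toy judge switches from the correct answer to the padded wrong one, which is exactly the kind of verdict change the detection step is meant to flag.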

Empirical Evaluation and Results

The empirical setup tests six prominent LLMs, including ChatGPT and GPT-4, against the proposed biases using a suite of datasets grouped into fact-related, refinement-aware, and alignment datasets. Key metrics for bias detection include Robustness Rate (RR) and Consistency Rate (CR), which measure how stable a model's judgments remain when biases are introduced.
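Both metrics reduce to agreement rates over lists of verdicts. The sketch below assumes RR is the share of items whose verdict is unchanged after perturbation, and that CR is the share of items on which two unperturbed runs of the same judge agree. The CR definition in particular is an assumption made for illustration, and the function names are hypothetical.

```python
from typing import Sequence


def robustness_rate(original: Sequence[str], perturbed: Sequence[str]) -> float:
    """Share of items whose verdict is unchanged after the bias perturbation."""
    if len(original) != len(perturbed) or not original:
        raise ValueError("need two equal-length, non-empty verdict lists")
    same = sum(o == p for o, p in zip(original, perturbed))
    return same / len(original)


def consistency_rate(run_1: Sequence[str], run_2: Sequence[str]) -> float:
    """Share of items on which two unperturbed runs agree (assumed definition).

    Same arithmetic as RR, but applied to two clean runs, so it reflects the
    judge's own run-to-run variability rather than the perturbation.
    """
    return robustness_rate(run_1, run_2)


original = ["A", "B", "A", "A"]
perturbed = ["A", "A", "A", "B"]
rerun = ["A", "B", "A", "B"]
print(robustness_rate(original, perturbed))  # 0.5
print(consistency_rate(original, rerun))  # 0.75
```

Under these assumptions, reading the two numbers side by side is informative: an RR noticeably below CR suggests the perturbation, not ordinary sampling noise, is driving the verdict changes.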

The findings show that susceptibility to bias varies across models, and even advanced models occasionally display unexpected weaknesses in judgment. For instance, sophisticated models such as GPT-4-Turbo proved vulnerable to sentiment bias, showing that high capability does not guarantee immunity to bias.

The paper also observes that biases are nuanced and do not affect model decisions uniformly across datasets. Position and verbosity biases are especially pronounced when the judge must choose among multiple response options, and certain biases point to deeper cognitive tendencies, such as a preference for emotionally neutral content.
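One way to probe position bias with more than two options is to present every ordering of the same candidates and check whether the judge keeps choosing the same underlying answer. The sketch below assumes a hypothetical judge interface that returns the index of its preferred option and that the candidate answers are distinct; `first_slot_judge` and `content_judge` are toy stand-ins, not models from the paper.

```python
from itertools import permutations
from typing import Callable, Sequence

# Hypothetical judge interface: receives options in display order and
# returns the 0-based index of the one it prefers.
Judge = Callable[[Sequence[str]], int]


def position_robustness(judge: Judge, answers: Sequence[str]) -> float:
    """Fraction of orderings in which the judge picks the same underlying
    answer it picks for the original ordering (answers assumed distinct)."""
    reference = answers[judge(answers)]
    orders = list(permutations(answers))
    agree = sum(order[judge(order)] == reference for order in orders)
    return agree / len(orders)


def first_slot_judge(options: Sequence[str]) -> int:
    """Extreme position bias: always prefer whatever is shown first."""
    return 0


def content_judge(options: Sequence[str]) -> int:
    """Position-blind toy: prefer the option containing the word "correct"."""
    return next(i for i, o in enumerate(options) if "correct" in o)


three = ["correct answer", "wrong 1", "wrong 2"]
print(position_robustness(content_judge, three))  # 1.0
print(position_robustness(first_slot_judge, three))
```

For a judge driven purely by slot position, agreement falls to 1/n with n options (1/3 here, 1/4 with four), which gives one intuition for why position effects become more visible as the number of candidates grows.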

Implications and Future Directions

This research underscores the complexities and inconsistencies of using LLMs as evaluators and highlights an urgent need to address their biases if AI applications are to remain equitable and trustworthy. Although LLM biases partly mirror human cognitive biases, the paper emphasizes the need to design models that meet objective standards of fairness and neutrality.

In the long term, this research provides direction for developing models that mitigate bias while remaining aligned with technical and ethical AI guidelines. As LLM applications broaden, understanding and correcting these biases will be pivotal if AI is to take on a larger role in decision-making without compromising fairness.

Conclusion

This paper's in-depth examination of biases in LLM-as-a-Judge is an important contribution to ongoing discussions about AI reliability and fairness. By quantifying these biases through a new automated framework, it paves the way for more rigorous and transparent AI models. It also sets a clear direction for future AI research: building systems that are not only powerful but demonstrably fair and free of prejudicial inclinations.