Upcycling Instruction Tuning from Dense to Mixture-of-Experts via Parameter Merging

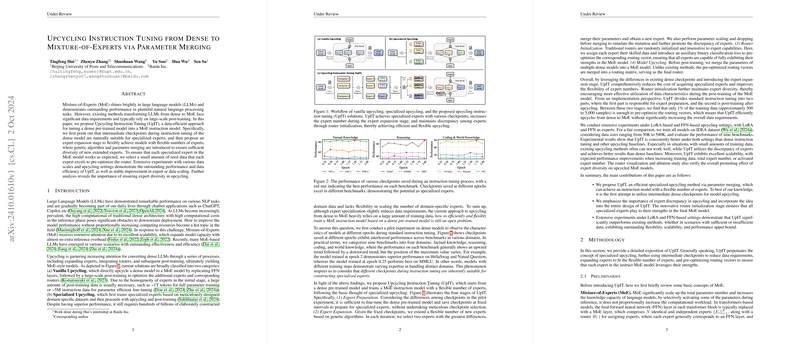

The paper "Upcycling Instruction Tuning from Dense to Mixture-of-Experts via Parameter Merging" introduces a data-efficient method named Upcycling Instruction Tuning (UpIT) to transform dense pre-trained models into Mixture-of-Experts (MoE) instruction models. Traditional methods for transforming dense LLMs to MoE models often require extensive datasets and large-scale post-training, imposing significant computational burdens. UpIT proposes a mechanism that leverages intermediate checkpoints during the instruction tuning of dense models to create specialized experts efficiently.

Methodological Innovations

1. Expert Preparation:

The paper observes that intermediate checkpoints from instruction tuning perform differently across domains. UpIT therefore trains a dense model, saves multiple intermediate checkpoints along the way, and uses these checkpoints as specialized experts, each proficient in a distinct domain. This step removes the need for domain-specific datasets and reduces the complexity traditionally associated with training specialized experts.
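
As a minimal sketch of how such checkpoints might be scheduled, the hypothetical helper below picks evenly spaced training steps at which to save a checkpoint. The even spacing and the name `checkpoint_steps` are assumptions made for illustration; this summary does not state how the paper spaces its checkpoints.

```python
def checkpoint_steps(total_steps, num_checkpoints):
    """Return evenly spaced step numbers, the last one being total_steps.

    Assumption: checkpoints are saved at regular intervals. Any other
    schedule would work just as well for producing candidate experts.
    """
    if not 1 <= num_checkpoints <= total_steps:
        raise ValueError("num_checkpoints must be between 1 and total_steps")
    # Integer arithmetic keeps the steps exact and strictly increasing.
    return [total_steps * (i + 1) // num_checkpoints for i in range(num_checkpoints)]


steps = checkpoint_steps(total_steps=1000, num_checkpoints=4)
```

Each saved checkpoint would then be treated as one candidate expert in the later stages.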

2. Expert Expansion:

To support a flexible number of experts, UpIT introduces a parameter merging strategy based on a genetic algorithm. It selects the two experts with the lowest similarity and merges their parameters to generate a new expert. This process simulates genetic mutation and maintains sufficient diversity among experts.
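
The selection-and-merge loop described above can be sketched roughly as follows. This is an illustration under stated assumptions, not the paper's implementation: experts are represented as flat lists of floats, similarity is cosine similarity, and the merge is a linear interpolation with a randomly drawn coefficient. All function names and the coefficient range are hypothetical.

```python
import itertools
import math
import random


def cosine_similarity(a, b):
    """Cosine similarity between two flat, non-zero parameter vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def least_similar_pair(experts):
    """Return the indices (i, j) of the two experts with the lowest similarity."""
    return min(
        itertools.combinations(range(len(experts)), 2),
        key=lambda pair: cosine_similarity(experts[pair[0]], experts[pair[1]]),
    )


def merge(a, b, alpha):
    """Linear interpolation of two parameter vectors (an assumed merge rule)."""
    return [alpha * x + (1.0 - alpha) * y for x, y in zip(a, b)]


def expand_experts(experts, target_count, rng=None):
    """Grow the expert pool until it contains target_count experts."""
    rng = rng or random.Random(0)
    pool = [list(e) for e in experts]
    while len(pool) < target_count:
        i, j = least_similar_pair(pool)
        # Assumed range: a random coefficient keeps repeated merges distinct.
        alpha = rng.uniform(0.3, 0.7)
        pool.append(merge(pool[i], pool[j], alpha))
    return pool


seed_experts = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
pool = expand_experts(seed_experts, target_count=5)
```

In this toy version the same pair can be chosen more than once; a fuller implementation might exclude pairs that have already been merged.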

3. Router Initialization:

The paper notes that randomly initialized routers, as used in traditional methods, misallocate tokens in the early stages of training. UpIT addresses this with a data selection approach: it curates expert-specific data for each expert and uses that data to pre-optimize the routing vectors. As a result, each expert can make full use of its strengths, which improves the overall capability of the MoE model.
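
To make the idea concrete, here is a simplified sketch of data selection followed by router initialization. It rests on two assumptions that are not taken from the paper: a sample is assigned to the expert with the lowest loss on it, and each routing vector is set to the normalized mean feature of the assigned samples rather than being optimized by gradient descent. The inputs `losses` and `features` and all function names are hypothetical.

```python
import math


def select_expert_data(losses):
    """Group sample indices by the expert that has the lowest loss on them.

    losses[s][e] is the loss of expert e on sample s (hypothetical input).
    """
    num_experts = len(losses[0])
    buckets = [[] for _ in range(num_experts)]
    for s, row in enumerate(losses):
        best = min(range(num_experts), key=lambda e: row[e])
        buckets[best].append(s)
    return buckets


def init_routing_vectors(features, buckets):
    """Set each routing vector to the unit-norm mean feature of its bucket."""
    dim = len(features[0])
    vectors = []
    for bucket in buckets:
        mean = [0.0] * dim
        for s in bucket:
            for d in range(dim):
                mean[d] += features[s][d] / len(bucket)
        # An empty bucket leaves a zero vector; avoid dividing by zero.
        norm = math.sqrt(sum(v * v for v in mean)) or 1.0
        vectors.append([v / norm for v in mean])
    return vectors


def route(feature, vectors):
    """Return the index of the expert whose routing vector scores highest."""
    scores = [sum(f * v for f, v in zip(feature, vec)) for vec in vectors]
    return max(range(len(vectors)), key=lambda e: scores[e])


losses = [[0.1, 0.9], [0.2, 0.8], [0.9, 0.1]]
features = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]
buckets = select_expert_data(losses)
vectors = init_routing_vectors(features, buckets)
```

With routing vectors initialized this way, inputs that resemble an expert's selected data are sent to that expert from the first training step instead of being spread at random.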

4. Model Upcycling:

After the experts and routing vectors have been pre-optimized, UpIT merges the dense model's parameters into an MoE model. The expert modules and routers are integrated into the final MoE framework, and the resulting model is then post-trained for further refinement.
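
For readers unfamiliar with how experts and routers combine at inference time, the following sketch shows a generic top-k MoE forward pass for a single token. It illustrates the general mechanism only: the softmax over the selected scores, the default `k=2`, and the representation of experts as plain Python callables are assumptions, not details confirmed by the paper.

```python
import math


def top_k_route(scores, k):
    """Return the top-k expert indices and softmax weights over their scores."""
    top = sorted(range(len(scores)), key=lambda e: scores[e], reverse=True)[:k]
    peak = max(scores[e] for e in top)  # subtract the max for numerical stability
    exps = [math.exp(scores[e] - peak) for e in top]
    total = sum(exps)
    return top, [x / total for x in exps]


def moe_forward(hidden, routing_vectors, experts, k=2):
    """Mix the outputs of the k highest-scoring experts for one token."""
    scores = [sum(h * r for h, r in zip(hidden, vec)) for vec in routing_vectors]
    indices, weights = top_k_route(scores, k)
    output = [0.0] * len(hidden)
    for e, w in zip(indices, weights):
        expert_out = experts[e](hidden)  # each expert maps a vector to a same-size vector
        output = [o + w * x for o, x in zip(output, expert_out)]
    return output


# Toy experts standing in for the upcycled expert modules.
toy_experts = [
    lambda h: [2.0 * x for x in h],
    lambda h: [-x for x in h],
    lambda h: list(h),
]
toy_routing_vectors = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
mixed = moe_forward([1.0, 0.0], toy_routing_vectors, toy_experts, k=2)
```

Only the selected experts are evaluated for each token, which is what keeps the compute cost of an MoE layer below that of running every expert.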

Empirical Evaluation

The experiments cover both LoRA-based and FFN-based upcycling settings. Nine benchmarks (HumanEval, GSM8K, HellaSwag, BBH, MMLU, NQ, TriviaQA, ARC-c, and ARC-e) were used to compare UpIT against baseline methods. The key findings are:

- Performance: UpIT consistently outperforms existing upcycling methods. For instance, in LoRA-based settings, UpIT achieves an average improvement of 2.22% over LoRAMoE(8E,A2) and 2.76% over LoRAMoE(16E,A2).

- Data Efficiency: UpIT substantially reduces the data requirements. With only 50K training samples, LoRA-based UpIT(8E,A2) matches the performance of LoRAMoE trained on 500K samples. Furthermore, FFN-based UpIT achieves competitive performance even with smaller datasets.

- Scalability: The performance of UpIT scales effectively with the increase in the number of experts and training data. In contrast, traditional methods exhibit diminishing returns or performance degradation when scaling up experts, highlighting UpIT's efficiency in utilizing diverse experts.

- Expert Diversity: The results underscore the importance of expert diversity. UpIT's methods keep different experts specialized in distinct domains, which leads to more efficient use of the training data.

Implications and Future Developments

UpIT is a step towards more efficient and scalable MoE models. The approach could serve as a foundation for future work involving larger LLMs and more sophisticated domain-specific tasks. By addressing the computational and data inefficiencies of traditional methods, UpIT opens avenues for broader application of MoE models in real-world scenarios while maintaining high performance and flexibility.

Future work could refine the expert expansion mechanism, explore more advanced genetic algorithms, and investigate how the domains of expertise of different checkpoints interact. Extending the UpIT framework to other architectures and to tasks beyond natural language processing is another possible direction.

In summary, the paper presents a method that uses intermediate checkpoints to create specialized experts and combines genetic algorithm-based parameter merging with pre-optimized routers to build MoE models. The reported results show gains in data efficiency, flexibility, scalability, and performance when transforming dense LLMs into MoE models.