PairDistill: Pairwise Relevance Distillation for Dense Retrieval

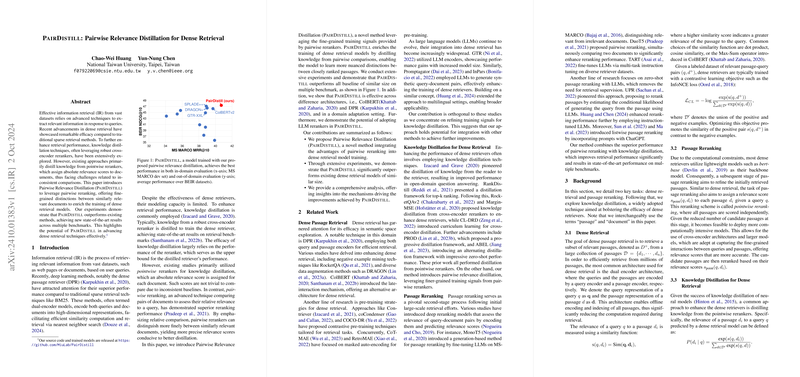

The paper "PairDistill: Pairwise Relevance Distillation for Dense Retrieval," authored by Chao-Wei Huang and Yun-Nung Chen from National Taiwan University, introduces Pairwise Relevance Distillation (PairDistill), a method for improving dense retrieval models. PairDistill distills the fine-grained training signals of pairwise rerankers into dense retrievers and reports state-of-the-art performance across several benchmarks.

Motivation and Background

Information retrieval (IR) is the task of finding the documents in a large collection that are relevant to a query, with applications ranging from web search to academic search. Dense retrieval methods, particularly dual-encoder models such as the Dense Passage Retriever (DPR), have shown significant improvements over traditional sparse techniques such as BM25, because they encode queries and documents into dense vectors that capture semantic similarity instead of relying on exact term overlap. Even so, dense retrievers are usually less accurate than rerankers that read the query and document together, so a common way to strengthen them is knowledge distillation from these more powerful rerankers.
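To make the dual-encoder setup concrete, here is a minimal sketch that scores documents by the inner product of precomputed query and document embeddings and sorts them by score. The toy vectors and the `rank_by_dot_product` helper are hypothetical. A real system would produce the embeddings with trained encoders such as DPR's and search them with an approximate nearest-neighbor index instead of a linear scan.

```python
from typing import Dict, List, Tuple


def dot(u: List[float], v: List[float]) -> float:
    """Inner product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def rank_by_dot_product(
    query_emb: List[float], doc_embs: Dict[str, List[float]]
) -> List[Tuple[str, float]]:
    """Score every document against the query and sort by descending score."""
    scored = [(doc_id, dot(query_emb, emb)) for doc_id, emb in doc_embs.items()]
    return sorted(scored, key=lambda item: item[1], reverse=True)


# Hypothetical 3-dimensional embeddings standing in for encoder outputs.
query = [0.2, 0.9, 0.1]
docs = {
    "d1": [0.1, 0.8, 0.0],
    "d2": [0.9, 0.1, 0.3],
    "d3": [0.3, 0.6, 0.5],
}
for doc_id, score in rank_by_dot_product(query, docs):
    print(doc_id, round(score, 2))  # d1 0.74, then d3 0.65, then d2 0.3
```

Because each document is encoded independently of the query, document embeddings can be computed and indexed offline, which is what makes dual encoders fast at query time.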

Prior distillation approaches typically use pointwise rerankers, which assign an absolute relevance score to each document independently. Although effective, these scores are not necessarily calibrated across different documents, which makes it difficult to separate documents of similar relevance. Pairwise reranking instead compares two documents at a time to decide which is more relevant, providing a finer-grained and more robust signal that leads to more accurate relevance distinctions.

Proposed Method: PairDistill

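The core idea of PairDistill is to incorporate pairwise relevance knowledge into the training of dense retrievers. A pairwise reranker judges which of two candidate documents is more relevant to a query, and the dense retriever is trained so that its own relative preferences between the two documents agree with the reranker's.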

Pairwise Reranking Techniques

The paper utilizes two methods for pairwise reranking:

- Classification-based Pairwise Reranking: A binary classifier is trained on triplets of (query, document-a, document-b). It encodes the triplet jointly and outputs the probability that document-a is more relevant than document-b.

- Instruction-based Pairwise Reranking: A large language model (LLM) is used as a zero-shot reranker. Given a query, the model is instructed to choose the more relevant of two documents, and the pairwise probability is derived from the model's output.
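The two reranker types differ mainly in how the pairwise probability is read off the model. The sketch below assumes that the classification-based reranker emits a single logit for "document-a beats document-b", converted with a sigmoid, and that the instruction-based LLM is asked to answer "A" or "B", with the probability taken as a softmax over the logits of those two answer tokens. These readouts, the hypothetical `average_both_orders` helper, and the example logits are illustrative assumptions, not the paper's exact implementation. Averaging over both presentation orders is shown only as one common way to counter an LLM's position bias.

```python
import math


def sigmoid(x: float) -> float:
    """Logistic function mapping a logit to a probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def classifier_pair_prob(logit_a_beats_b: float) -> float:
    """Classification-based reranker (assumed): one logit for 'document-a wins'."""
    return sigmoid(logit_a_beats_b)


def llm_pair_prob(logit_token_a: float, logit_token_b: float) -> float:
    """Instruction-based reranker (assumed): softmax over the 'A' and 'B' logits.

    Returns the probability that the passage labeled 'A' is more relevant.
    """
    m = max(logit_token_a, logit_token_b)  # subtract the max for numerical stability
    ea = math.exp(logit_token_a - m)
    eb = math.exp(logit_token_b - m)
    return ea / (ea + eb)


def average_both_orders(p_a_first: float, p_b_first: float) -> float:
    """Hypothetical debiasing step.

    p_a_first: P(first passage wins) when document-a is shown first.
    p_b_first: P(first passage wins) when document-b is shown first.
    Returns a symmetrized estimate of P(document-a is more relevant).
    """
    return 0.5 * (p_a_first + (1.0 - p_b_first))


print(classifier_pair_prob(0.0))      # 0.5: the classifier is undecided
print(llm_pair_prob(2.0, 0.0))        # roughly 0.88 in favor of passage A
print(average_both_orders(0.8, 0.3))  # roughly 0.75
```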

Loss Function and Training Process

PairDistill combines several loss components:

- Contrastive Loss: The standard dense retriever training loss, which raises the score of the relevant passage relative to negative passages.

- Pointwise Distillation Loss: Aligns the dense retriever's scores with those of a pointwise reranker.

- Pairwise Distillation Loss: Aligns the dense retriever's relative relevance predictions with those of the pairwise reranker.
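One way to write these three components, following common practice rather than the paper's exact notation, is sketched below. Assume $s(q, d)$ is the retriever's relevance score, $d^+$ the positive passage, $\mathcal{D}$ the candidate set (containing $d^+$), $r(q, d)$ the pointwise reranker's score, $\tau$ a temperature, $\sigma$ the logistic function, and $p^{\text{rr}}_{ij}$ the pairwise reranker's probability that $d_i$ is more relevant than $d_j$. Both distillation terms are assumed to be KL divergences from the teacher's distribution to the retriever's.

$$
\begin{aligned}
\mathcal{L}_{\text{CL}} &= -\log \frac{\exp s(q, d^+)}{\sum_{d \in \mathcal{D}} \exp s(q, d)} \\
P_{\text{ret}}(d \mid q) &= \frac{\exp\big(s(q, d)/\tau\big)}{\sum_{d' \in \mathcal{D}} \exp\big(s(q, d')/\tau\big)}, \qquad
P_{\text{rr}}(d \mid q) = \frac{\exp\big(r(q, d)/\tau\big)}{\sum_{d' \in \mathcal{D}} \exp\big(r(q, d')/\tau\big)} \\
\mathcal{L}_{\text{point}} &= \mathrm{KL}\big(P_{\text{rr}}(\cdot \mid q) \,\big\|\, P_{\text{ret}}(\cdot \mid q)\big) \\
p^{\text{ret}}_{ij} &= \frac{\exp\big(s(q, d_i)/\tau\big)}{\exp\big(s(q, d_i)/\tau\big) + \exp\big(s(q, d_j)/\tau\big)} = \sigma\Big(\frac{s(q, d_i) - s(q, d_j)}{\tau}\Big) \\
\mathcal{L}_{\text{pair}} &= \sum_{(i, j)} \Big[ p^{\text{rr}}_{ij} \log \frac{p^{\text{rr}}_{ij}}{p^{\text{ret}}_{ij}} + \big(1 - p^{\text{rr}}_{ij}\big) \log \frac{1 - p^{\text{rr}}_{ij}}{1 - p^{\text{ret}}_{ij}} \Big]
\end{aligned}
$$

Here the sum in $\mathcal{L}_{\text{pair}}$ runs over the document pairs scored by the pairwise reranker. Because $p^{\text{ret}}_{ij}$ depends only on the score difference, the pairwise term constrains the retriever's ordering of two documents without requiring calibrated absolute scores.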

The final loss is a weighted sum of these three components, and the retriever is trained over multiple iterations to avoid overfitting and to progressively improve its retrieval ability.
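A minimal sketch of the weighted objective for a single query, assuming the usual forms of each term: cross-entropy over the candidate set for the contrastive loss, KL divergence to the pointwise reranker's softmax distribution, and a binary KL divergence per document pair against the pairwise reranker's probabilities. The weights `w_point` and `w_pair`, the temperature `tau`, and all numbers in the example are hypothetical. A real implementation would compute these terms with an autodiff framework over batches so that gradients reach the encoders.

```python
import math
from typing import Dict, List, Tuple


def softmax(xs: List[float], tau: float = 1.0) -> List[float]:
    """Numerically stable softmax with temperature tau."""
    scaled = [x / tau for x in xs]
    m = max(scaled)
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kl(p: List[float], q: List[float]) -> float:
    """KL(p || q) for discrete distributions; terms with p_i = 0 contribute 0."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def contrastive_loss(scores: List[float], pos_idx: int) -> float:
    """Negative log-probability of the positive passage among all candidates."""
    return -math.log(softmax(scores)[pos_idx])


def pointwise_loss(scores: List[float], reranker_scores: List[float],
                   tau: float = 1.0) -> float:
    """KL from the pointwise reranker's candidate distribution to the retriever's."""
    return kl(softmax(reranker_scores, tau), softmax(scores, tau))


def pairwise_loss(scores: List[float], pair_probs: Dict[Tuple[int, int], float],
                  tau: float = 1.0) -> float:
    """Sum of binary KL terms, one per (i, j) pair scored by the pairwise reranker."""
    total = 0.0
    for (i, j), p_teacher in pair_probs.items():
        # Retriever's probability that d_i beats d_j: sigmoid of the scaled score gap.
        p_student = 1.0 / (1.0 + math.exp(-(scores[i] - scores[j]) / tau))
        total += kl([p_teacher, 1.0 - p_teacher], [p_student, 1.0 - p_student])
    return total


def pair_distill_loss(scores: List[float], pos_idx: int,
                      reranker_scores: List[float],
                      pair_probs: Dict[Tuple[int, int], float],
                      w_point: float = 1.0, w_pair: float = 1.0,
                      tau: float = 1.0) -> float:
    """Weighted sum of the contrastive, pointwise, and pairwise terms."""
    return (contrastive_loss(scores, pos_idx)
            + w_point * pointwise_loss(scores, reranker_scores, tau)
            + w_pair * pairwise_loss(scores, pair_probs, tau))


# Hypothetical values for one query with three candidate passages.
retriever_scores = [2.0, 1.5, 0.5]
reranker_scores = [3.0, 1.0, 0.0]
pair_probs = {(0, 1): 0.9, (1, 2): 0.7}
print(pair_distill_loss(retriever_scores, 0, reranker_scores, pair_probs))
```

Setting `w_pair` to zero recovers a contrastive-plus-pointwise objective, which makes it easy to compare the two kinds of distillation signal under the same training loop.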

Experimental Validation and Results

The authors conduct extensive experiments to validate the efficacy of PairDistill. The key findings are as follows:

- In-Domain Performance: PairDistill achieves a Mean Reciprocal Rank at cutoff 10 (MRR@10) of 40.7 on the MS MARCO dev set, outperforming ColBERTv2 and setting a new state of the art. It also performs strongly on the TREC DL19 and DL20 datasets.

- Out-of-Domain Generalization: The model consistently outperforms other dense retrievers on BEIR and LoTTE benchmarks, demonstrating its robustness across diverse datasets.

- Open-Domain Question Answering: PairDistill achieves higher Recall@5 on the NaturalQuestions, TriviaQA, and SQuAD datasets than leading dense retrievers such as ColBERTv2.
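For readers unfamiliar with the metrics above, the sketch below computes MRR@10 (the standard MS MARCO dev metric, conventionally reported multiplied by 100) and top-k recall as used in open-domain QA, where a question counts as a hit if any of the top-k retrieved passages is relevant (typically, one that contains an answer string). The function names and toy data are hypothetical.

```python
from typing import Dict, List, Set


def mrr_at_k(rankings: Dict[str, List[str]], relevant: Dict[str, Set[str]],
             k: int = 10) -> float:
    """Mean over queries of 1/rank of the first relevant passage in the top k (0 if none)."""
    total = 0.0
    for qid, ranked in rankings.items():
        for rank, pid in enumerate(ranked[:k], start=1):
            if pid in relevant.get(qid, set()):
                total += 1.0 / rank
                break
    return total / len(rankings)


def recall_at_k(rankings: Dict[str, List[str]], relevant: Dict[str, Set[str]],
                k: int = 5) -> float:
    """Fraction of queries with at least one relevant passage in the top k."""
    hits = sum(
        1 for qid, ranked in rankings.items()
        if any(pid in relevant.get(qid, set()) for pid in ranked[:k])
    )
    return hits / len(rankings)


rankings = {
    "q1": ["p3", "p1", "p7"],  # first relevant passage at rank 2
    "q2": ["p9", "p4", "p2"],  # no relevant passage retrieved
}
relevant = {"q1": {"p1"}, "q2": {"p5"}}
print(mrr_at_k(rankings, relevant))          # 0.25
print(recall_at_k(rankings, relevant, k=2))  # 0.5
```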

Implications and Future Directions

The results highlight the potential of pairwise relevance distillation in dense retrieval. By offering a more nuanced training signal, PairDistill enhances the retriever's ability to discern fine-grained relevance distinctions, thereby improving both in-domain performance and cross-domain generalizability.

Future work could explore reducing the computational overhead of pairwise reranking, whose number of document pairs grows quadratically with the number of candidates, perhaps through more efficient sampling techniques or approximation methods. Additionally, extending this approach to multilingual settings or incorporating other types of distillation signals could further broaden its applicability.

Conclusion

PairDistill represents a significant advancement in information retrieval by integrating pairwise reranking signals into the training of dense retrieval models. The approach not only achieves new state-of-the-art results but also opens several avenues for further research on retrieval techniques.