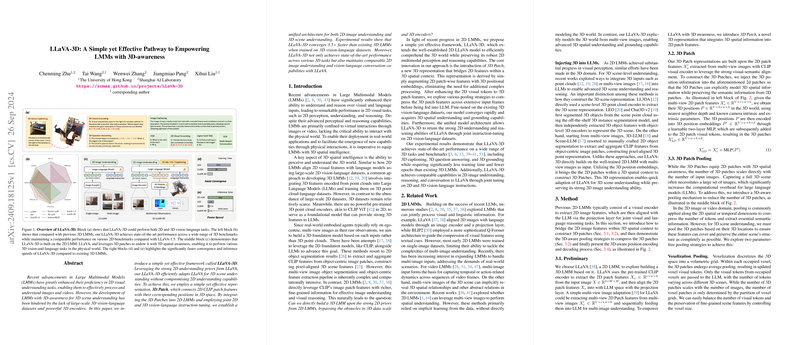

Overview of LLaVA-3D: Enhancing Large Multimodal Models with 3D Awareness

The paper presents LLaVA-3D, a framework that extends Large Multimodal Models (LMMs) with 3D spatial awareness. Existing LMMs excel at 2D visual tasks but struggle to understand 3D scenes, largely because suitable 3D datasets are limited and powerful 3D encoders are lacking. The work builds on LLaVA, a 2D LMM, by introducing a representation termed "3D Patch," which enriches 2D patch features with 3D spatial context through position embeddings. By integrating 3D patches into the existing 2D LMM, LLaVA-3D handles both 2D image understanding and 3D scene understanding within a single model.

Methodological Insights

The core of LLaVA-3D is the way it injects 3D spatial information into an LMM. The authors augment 2D image patch features extracted by CLIP with 3D positional embeddings to form 3D patches, which ties each 2D image token to its location in the 3D scene. The model then compresses these 3D patches with 3D-aware pooling strategies, such as voxelization and Farthest Point Sampling (FPS), reducing the number of patches and thus the computational cost while retaining scene information.
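
The sketch below illustrates the three ideas in this paragraph on toy data: adding a 3D position embedding to 2D patch features, voxel pooling, and Farthest Point Sampling. It is a minimal NumPy illustration and not the authors' implementation. The function names, the linear projection `proj` used as the position embedding, the mean aggregation inside each voxel, and the assumption that every patch already has a 3D coordinate `patch_xyz` are all hypothetical choices made for the example.

```python
import numpy as np

def make_3d_patches(patch_feats, patch_xyz, proj):
    """Add a 3D position embedding to each 2D patch feature.

    patch_feats: (N, D) 2D patch features (e.g. from an image encoder).
    patch_xyz:   (N, 3) assumed 3D coordinate of each patch.
    proj:        (3, D) hypothetical linear position-embedding weights.
    """
    return patch_feats + patch_xyz @ proj

def voxel_pool(feats, xyz, voxel_size):
    """Average the features of all patches that fall into the same voxel."""
    # Integer voxel index of every patch.
    idx = np.floor(xyz / voxel_size).astype(np.int64)
    # Map each patch to the id of its (unique) occupied voxel.
    _, inverse = np.unique(idx, axis=0, return_inverse=True)
    inverse = inverse.reshape(-1)
    n_vox = int(inverse.max()) + 1
    counts = np.bincount(inverse, minlength=n_vox)
    pooled = np.zeros((n_vox, feats.shape[1]), dtype=np.float64)
    np.add.at(pooled, inverse, feats)  # unbuffered scatter-add per voxel
    return pooled / counts[:, None]

def farthest_point_sampling(xyz, k, start=0):
    """Greedily pick k indices so that each new point is the one
    farthest from the points already chosen."""
    n = xyz.shape[0]
    k = min(k, n)
    chosen = np.empty(k, dtype=np.int64)
    chosen[0] = start
    # Distance from every point to its nearest chosen point so far.
    dist = np.linalg.norm(xyz - xyz[start], axis=1)
    for i in range(1, k):
        chosen[i] = int(np.argmax(dist))
        new_dist = np.linalg.norm(xyz - xyz[chosen[i]], axis=1)
        dist = np.minimum(dist, new_dist)
    return chosen

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    feats = rng.normal(size=(1000, 16))       # toy patch features
    xyz = rng.uniform(0.0, 4.0, size=(1000, 3))  # toy 3D positions
    proj = rng.normal(size=(3, 16))
    patches = make_3d_patches(feats, xyz, proj)
    print(voxel_pool(patches, xyz, voxel_size=1.0).shape)
    print(patches[farthest_point_sampling(xyz, k=64)].shape)
```

The two pooling routes trade off differently: voxel pooling yields a token count that depends on how many voxels the scene occupies, while FPS returns a fixed number of tokens chosen to cover the scene evenly.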

A two-stage training process further refines the model's ability to handle both 2D and 3D tasks. The first stage aligns 3D patches with the LLM using available region-level and scene-level 3D datasets; the second stage performs task-specific instruction tuning on both 2D image tasks and 3D scene tasks. This strategy is designed to give LLaVA-3D strong performance on 3D tasks while preserving its existing 2D capabilities.

Experimental Evaluation

LLaVA-3D sets a new state of the art on a variety of 3D vision-and-language tasks. In 3D question answering, the results show a significant improvement over previous state-of-the-art models on ScanQA, SQA3D, MMScan QA, and OpenEQA. Notably, LLaVA-3D continues to outperform other models even when they undergo task-specific fine-tuning, underscoring the efficiency and robustness of the proposed framework.

In 3D dense captioning, LLaVA-3D exceeds previous methods by a sizable margin on benchmarks such as Scan2Cap and MMScan Captioning. Its 2D click-based 3D dense captioning capability is particularly noteworthy: users can interactively obtain 3D object captions and bounding boxes simply by clicking on 2D images.
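
This summary does not say how a 2D click is mapped into the 3D scene. One standard way to do it, shown here purely as an assumption, is to unproject the clicked pixel with a pinhole camera model, given a depth map, camera intrinsics `K`, and a camera-to-world pose. The function name `unproject_click` and all of its inputs are hypothetical and are not taken from the paper.

```python
import numpy as np

def unproject_click(u, v, depth, K, cam_to_world):
    """Lift a clicked pixel (u, v) to a 3D point in world coordinates.

    Assumes a pinhole camera: depth[v, u] is the z-distance along the
    optical axis, K is the 3x3 intrinsics matrix, and cam_to_world is a
    4x4 homogeneous camera-to-world transform.
    """
    z = depth[v, u]
    fx, fy = K[0, 0], K[1, 1]
    cx, cy = K[0, 2], K[1, 2]
    # Homogeneous point in the camera frame.
    p_cam = np.array([(u - cx) * z / fx, (v - cy) * z / fy, z, 1.0])
    return (cam_to_world @ p_cam)[:3]

if __name__ == "__main__":
    K = np.array([[100.0, 0.0, 50.0],
                  [0.0, 100.0, 50.0],
                  [0.0, 0.0, 1.0]])
    depth = np.full((100, 100), 2.0)   # toy depth map, 2 m everywhere
    pose = np.eye(4)
    pose[0, 3] = 1.0                   # camera shifted 1 m along world x
    print(unproject_click(75, 50, depth, K, pose))
```

The resulting 3D point could then serve as the spatial prompt for which a caption and bounding box are requested; how LLaVA-3D actually encodes that prompt is not covered here.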

Furthermore, evaluations indicate that LLaVA-3D retains the 2D understanding performance of its predecessor: results on various 2D benchmarks are comparable to those of the original LLaVA model, which supports the claim that 3D features can be integrated without compromising 2D capabilities.

Implications and Future Directions

LLaVA-3D is a significant step toward bridging 2D image analysis and 3D scene understanding, with implications for applications such as autonomous navigation, augmented reality, and robotics, where spatial awareness is crucial. It demonstrates that existing 2D models can be adapted to 3D tasks through efficient representations and training paradigms.

Speculatively, future work might focus on improving LLaVA-3D's adaptability in environments that require real-time interaction or manipulation, such as robotic navigation and control. Incorporating more complex 3D datasets and extending the model to real-world applications are further promising directions for 3D-aware artificial intelligence. The framework sets a precedent for subsequent models that aim to combine multimodal understanding across 2D and 3D.