A Preliminary Evaluation of OpenAI's o1 on PlanBench

The paper "LLMs Still Can't Plan; Can LRMs? A Preliminary Evaluation of OpenAI's o1 on PlanBench" by Karthik Valmeekam, Kaya Stechly, and Subbarao Kambhampati examines the planning capabilities of OpenAI's recent o1 model using the PlanBench benchmark. PlanBench, originally developed in 2022 in response to GPT-3, is a tool for assessing and comparing the planning abilities of LLMs. The paper revisits PlanBench's static test set and reports new results for both traditional LLMs and o1, which the paper refers to as a Large Reasoning Model (LRM).

Methodology and Evaluation

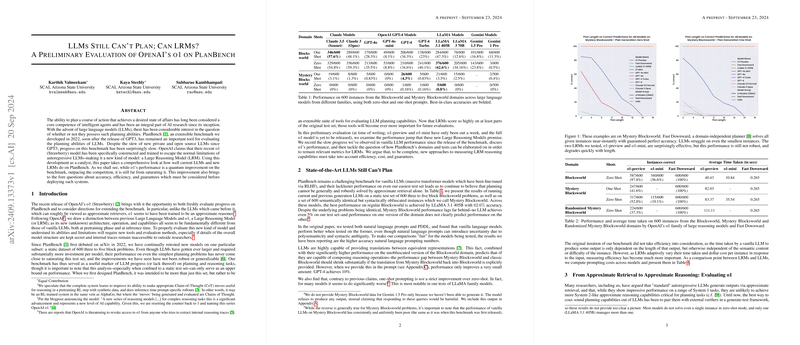

The paper evaluates o1 against other LLMs within the PlanBench framework, comparing accuracy, efficiency, and computational cost. PlanBench consists of structured planning problems, including standard Blocksworld tasks and the more challenging Mystery Blocksworld, in which problem instances are syntactically obfuscated.
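To make the setup concrete, here is a minimal Python sketch of a Blocksworld plan checker, together with a toy renaming that mimics syntactic obfuscation. It is an illustration under stated assumptions, not PlanBench's code: the state encoding, the function names (`apply_action`, `validate_plan`, `obfuscate`, `deobfuscate`), and the scrambled action labels are all invented here, and the actual benchmark uses its own domain definitions and vocabulary.

```python
from typing import Dict, List, Optional, Tuple

# Assumed encoding: `on` maps each placed block to its support
# ("table" or another block); `holding` is the block in the hand, if any.
State = Tuple[Dict[str, str], Optional[str]]
Action = Tuple[str, ...]  # e.g. ("unstack", "a", "b")


def is_clear(on: Dict[str, str], block: str) -> bool:
    """A block is clear when no other block rests on it."""
    return block not in on.values()


def apply_action(state: State, action: Action) -> Optional[State]:
    """Return the successor state, or None if a precondition fails."""
    on, holding = dict(state[0]), state[1]
    name, args = action[0], action[1:]
    if name == "pickup" and len(args) == 1:
        block = args[0]
        if holding is None and on.get(block) == "table" and is_clear(on, block):
            del on[block]
            return on, block
    elif name == "putdown" and len(args) == 1:
        block = args[0]
        if holding == block:
            on[block] = "table"
            return on, None
    elif name == "unstack" and len(args) == 2:
        block, below = args
        if (holding is None and below != "table"
                and on.get(block) == below and is_clear(on, block)):
            del on[block]
            return on, block
    elif name == "stack" and len(args) == 2:
        block, below = args
        if holding == block and below in on and is_clear(on, below):
            on[block] = below
            return on, None
    return None  # unknown action name or unmet precondition


def validate_plan(init: State, goal: Dict[str, str], plan: List[Action]) -> bool:
    """Simulate the plan step by step, then check every goal relation."""
    state = init
    for action in plan:
        successor = apply_action(state, action)
        if successor is None:
            return False
        state = successor
    return all(state[0].get(block) == support for block, support in goal.items())


# Invented labels for illustration only, NOT the benchmark's vocabulary.
OBFUSCATED = {"pickup": "zorp", "putdown": "blim", "stack": "quux", "unstack": "frob"}
PLAIN = {scrambled: plain for plain, scrambled in OBFUSCATED.items()}


def obfuscate(plan: List[Action]) -> List[Action]:
    """Rename action names; the underlying problem structure is unchanged."""
    return [(OBFUSCATED[a[0]],) + a[1:] for a in plan]


def deobfuscate(plan: List[Action]) -> List[Action]:
    """Invert the renaming so the plan can be checked in the plain domain."""
    return [(PLAIN[a[0]],) + a[1:] for a in plan]


init: State = ({"a": "b", "b": "table", "c": "table"}, None)
goal = {"b": "a"}
plan: List[Action] = [
    ("unstack", "a", "b"), ("putdown", "a"), ("pickup", "b"), ("stack", "b", "a"),
]
print(validate_plan(init, goal, plan))
print(obfuscate(plan))
```

The renaming leaves the logical structure of the problem intact, which is why a solver that reasons over the structure should be unaffected by it, while a system that leans on familiar surface vocabulary may not be.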

Key Findings

- Performance on Blocksworld Problems:

  - The o1 model achieves 97.8% accuracy on the standard Blocksworld tasks, compared with 62.6% for LLaMA 3.1 405B.

  - This margin over prior LLMs suggests a shift from approximate retrieval toward more advanced reasoning capabilities.

- Mystery Blocksworld Challenges:

  - Mystery Blocksworld remains difficult. No traditional LLM reaches 5% accuracy on these tasks, while o1 solves 52.8% of them. The gap is attributed to o1's training, which is thought to include a reinforcement learning phase over synthetic data, and it points to more robust problem-solving on obfuscated instances.

- Extended Benchmark Testing:

  - The accuracy of o1 falls sharply as Blocksworld problems grow longer. For problems requiring 20 to 40 planning steps, accuracy drops to 23.63%, which signals a scalability limit in the LRM's reasoning capabilities.

- Handling Unsolvable Instances:

  - The o1 model recognizes unsolvable planning problems only some of the time, correctly flagging 27% of unsolvable Blocksworld instances. It often returns a plan for a problem that has none, which limits its reliability in critical applications.
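The findings above separate two kinds of outcome: solving a solvable instance, and correctly declining to solve an unsolvable one. A minimal Python sketch of how such outcomes could be tallied follows. The record layout, the `score` function, and the toy data are assumptions made for illustration; they are not the paper's evaluation harness and the numbers are not its results.

```python
from collections import Counter
from typing import Dict, Iterable, Tuple

# Assumed record layout (hypothetical):
# (instance_is_solvable, model_claimed_unsolvable, returned_plan_is_valid)
Record = Tuple[bool, bool, bool]


def rate(numerator: int, denominator: int) -> float:
    """Safe ratio that returns 0.0 when there are no instances of a kind."""
    return numerator / denominator if denominator else 0.0


def score(records: Iterable[Record]) -> Dict[str, float]:
    tally: Counter = Counter()
    for solvable, claimed_unsolvable, plan_valid in records:
        if solvable:
            tally["solvable"] += 1
            if claimed_unsolvable:
                tally["wrongly_flagged"] += 1   # gave up on a solvable problem
            elif plan_valid:
                tally["solved"] += 1
        else:
            tally["unsolvable"] += 1
            if claimed_unsolvable:
                tally["correctly_flagged"] += 1
            else:
                tally["bogus_plan"] += 1        # any plan here is necessarily wrong
    return {
        "solve_rate": rate(tally["solved"], tally["solvable"]),
        "wrongly_flagged_rate": rate(tally["wrongly_flagged"], tally["solvable"]),
        "correct_flag_rate": rate(tally["correctly_flagged"], tally["unsolvable"]),
        "bogus_plan_rate": rate(tally["bogus_plan"], tally["unsolvable"]),
    }


# Toy data only: four solvable and four unsolvable instances.
toy_records = [
    (True, False, True), (True, False, True), (True, False, True), (True, True, False),
    (False, True, False), (False, False, False), (False, False, False), (False, False, False),
]
toy_scores = score(toy_records)
print(toy_scores)
```

Reporting the two rates for unsolvable instances separately matters because a model that never flags anything and a model that flags everything can both look acceptable on a single aggregate accuracy number.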

Efficiency, Cost, and Guarantees

A central discussion in the paper is the trade-off between accuracy and cost. The computational cost of o1 is assessed through the number of "reasoning tokens" it generates during inference, a cost metric that does not apply to previous LLMs. The o1 model's compute and monetary costs per instance are significantly higher, which calls into question its practicality for broader applications. For instance, running 100 instances on o1-preview costs \$42.12, compared to \$0.44 for Claude 3.5 (Sonnet).
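The size of that gap is easier to see per instance and as a ratio. The short Python sketch below works through the arithmetic using only the two per-100-instance figures quoted above; the constant and function names are illustrative.

```python
# Costs per 100 instances, as quoted in the text (US dollars).
O1_PREVIEW_PER_100 = 42.12
CLAUDE_SONNET_PER_100 = 0.44


def per_instance(cost_per_100: float) -> float:
    """Convert a cost for 100 instances into a cost for one instance."""
    return cost_per_100 / 100


o1_each = per_instance(O1_PREVIEW_PER_100)          # about $0.42 per instance
claude_each = per_instance(CLAUDE_SONNET_PER_100)   # about $0.0044 per instance
cost_ratio = O1_PREVIEW_PER_100 / CLAUDE_SONNET_PER_100  # roughly 96x

print(f"o1-preview: ${o1_each:.4f} per instance")
print(f"Claude 3.5 Sonnet: ${claude_each:.4f} per instance")
print(f"o1-preview costs about {cost_ratio:.1f}x as much")
```

On these figures, o1-preview is roughly two orders of magnitude more expensive per instance.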

Classical planners such as Fast Downward achieve 100% accuracy in negligible computational time and at a fraction of the cost, which makes plain the trade-off involved in using more general LRMs for planning. Because LRMs are black boxes and cannot provide formal correctness guarantees, this comparison raises concerns about their utility outside the domain of AI research.

Implications and Future Directions

The implications of this paper are both practical and theoretical. Practically, deploying general reasoning models such as o1 in safety-critical domains remains problematic because they lack robustness and guarantees. Theoretically, the performance leap from LLMs to LRMs suggests that reinforcement learning and reasoning-specific training regimes can significantly enhance planning capabilities. These gains, however, must be weighed against rising operational costs and the lack of transparency in model architecture and reasoning processes.

Future research should consider developing more transparent and interpretable LRMs, ensuring safer deployment in real-world applications. Additionally, hybrid models combining LLMs with classical planners or external verification systems (LLM-Modulo systems) may offer a middle ground, yielding high accuracy with reasonable costs and guarantees.
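The hybrid idea mentioned above can be sketched as a generate-and-verify loop: a model proposes a candidate plan, an external checker accepts it or returns a critique, and the critique is fed back for the next attempt. The Python below is a minimal sketch under stated assumptions, not the authors' implementation. The `llm_modulo` function, the `Generator` and `Verifier` signatures, and the stub generator and verifier are all hypothetical; in practice the generator would call a model and the verifier would be a sound plan validator.

```python
from typing import Callable, List, Optional, Tuple

Plan = List[str]
# Hypothetical interfaces:
Generator = Callable[[str, List[str]], Plan]          # (problem, critiques so far) -> candidate
Verifier = Callable[[str, Plan], Tuple[bool, str]]    # (problem, candidate) -> (is_valid, critique)


def llm_modulo(problem: str, generate: Generator, verify: Verifier,
               max_rounds: int = 5) -> Optional[Plan]:
    """Return the first candidate the verifier accepts, or None if the budget runs out."""
    critiques: List[str] = []
    for _ in range(max_rounds):
        candidate = generate(problem, critiques)
        is_valid, critique = verify(problem, candidate)
        if is_valid:
            return candidate      # correctness rests on the verifier, not the generator
        critiques.append(critique)
    return None


# Stubs standing in for a model and a plan validator (illustration only).
TARGET: Plan = ["unstack a b", "putdown a", "pickup b", "stack b a"]


def stub_generate(problem: str, critiques: List[str]) -> Plan:
    # First attempt is wrong; after one critique the stub "repairs" its plan.
    return TARGET if critiques else ["pickup b"]


def stub_verify(problem: str, candidate: Plan) -> Tuple[bool, str]:
    if candidate == TARGET:
        return True, ""
    return False, "step 1 fails: block b is not clear"


result = llm_modulo("put b on a", stub_generate, stub_verify)
print(result)
```

The design point is that any plan returned has passed the external check, so the guarantee comes from the verifier while the model only supplies candidates; the round budget caps the cost of repeated model calls.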

In conclusion, while the o1 model shows substantial improvements over traditional LLMs on planning tasks, particularly on standard problems like Blocksworld, its performance on complex, obfuscated, and unsolvable tasks underscores the necessity for further advancements in AI planning technology. This paper provides a critical snapshot of the current state of planning capabilities in large models and sets the stage for subsequent explorations into efficient, reliable, and scalable AI reasoning systems.