LLMs as World Models via Action Precondition and Effect Knowledge

The paper "Making Large Language Models into World Models with Precondition and Effect Knowledge" by Xie et al. explores whether LLMs can function as world models when combined with synthetic data generation and fine-tuning. The work is motivated by the need for intelligent agents to reason about how actions change the world states they operate in, a task traditionally handled by world models.

Key Contributions

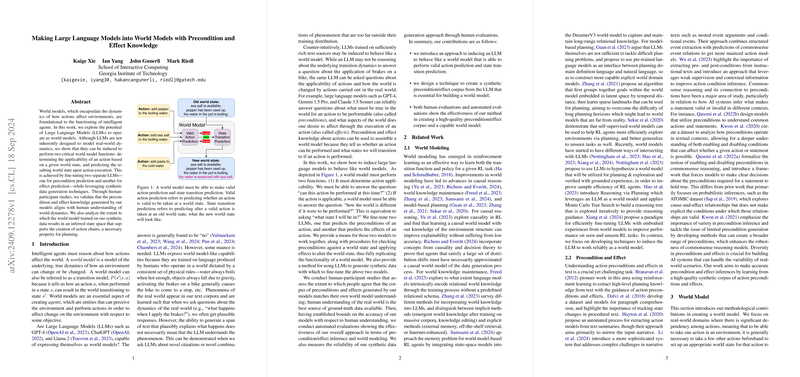

The core innovation of this paper lies in demonstrating that LLMs, whilst not inherently designed as models of real-world dynamics, can be induced to perform functions critical to world models. These functions include:

- Action Validity Determination: Predicting whether an action is applicable in a given world state.

- State Transition Prediction: Forecasting the new world state resulting from an action.
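The two functions can be illustrated with a minimal STRIPS-style sketch. It assumes a world state is a set of fact strings and that each action lists its preconditions and effects explicitly. This symbolic version is not the paper's method: in Xie et al.'s system the preconditions and effects are inferred by LLMs, and checking them against a state relies on semantic matching rather than exact set comparison. The names `Action`, `is_valid`, and `transition` are hypothetical.

```python
from dataclasses import dataclass

# Hypothetical symbolic representation: a state is a set of fact strings, and
# an action carries hand-written preconditions and effects (add and delete).
@dataclass(frozen=True)
class Action:
    name: str
    preconditions: frozenset[str]
    add_effects: frozenset[str]
    del_effects: frozenset[str] = frozenset()

def is_valid(action: Action, state: frozenset[str]) -> bool:
    """Action validity: every precondition must already hold in the state."""
    return action.preconditions <= state

def transition(action: Action, state: frozenset[str]) -> frozenset[str]:
    """State transition: drop deleted facts, then add the new ones."""
    if not is_valid(action, state):
        raise ValueError(f"{action.name!r} is not applicable in this state")
    return (state - action.del_effects) | action.add_effects

# Usage: boiling is invalid until the kettle has been filled.
kitchen = frozenset({"kettle is empty", "person is in kitchen"})
fill = Action(
    "fill kettle",
    preconditions=frozenset({"kettle is empty", "person is in kitchen"}),
    add_effects=frozenset({"kettle has water"}),
    del_effects=frozenset({"kettle is empty"}),
)
boil = Action(
    "boil water",
    preconditions=frozenset({"kettle has water"}),
    add_effects=frozenset({"water is hot"}),
)
print(is_valid(boil, kitchen))    # False
after_fill = transition(fill, kitchen)
print(is_valid(boil, after_fill))  # True
```

An LLM-based world model replaces the hand-written sets with inferred ones, which is what lets it cover actions nobody has annotated in advance.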

Methodology

Xie et al. developed a method to fine-tune LLMs like GPT-4 for two separate tasks: precondition prediction and effect prediction. Their approach uses synthetic data generation to craft high-quality precondition and effect datasets, enabling the LLMs to learn the dependencies between actions and world states.
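Because precondition prediction and effect prediction are trained as separate tasks, each annotated action can yield one training example per task. The sketch below assumes a hypothetical record format and a hypothetical prompt template (`Action:` followed by `Preconditions:` or `Effects:`); the paper's actual data schema and prompts are not reproduced here.

```python
import json

# Hypothetical annotated records, standing in for a synthetic corpus.
records = [
    {
        "action": "pour the hot water into the mug",
        "preconditions": ["the water is hot", "the person is holding the kettle"],
        "effects": ["the mug contains hot water", "the kettle is empty"],
    },
]

def build_datasets(recs: list[dict]) -> tuple[list[dict], list[dict]]:
    """Split annotated actions into two separate fine-tuning datasets."""
    pre_data, eff_data = [], []
    for rec in recs:
        # Precondition task: action text in, precondition list out.
        pre_data.append({
            "prompt": f"Action: {rec['action']}\nPreconditions:",
            "completion": " " + "; ".join(rec["preconditions"]),
        })
        # Effect task: the same action text in, effect list out.
        eff_data.append({
            "prompt": f"Action: {rec['action']}\nEffects:",
            "completion": " " + "; ".join(rec["effects"]),
        })
    return pre_data, eff_data

pre_data, eff_data = build_datasets(records)
for example in pre_data:
    print(json.dumps(example))  # one JSONL line per precondition example
```

Keeping the two datasets apart mirrors the two-model design: each fine-tuned model sees only the input-output mapping for its own task.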

- Precondition and Effect Inference: The team fine-tuned two LLMs, one for predicting action preconditions and another for predicting action effects. Both were trained on a synthetic dataset generated by GPT-4 with a novel global-local prompting technique, designed to produce action plans with substantial action chaining, that is, plans in which the effects of earlier actions satisfy the preconditions of later ones.

- Semantic State Matching: Two GPT-4-based modules handle the required semantic matching: one determines whether the inferred preconditions are satisfied by the current world state, and the other updates the world state according to the inferred effects.
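Taken together, the four components can form a single world-model step: infer preconditions, check them against the state, and, if they hold, infer effects and update the state. The sketch below is an assumption about how such a step could be wired, not the authors' code. Every module is a plain function, and the fine-tuned LLMs and GPT-4 matchers are replaced by hypothetical stand-ins (dictionary lookups, exact string matching, and a toy convention in which an effect written as "not X" deletes fact X), so it shows only the control flow.

```python
from typing import Callable

State = list[str]  # world state as a list of natural-language facts

# Hypothetical module signatures. In the paper, the predictors are fine-tuned
# LLMs and the matcher/updater are GPT-4-based semantic modules.
PredictFn = Callable[[str], list[str]]          # action -> facts
MatchFn = Callable[[list[str], State], bool]    # preconditions, state -> satisfied?
UpdateFn = Callable[[list[str], State], State]  # effects, state -> new state

def step(action: str, state: State, predict_pre: PredictFn, predict_eff: PredictFn,
         match: MatchFn, update: UpdateFn) -> tuple[bool, State]:
    """One world-model step: validity check, then state transition if valid."""
    preconditions = predict_pre(action)
    if not match(preconditions, state):
        return False, state  # invalid action: state is left unchanged
    effects = predict_eff(action)
    return True, update(effects, state)

# Toy stand-ins (hypothetical): lookup tables instead of fine-tuned LLMs.
TOY_PRE = {"boil water": ["the kettle has water"]}
TOY_EFF = {"boil water": ["the water is hot"]}

def exact_match(preconditions: list[str], state: State) -> bool:
    # Exact string membership; the paper uses semantic matching instead.
    return all(p in state for p in preconditions)

def naive_update(effects: list[str], state: State) -> State:
    # Toy convention: an effect "not X" deletes fact X; other effects are added.
    new_state = [f for f in state if f"not {f}" not in effects]
    new_state += [e for e in effects if not e.startswith("not ") and e not in new_state]
    return new_state

ok, new_state = step(
    "boil water",
    ["the kettle has water"],
    predict_pre=lambda a: TOY_PRE[a],
    predict_eff=lambda a: TOY_EFF[a],
    match=exact_match,
    update=naive_update,
)
print(ok, new_state)  # True ['the kettle has water', 'the water is hot']
```

Swapping `exact_match` and `naive_update` for LLM-backed functions with the same signatures would leave `step` unchanged, which is one reason to keep the modules separate.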

Evaluation

The effectiveness of this approach was validated through extensive human-participant studies and automated evaluations. Key findings include:

- Corpus Quality: The global-local prompting technique produced a corpus with substantial action chaining and high reliability. Human evaluations confirmed that the generated action preconditions and effects were reasonable.

- Inference Accuracy: The fine-tuned LLMs performed strongly on metrics such as F1, BLEU-2/3, ROUGE-L, and Sentence Mover's Similarity (SMS), suggesting that they learned to infer precondition and effect knowledge accurately from the synthetic data.

- World Model Performance: The LLM-based world model reliably performed valid action prediction and state transition prediction, as verified by both automatic metrics and human evaluation, indicating close agreement with human judgments about how actions change the world.
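Of the metrics listed above, F1 is the simplest to illustrate: it scores the token overlap between generated and reference precondition or effect text. The sketch below uses the common SQuAD-style formulation with lowercasing and whitespace tokenization; this is an assumption, since the exact tokenization and aggregation Xie et al. used may differ. BLEU, ROUGE-L, and SMS need dedicated implementations and are not shown.

```python
from collections import Counter

def token_f1(prediction: str, reference: str) -> float:
    """Token-overlap F1 between a predicted and a reference string."""
    pred_tokens = prediction.lower().split()
    ref_tokens = reference.lower().split()
    # Multiset intersection counts each shared token at most min(count) times.
    common = Counter(pred_tokens) & Counter(ref_tokens)
    overlap = sum(common.values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred_tokens)
    recall = overlap / len(ref_tokens)
    return 2 * precision * recall / (precision + recall)

print(token_f1("the kettle has water", "the kettle contains water"))  # 0.75
```

Here three of four tokens match in each direction, so precision and recall are both 0.75 and so is their harmonic mean.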

Implications

Practical Implications

This work is relevant to fields such as reinforcement learning, autonomous agents, and simulation environments. Using LLMs as world models could streamline the development of intelligent systems that reason about real-world dynamics, with potential benefits for applications such as robotics, game AI, and virtual assistants.

Theoretical Implications

This research advances the theoretical understanding of leveraging LLMs in contexts beyond traditional language tasks. It illustrates the adaptability of LLMs in encoding and reasoning about structured knowledge in a form that supports dynamic planning and decision-making processes.

Future Directions

The paper opens several avenues for future exploration:

- Broader Domain Adaptation: Extending the approach to a wider range of domains, particularly those with more complex and less structured action chains.

- Enhanced Causal Reasoning: Integrating causal inference mechanisms to enhance the intuitiveness and accuracy of the world models.

- Interactive Agents: Developing interactive agents that use these LLM-based world models to navigate and interact with live environments in real-time.

Conclusion

The work by Xie et al. illustrates a novel methodology for transforming LLMs into functional world models capable of predicting action validity and state transitions through precondition and effect knowledge. This approach represents a convergence of LLMs and world modeling, providing a robust foundation for future research and application in intelligent agent development.