Mixture of Diverse Size Experts

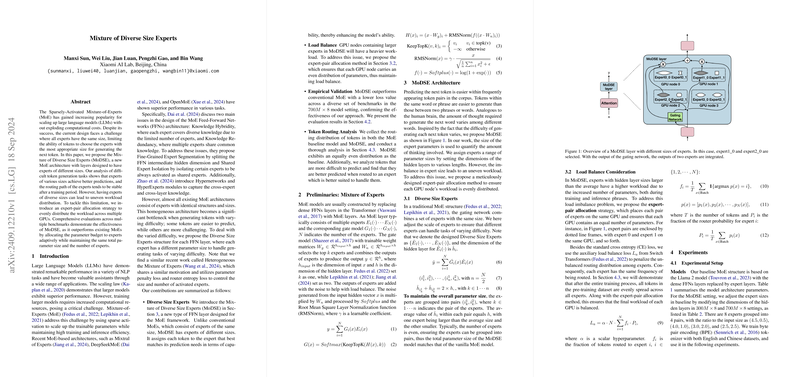

The paper "Mixture of Diverse Size Experts" introduces a new architecture for the Mixture-of-Experts (MoE) framework. Traditional MoE layers use experts of identical size, which may limit how efficiently they handle tokens of varying prediction difficulty. The paper proposes Mixture of Diverse Size Experts (MoDSE), which addresses this limitation by placing experts of different sizes within a single MoE layer, so that the model's capacity can be matched to varied prediction demands.

Contributions

The paper's main contributions include:

- Diverse Size Experts: Introduction of the MoDSE architecture where FFN layers consist of experts with varying sizes. This design allows each token to be routed to the expert best suited for its prediction, thereby improving model efficacy.

- Load Balance Strategy: Proposal of an expert-pair allocation method that keeps the workload evenly distributed across GPU nodes, which is necessary because the experts differ in size.

- Empirical Validation: Demonstration of MoDSE's effectiveness over conventional MoE models through comprehensive evaluations across multiple benchmarks.

- Token Routing Analysis: An in-depth investigation into how tokens are distributed among experts in both MoE and MoDSE settings, revealing that by the end of training MoDSE reaches a routing distribution as balanced as that of the baseline MoE.
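To make the first contribution concrete, the following is a minimal pure-Python sketch of an MoE layer whose experts have different hidden widths. It is not the paper's implementation: the top-2 routing, the ReLU activation, the hidden widths, and all function names (`make_expert`, `modse_layer`, and so on) are assumptions chosen for illustration. The widths are picked so that each pair of experts sums to the same total, which is one plausible way to keep the parameter count equal to a homogeneous baseline.

```python
import math
import random

def make_expert(d_model, d_hidden, rng):
    """One FFN expert: two weight matrices whose hidden width may differ per expert."""
    w_in = [[rng.gauss(0, 0.1) for _ in range(d_hidden)] for _ in range(d_model)]
    w_out = [[rng.gauss(0, 0.1) for _ in range(d_model)] for _ in range(d_hidden)]
    return w_in, w_out

def matvec(x, w):
    """Compute x @ w for a vector x and a row-major matrix w."""
    return [sum(x[i] * w[i][j] for i in range(len(x))) for j in range(len(w[0]))]

def expert_forward(x, expert):
    """Apply the expert FFN: project up, apply ReLU (an assumption), project back."""
    w_in, w_out = expert
    hidden = [max(0.0, h) for h in matvec(x, w_in)]
    return matvec(hidden, w_out)

def softmax(scores):
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def modse_layer(x, router_w, experts, top_k=2):
    """Route one token to its top_k experts and mix their outputs by gate weight."""
    probs = softmax(matvec(x, router_w))
    chosen = sorted(range(len(experts)), key=lambda e: probs[e], reverse=True)[:top_k]
    norm = sum(probs[e] for e in chosen)
    out = [0.0] * len(x)
    for e in chosen:
        y = expert_forward(x, experts[e])
        out = [o + (probs[e] / norm) * yi for o, yi in zip(out, y)]
    return out, chosen

rng = random.Random(0)
d_model = 8
# Hypothetical widths: 4 pairs (24+8, 20+12, 18+14, 16+16), each summing to 32.
hidden_sizes = [24, 8, 20, 12, 18, 14, 16, 16]
experts = [make_expert(d_model, h, rng) for h in hidden_sizes]
router_w = [[rng.gauss(0, 0.1) for _ in hidden_sizes] for _ in range(d_model)]
token = [rng.gauss(0, 1) for _ in range(d_model)]
output, chosen = modse_layer(token, router_w, experts)

# Total expert parameters match 8 homogeneous experts of width 16.
total_params = sum(2 * d_model * h for h in hidden_sizes)
baseline_params = 8 * 2 * d_model * 16
```

The router is unchanged from a standard MoE layer; only the expert widths differ, so the output dimension stays `d_model` regardless of which experts are selected.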

Experimental Setup

The experiments are conducted on MoE models derived from the Llama 2 framework. Two model configurations are explored; in each, 8 experts are divided into 4 pairs with different size ratios. Training uses the Adam optimizer, cosine learning rate scheduling, and the ZeRO optimization framework, and runs on NVIDIA A800 GPU clusters.
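For readers unfamiliar with cosine learning rate scheduling, a minimal sketch follows. The function name `cosine_lr`, the optional linear warmup, and every hyperparameter value are assumptions for illustration; the summary above does not state the schedule's actual settings.

```python
import math

def cosine_lr(step, total_steps, peak_lr, min_lr=0.0, warmup_steps=0):
    """Learning rate at `step`: optional linear warmup, then cosine decay to min_lr."""
    if warmup_steps > 0 and step < warmup_steps:
        return peak_lr * (step + 1) / warmup_steps
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    progress = min(1.0, max(0.0, progress))  # clamp so steps past the end stay at min_lr
    return min_lr + 0.5 * (peak_lr - min_lr) * (1.0 + math.cos(math.pi * progress))

# Hypothetical values: 100 steps, peak learning rate 1e-3, no warmup.
schedule = [cosine_lr(s, 100, 1e-3) for s in range(101)]
```

The rate starts at the peak, falls slowly at first, fastest around the midpoint, and flattens out again as it approaches the minimum.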

Results and Analysis

Performance

Table 1 compares MoDSE and baseline MoE models on downstream tasks. MoDSE scores higher on each benchmark listed below (the higher score in each pair is MoDSE's):

- AGIEval: 28.1 vs. 26.2 (Acc.)

- MMLU: 29.9 vs. 26.5 (Acc.)

- INTENT: 16.5 vs. 13.6 (Acc.)

- GSM8K: 7.7 vs. 5.9 (EM)

- LAMBADA: 38.9 vs. 36.8 (EM)

- Others show similarly improved results.
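The size of each improvement is easier to compare as an absolute gain. The short sketch below computes it from the scores listed above, assuming the first number in each pair is the MoDSE score and the second is the baseline; the variable names are illustrative.

```python
# Scores copied from the list above; the first value in each pair is assumed to be MoDSE.
scores = {
    "AGIEval": (28.1, 26.2),
    "MMLU": (29.9, 26.5),
    "INTENT": (16.5, 13.6),
    "GSM8K": (7.7, 5.9),
    "LAMBADA": (38.9, 36.8),
}

# Absolute gain in points, rounded to one decimal to hide floating-point noise.
gains = {name: round(modse - base, 1) for name, (modse, base) in scores.items()}

for name, gain in sorted(gains.items(), key=lambda item: item[1], reverse=True):
    print(f"{name}: +{gain} points")
```

On these five benchmarks the gains range from 1.8 points (GSM8K) to 3.4 points (MMLU).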

Both training and validation loss curves (Figure 2) confirm that MoDSE converges earlier and maintains lower cross-entropy loss values compared to baseline models, indicating more efficient training dynamics.

Token Routing Analysis

The balance of token routing distributions is crucial for the model's efficiency. Initial epochs show uneven token distributions, particularly for larger experts. However, by the end of training, MoDSE achieves a more balanced distribution similar to the baseline MoE model (Figure 3).
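One simple way to quantify routing balance is to measure the share of routed token slots that each expert receives and compare the largest share with the uniform share. The sketch below does this on made-up assignments; the function names, the imbalance metric, and the data are assumptions for illustration and are not taken from the paper.

```python
from collections import Counter

def routing_distribution(assignments, num_experts):
    """Fraction of routed token slots received by each expert."""
    counts = Counter(e for token_experts in assignments for e in token_experts)
    total = sum(counts.values())
    if total == 0:
        return [0.0] * num_experts
    return [counts.get(e, 0) / total for e in range(num_experts)]

def imbalance(distribution):
    """Largest share divided by the uniform share; 1.0 means perfectly balanced."""
    return max(distribution) * len(distribution)

# Hypothetical top-2 assignments for four tokens over four experts.
early = [(0, 1), (0, 2), (0, 1), (0, 3)]  # expert 0 receives every token
late = [(0, 1), (2, 3), (1, 2), (3, 0)]   # every expert receives two slots

early_score = imbalance(routing_distribution(early, 4))
late_score = imbalance(routing_distribution(late, 4))
```

Here `early_score` is 2.0 (one expert carries twice its fair share) and `late_score` is 1.0, mirroring the move from uneven to balanced routing described above.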

Importantly, the analysis of difficult-to-predict tokens shows a significant improvement in the MoDSE setting. These tokens are better handled by larger experts, which supports the claim that diverse expert sizes enhance model capability on challenging predictions (Table 2).

Implications

Practical Implications:

- The introduction of diverse expert sizes enables better handling of varied prediction tasks, leading to improved performance in LLM benchmarks.

- The expert-pair allocation strategy ensures a balanced computational load across GPU nodes, which makes MoDSE architectures more practical in large-scale training environments.
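The expert-pair allocation idea can be sketched as follows. This summary does not spell out the paper's exact procedure, so the code shows one plausible reading: pair the largest remaining expert with the smallest, then place each pair on the least-loaded node. The function names, the greedy placement, and the expert sizes are all assumptions, and an even number of experts is assumed.

```python
def pair_experts(expert_sizes):
    """Pair the largest remaining expert with the smallest remaining one."""
    order = sorted(range(len(expert_sizes)), key=lambda e: expert_sizes[e])
    return [(order[-1 - i], order[i]) for i in range(len(order) // 2)]

def allocate_pairs(expert_sizes, num_nodes):
    """Greedily assign each (large, small) pair to the currently lightest node."""
    loads = [0] * num_nodes
    placement = {n: [] for n in range(num_nodes)}
    for big, small in pair_experts(expert_sizes):
        node = min(range(num_nodes), key=lambda n: loads[n])
        placement[node].extend([big, small])
        loads[node] += expert_sizes[big] + expert_sizes[small]
    return placement, loads

# Hypothetical hidden widths for 8 experts forming 4 complementary pairs.
sizes = [24, 8, 20, 12, 18, 14, 16, 16]
placement, loads = allocate_pairs(sizes, num_nodes=4)
```

With these sizes every node ends up holding one pair with a combined width of 32, so the per-node load is identical even though individual experts differ by a factor of three.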

Theoretical Implications:

- The concept of aligning expert sizes with token prediction difficulties provides a new direction for optimizing MoE architectures.

- Future research could investigate further diversification in expert capacities and their impact on different NLP tasks.

Conclusion and Future Developments

The MoDSE architecture offers a compelling enhancement to existing MoE models by incorporating diverse expert sizes to better manage tasks of varying difficulty. This results in improved model performance while maintaining computational efficiency. Future work could extend this architecture to larger models and investigate its impact on even more diversified tasks and datasets. Continuing to refine load balancing strategies and exploring other aspects of heterogeneous expert collaboration remains a promising area of research.