Qwen2-VL: Enhancing Vision-Language Model's Perception of the World at Any Resolution

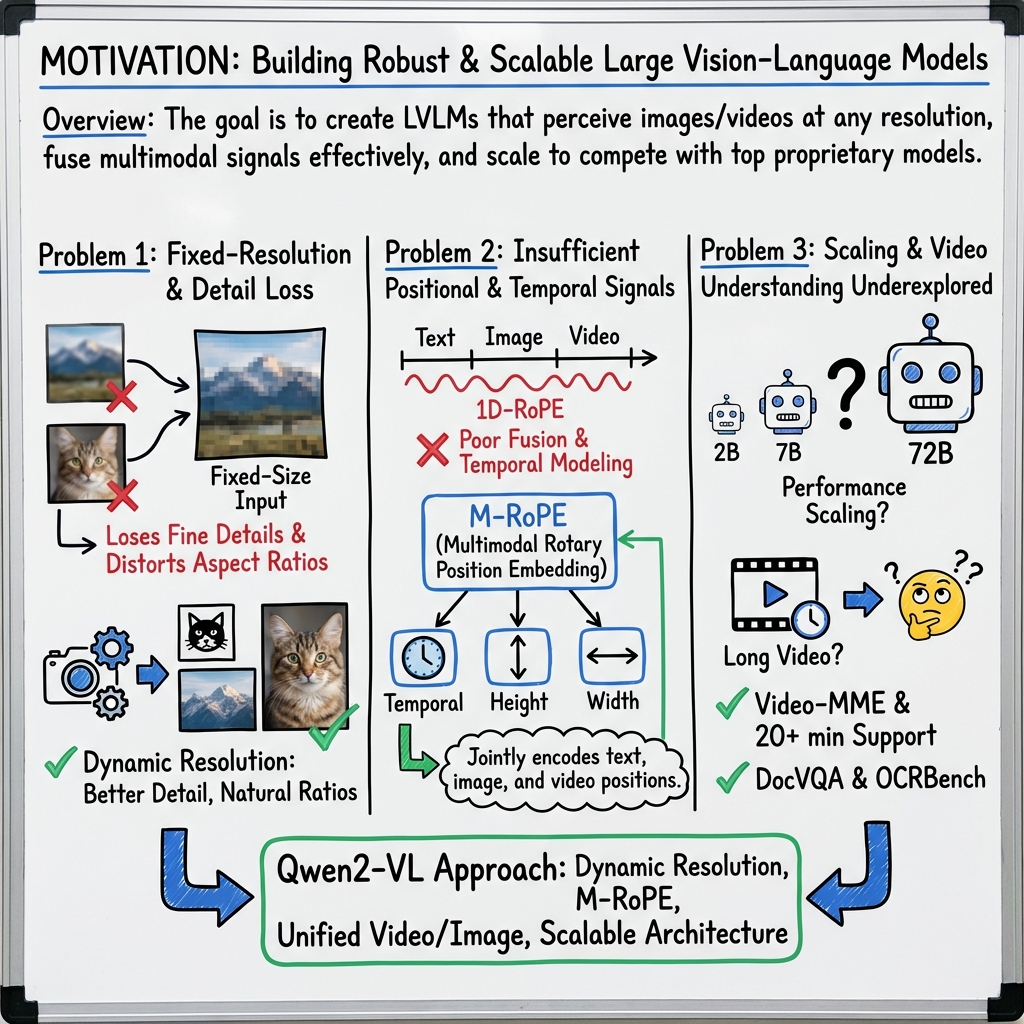

Abstract: We present the Qwen2-VL Series, an advanced upgrade of the previous Qwen-VL models that redefines the conventional predetermined-resolution approach in visual processing. Qwen2-VL introduces the Naive Dynamic Resolution mechanism, which enables the model to dynamically process images of varying resolutions into different numbers of visual tokens. This approach allows the model to generate more efficient and accurate visual representations, closely aligning with human perceptual processes. The model also integrates Multimodal Rotary Position Embedding (M-RoPE), facilitating the effective fusion of positional information across text, images, and videos. We employ a unified paradigm for processing both images and videos, enhancing the model's visual perception capabilities. To explore the potential of large multimodal models, Qwen2-VL investigates the scaling laws for large vision-language models (LVLMs). By scaling both the model size (with versions at 2B, 8B, and 72B parameters) and the amount of training data, the Qwen2-VL Series achieves highly competitive performance. Notably, the Qwen2-VL-72B model achieves results comparable to leading models such as GPT-4o and Claude3.5-Sonnet across various multimodal benchmarks, outperforming other generalist models. Code is available at https://github.com/QwenLM/Qwen2-VL.

Explain it Like I'm 14

Overview: What is this paper about?

This paper introduces Qwen2-VL, a family of AI models that can understand both language and visuals (like pictures and videos). The main idea is to help the model “see” the world more like humans do—at any resolution—so it can notice tiny details in high-quality images, make sense of long videos, read text inside images (even in many languages), and even act as a visual assistant (for example, operating a phone screen or guiding a robot).

What questions or goals did the researchers have?

To make this easier to follow, here are the main goals of the paper, written in everyday terms:

- Can we build a vision-language model that handles images at any size without losing details?

- Can we teach the model to understand the “where” and “when” of things in text, images, and videos (positions and time) in a unified way?

- Can one model handle both images and videos well, instead of treating video as a totally separate thing?

- What happens when we scale up the size of the model and the amount of training data—does performance keep improving?

- Can the model read and understand text in images (including many languages), solve visual math problems, and act as a visual agent (e.g., operate a phone screen)?

How does the model work? Methods explained simply

Think of understanding visuals like solving a jigsaw puzzle: you break an image into pieces and then figure out what each piece shows and how pieces fit together.

Here’s how Qwen2-VL does that:

Models of different sizes

There are three versions:

- Qwen2-VL-2B: small and efficient (good for devices).

- Qwen2-VL-7B: medium, strong performance for many tasks.

- Qwen2-VL-72B: very large, top performance for complex tasks.

All three use the same “eye” (a Vision Transformer with ~675 million parameters) paired with different “brains” (LLMs of 1.5B, 7.6B, or 72B parameters).
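As a quick back-of-the-envelope check, the sketch below adds the shared vision encoder to each language model using only the figures quoted above. The summed total for the middle model lands near 8B, which is consistent with the "8B" label in the abstract, although this summary does not say how the labels were rounded. The function and variable names are illustrative.

```python
# Parameter counts in millions, taken from the figures quoted in the text.
VISION_ENCODER_M = 675
LANGUAGE_MODELS_M = {
    "Qwen2-VL-2B": 1_500,
    "Qwen2-VL-7B": 7_600,
    "Qwen2-VL-72B": 72_000,
}

def total_params_m(name: str) -> int:
    """Vision encoder plus language model, in millions of parameters."""
    return VISION_ENCODER_M + LANGUAGE_MODELS_M[name]

for name in LANGUAGE_MODELS_M:
    print(f"{name}: about {total_params_m(name) / 1000:.1f}B parameters in total")
```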

Naive Dynamic Resolution: seeing clearly at any size

Most models force images to a fixed size (like shrinking a big photo to 224×224), which can blur away details. Qwen2-VL avoids that:

- It accepts images of any resolution and turns them into a flexible number of “visual tokens.”

- Visual tokens are like tiny tiles cut from the image; more tiles mean more detail.

- To keep things efficient, it gently “compresses” nearby tiles together (think of bundling 4 small tiles into 1), so the LLM isn’t overloaded.

This helps the model keep important details from high-res images instead of squishing them away.
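To make the "more pixels, more tokens" idea concrete, here is a minimal sketch of how the token count could grow with image size. It assumes a tile (patch) size of 14 pixels, which this summary does not state, and it rounds each side up to a whole number of bundled tiles, which is a simplification. The marker tokens that wrap an image are not counted.

```python
import math

PATCH = 14  # assumed tile size in pixels (not stated in this summary)
MERGE = 2   # a 2x2 group of tiles (4 tiles) is bundled into 1 token

def visual_token_count(height: int, width: int) -> int:
    """Approximate number of visual tokens handed to the language model."""
    unit = PATCH * MERGE  # one bundled token covers a 28x28 pixel area
    # Simplifying assumption: round each side up to whole bundled tiles.
    rows = math.ceil(height / unit)
    cols = math.ceil(width / unit)
    return rows * cols

for h, w in [(224, 224), (448, 448), (1080, 1920)]:
    print(f"{h}x{w} image -> {visual_token_count(h, w)} visual tokens")
```

Under these assumptions a 224×224 image yields 64 tokens, and doubling both sides quadruples the count, so detail is kept at the cost of a longer sequence.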

M-RoPE: understanding position and time across modalities

“Position embeddings” tell a model where things are. Traditional LLMs use 1D positions (like the order of words). But images are 2D (height and width), and videos add time (3D).

M-RoPE (Multimodal Rotary Position Embedding) is like giving every token a GPS:

- For text: positions work as usual (in one dimension).

- For images: each tile gets coordinates for height and width.

- For videos: each frame also gets a time stamp, so the model knows the order of events.

This helps the model track where things are in space and when they happen.
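The "GPS" idea can be sketched as a (time, height, width) triple per token. This is a simplified illustration, not the released implementation: the helper names are hypothetical, and the rule that each new segment starts one past the largest ID used so far is an assumption made for this sketch.

```python
def text_positions(start: int, length: int):
    """Text tokens: all three components share the same 1D index."""
    return [(start + i, start + i, start + i) for i in range(length)]

def vision_positions(start: int, frames: int, rows: int, cols: int):
    """Image (frames=1) or video tokens: one (time, height, width) triple per tile."""
    return [
        (start + t, start + r, start + c)
        for t in range(frames)
        for r in range(rows)
        for c in range(cols)
    ]

def next_start(positions):
    """Assumed rule: the next segment begins after the largest ID used so far."""
    return max(max(p) for p in positions) + 1

# Three text tokens, then a 2x2-tile image, then two more text tokens.
seq = text_positions(0, 3)
seq += vision_positions(next_start(seq), frames=1, rows=2, cols=2)
seq += text_positions(next_start(seq), 2)

for position in seq:
    print(position)
```

In this example the four image tiles share one time value while their height and width values differ. A video would use `frames` greater than 1, so the time value would increase from frame to frame.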

Unified image and video understanding

Instead of treating videos as totally different, Qwen2-VL trains on images and videos together:

- It samples videos at 2 frames per second and uses “3D convolution,” which you can imagine as looking at small cubes across frames, not just flat patches.

- This lets the model understand motion and longer clips without blowing up memory.
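A rough sketch of the sampling step follows: pick frames at about 2 per second, then group them so each "cube" spans more than one frame. The group depth of 2 is an assumption not stated in this summary, and the helper names are hypothetical. Padding by repeating the last frame is also just one simple way to fill an incomplete group.

```python
def sample_frame_indices(native_fps: float, duration_s: float, target_fps: float = 2.0):
    """Indices of the frames kept when sampling a clip at target_fps."""
    total = int(native_fps * duration_s)
    step = native_fps / target_fps
    count = int(duration_s * target_fps)
    return [min(int(i * step), total - 1) for i in range(count)]

def group_frames(indices, depth: int = 2):
    """Group sampled frames into temporal chunks of `depth` frames (assumed depth)."""
    padded = list(indices)
    while len(padded) % depth:
        padded.append(padded[-1])  # repeat the last frame to fill the final chunk
    return [tuple(padded[i:i + depth]) for i in range(0, len(padded), depth)]

indices = sample_frame_indices(native_fps=30, duration_s=2)
print(indices)                # frames kept from a 2-second, 30 fps clip
print(group_frames(indices))  # chunks that a depth-2 "cube" would cover
print(group_frames([7]))      # a single still image treated as a repeated frame
```

Grouping frames this way halves the number of temporal steps the model has to process, which is one reason longer clips stay affordable.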

Training process (in three stages)

The model learns in steps, like school:

- Vision-only pretraining: it learns to see and associate images with text (like reading signs in photos).

- Full-model pretraining: both vision and language parts learn together on lots of mixed tasks (e.g., visual Q&A, OCR, videos).

- Instruction tuning: it practices following instructions in a chat format (including multimodal conversations with images and videos).

Special data formats use simple tokens to mark where images start and end, how to refer to boxes in images, and how to run “agent” actions (like tapping a phone screen).
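As an illustration of such a format, the sketch below builds one training string that marks an image, a referred object, and its bounding box. The exact token strings and the 0 to 999 coordinate scale are assumptions for this example and should be checked against the released tokenizer and paper. The function names are hypothetical.

```python
def normalize_box(box, width: int, height: int, scale: int = 1000):
    """Map pixel coordinates to an assumed integer range of 0 to scale - 1."""
    x1, y1, x2, y2 = box

    def norm(value: int, size: int) -> int:
        return min(value * scale // size, scale - 1)

    return (norm(x1, width), norm(y1, height)), (norm(x2, width), norm(y2, height))

def grounding_sample(image_name: str, phrase: str, box, width: int, height: int) -> str:
    """Build one string with image, object reference, and box markers (assumed tokens)."""
    (x1, y1), (x2, y2) = normalize_box(box, width, height)
    return (
        f"<|vision_start|>{image_name}<|vision_end|>"
        f"<|object_ref_start|>{phrase}<|object_ref_end|>"
        f"<|box_start|>({x1},{y1}),({x2},{y2})<|box_end|>"
    )

sample = grounding_sample("Picture1.jpg", "the dog", (100, 50, 300, 200), width=1000, height=500)
print(sample)
```

Normalizing coordinates to a fixed range means the same box description works whatever the original image resolution was.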

What did they find? Main results and why they matter

Here are the key takeaways, explained plainly:

- Stronger at reading documents and text inside images (OCR), including charts and diagrams:

  - The large 72B model sets or matches state-of-the-art results on DocVQA, ChartQA, InfoVQA, TextVQA, and OCRBench.

  - It’s especially good at high-resolution documents where tiny text matters.

- Multilingual image text understanding:

  - The model reads text in many languages (like Japanese, Korean, French, German, Italian, Russian, Vietnamese, Arabic) inside images.

  - On a public multilingual benchmark (MTVQA) and internal tests, it performs better than most general models, often beating GPT-4o, especially for non-English OCR.

- Better video understanding:

  - It does very well on video tests, including understanding longer clips and complex motion (e.g., EgoSchema, MVBench).

  - It can handle videos over 20 minutes, which is rare.

- Strong agent abilities:

  - It can act on visual input: operate a phone interface by tapping/swiping, play simple card games, help navigate virtual spaces, and assist with robot-like tasks.

  - On phone UI tests, it matches the right action and clicks the right spot more often than previous models.

- Scaling helps:

  - Increasing model size and training data improves performance across tasks.

  - The biggest model (72B) performs comparably to leading closed models (like GPT-4o and Claude 3.5 Sonnet) on many multimodal benchmarks, and often beats other open general models.

- Limitations:

  - On some very tough academic-style tests (like MMMU), there’s still room to improve.

  - For extremely long videos, they limited frames during testing, which may cap performance.

Why does this research matter?

In simple terms, this work helps AI see and think more like we do:

- It preserves tiny visual details by handling any image resolution, which is crucial for reading documents, maps, receipts, or fine-grained science diagrams.

- It uses a unified way to track “where” (space) and “when” (time), helping the model understand videos and multi-image stories.

- It supports real-world tasks: operating devices visually, guiding robots, and helping users with instructions based on what the AI “sees.”

- It works across languages, making it more useful for global users and multicultural content.

- It shows that growing models and data for vision-language tasks pays off, pushing the field toward more capable, general-purpose multimodal AI.

In the future, models like Qwen2-VL could:

- Help students read and understand tricky diagrams and charts.

- Assist workers by reading documents or analyzing videos (e.g., safety checks, tutorials).

- Power intelligent assistants that can interact visually with devices and environments.

- Improve accessibility by reading signs and documents in many languages and formats.

Overall, Qwen2-VL is a step toward AI that can truly “look, read, and act” in the world—accurately and at any resolution.
