Finetuning LLMs to Emit Linguistic Expressions of Uncertainty

The paper presents a method for aligning the confidence that LLMs express with the actual accuracy of their predictions, using supervised fine-tuning on uncertainty-augmented data. The approach targets calibration: fine-tuned models generate linguistic expressions of uncertainty alongside their answers to information-seeking queries. The main objectives are to make LLMs more reliable and to improve user trust by making model confidence transparent.

Background and Motivation

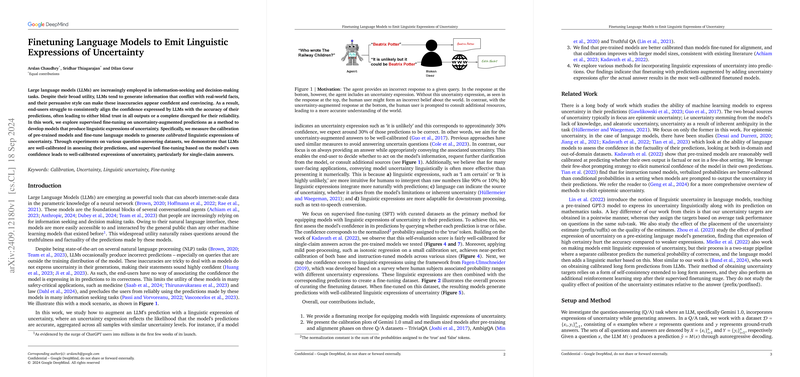

Despite their widespread applicability, LLMs frequently produce outputs with misleading confidence, especially when responding to out-of-distribution queries. Without uncertainty expressions in the outputs, end-users may either over-rely on them or disregard them entirely, which is a particular concern in sensitive domains such as healthcare or law. To address this, the paper focuses on giving LLMs the ability to express uncertainty in a calibrated manner, bridging the gap between model confidence and prediction correctness.

Methodology

The authors propose a methodical approach involving several stages:

- Self-Evaluation for Calibration: The paper uses a true/false self-evaluation task to assess the pre-trained model's confidence in its outputs. After isotonic regression is applied to these self-evaluation scores, calibration is nearly perfect across model sizes.

- Mapping Confidence to Linguistic Terms: Numerical confidence scores are converted to linguistic terms such as "likely" or "highly unlikely" through a predefined mapping derived from human perception studies, so that models are trained to express uncertainty in a linguistically intuitive manner.

- Fine-Tuning on Augmented Data: The fine-tuning datasets are augmented with uncertainty expressions using one of three techniques: prefixed, postfixed, or interleaved expressions. Among these, postfixed expressions gave the best calibration results, reportedly because they interfere less with the generative process.
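The three stages above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the paper's implementation: the pool-adjacent-violators routine is a generic way to fit isotonic regression, and the names `fit_isotonic`, `calibrate`, `TERMS`, `to_phrase`, and `augment`, the bin thresholds, and the output template are all hypothetical placeholders rather than values taken from the paper.

```python
from bisect import bisect_left


def fit_isotonic(scores, labels):
    """Pool-adjacent-violators fit of a non-decreasing step function
    from raw self-evaluation score to empirical accuracy.
    Tied scores are not pooled specially in this sketch."""
    blocks = []  # each block: [sum of labels, count, largest score in block]
    for score, label in sorted(zip(scores, labels)):
        blocks.append([float(label), 1, score])
        # Merge backwards while the previous block's mean exceeds the newest one's.
        while len(blocks) > 1 and (
            blocks[-2][0] / blocks[-2][1] > blocks[-1][0] / blocks[-1][1]
        ):
            total, count, upper = blocks.pop()
            blocks[-1][0] += total
            blocks[-1][1] += count
            blocks[-1][2] = upper
    uppers = [b[2] for b in blocks]
    means = [b[0] / b[1] for b in blocks]
    return uppers, means


def calibrate(score, model):
    """Look up the calibrated confidence for a raw score."""
    uppers, means = model
    idx = min(bisect_left(uppers, score), len(means) - 1)
    return means[idx]


# Hypothetical (upper bound, term) bins; the paper's actual mapping may differ.
TERMS = [
    (0.15, "highly unlikely"),
    (0.40, "unlikely"),
    (0.60, "about even"),
    (0.85, "likely"),
    (1.00, "highly likely"),
]


def to_phrase(confidence):
    """Map a calibrated confidence in [0, 1] to a linguistic term."""
    for upper, term in TERMS:
        if confidence <= upper:
            return term
    return TERMS[-1][1]


def augment(answer, raw_score, model, style="postfix"):
    """Attach an uncertainty expression before or after the answer."""
    term = to_phrase(calibrate(raw_score, model))
    if style == "prefix":
        return f"(confidence: {term}) {answer}"
    return f"{answer} (confidence: {term})"


# Toy held-out data: raw self-evaluation scores and whether the answer was correct.
scores = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9]
labels = [0, 0, 1, 0, 0, 1, 1, 1, 1]
model = fit_isotonic(scores, labels)
example = augment("Paris", 0.95, model)
```

Interleaved augmentation is omitted because it depends on where claims occur inside the answer text. In this sketch the calibration map is fitted once on held-out data and then reused to label each fine-tuning target.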

Experimental Results

The paper reports extensive experiments showing that the proposed approach significantly improves the calibration of LLMs. Across model sizes and training stages, on datasets such as TriviaQA and AmbigQA, postfixed uncertainty expressions yielded the lowest Expected Calibration Error (ECE) and Brier Score, indicating closer alignment between expressed confidence and accuracy.
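For readers unfamiliar with the two metrics, the following sketch computes them in their standard textbook form: the Brier Score is the mean squared difference between confidence and correctness, and ECE is the weighted average gap between accuracy and mean confidence over equal-width confidence bins. The function names and the choice of 10 bins are assumptions for illustration; the paper's exact binning is not stated here.

```python
def brier_score(confidences, correct):
    """Mean squared error between stated confidence and 0/1 correctness."""
    return sum((c - y) ** 2 for c, y in zip(confidences, correct)) / len(confidences)


def expected_calibration_error(confidences, correct, n_bins=10):
    """Weighted average of |accuracy - mean confidence| over equal-width bins."""
    bins = [[] for _ in range(n_bins)]
    for c, y in zip(confidences, correct):
        # A confidence of exactly 1.0 goes into the last bin.
        idx = min(int(c * n_bins), n_bins - 1)
        bins[idx].append((c, y))
    total = len(confidences)
    ece = 0.0
    for members in bins:
        if not members:
            continue
        avg_conf = sum(c for c, _ in members) / len(members)
        accuracy = sum(y for _, y in members) / len(members)
        ece += len(members) / total * abs(accuracy - avg_conf)
    return ece


# Toy example: four answers at 0.9 confidence (three correct),
# two answers at 0.1 confidence (both wrong).
confidences = [0.9, 0.9, 0.9, 0.9, 0.1, 0.1]
correct = [1, 1, 1, 0, 0, 0]
ece = expected_calibration_error(confidences, correct)
brier = brier_score(confidences, correct)
```

Lower is better for both metrics, and a model whose stated confidence always matches its accuracy scores an ECE of zero. When the model emits linguistic terms rather than numbers, each term would first have to be converted back to a numeric confidence before either metric can be computed.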

A noteworthy observation from the experiments is that larger models are inherently better calibrated, and well-calibrated models were able to produce linguistic uncertainty expressions that correlate well with their accuracy. However, instruction-tuned models showed comparatively poorer calibration.

Implications and Future Directions

The introduction of well-calibrated linguistic uncertainty alongside predictions paves the way for more reliable and transparent AI systems. This advancement is particularly promising for enhancing user trust in AI, especially in domains where the consequences of incorrect or misleading information can be severe.

Practically, this method provides a middle ground between giving a complete answer and abstaining from a response when uncertainty is high. It gives users an informed basis for decision-making: when the model expresses significant uncertainty, they can consult additional sources.

From a theoretical standpoint, these findings underline the importance of calibrating not just the outputs but also the model’s confidence expression mechanisms. The paper suggests incorporating this fine-tuned uncertainty expression framework as an intermediary phase in LLM development, possibly between supervised fine-tuning and RLHF.

Future work could expand on this by exploring more nuanced expressions of uncertainty, integrating aleatoric uncertainty, and further refining the self-evaluation methodology to enhance model introspection abilities. Additionally, extending this approach to multi-modal models could yield insights into cross-domain calibration techniques.

In conclusion, the paper contributes to the ongoing effort to make LLMs more reliable through nuanced, calibrated expressions of uncertainty, and it marks a step toward more trustworthy AI systems.