Human-like Affective Cognition in Foundation Models

Overview

The paper "Human-like Affective Cognition in Foundation Models" examines how well modern AI models understand and reason about human emotions. Because emotions play a fundamental role in human interaction, it matters whether AI systems can infer emotions, and the causes behind them, from descriptions of everyday situations. The research introduces an evaluation framework grounded in psychological theory and uses it to generate diverse scenarios that test affective cognition in foundation models such as GPT-4, Claude-3, and Gemini-1.5-Pro.

Methodology

The research methodology is structured into three primary stages:

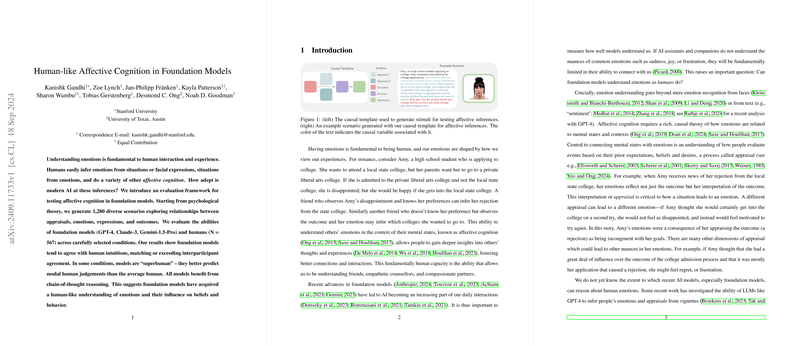

- Abstract Causal Template Definition: The foundation of the evaluation is an abstract causal graph, informed by psychological theory, that specifies the relationships between outcomes, appraisals, emotions, and facial expressions.

- Scenario Generation: Using an LLM, the variables in the template are populated to create specific scenarios. This stage generates appraisals, outcomes, and corresponding emotions, producing a rich dataset for evaluation.

- Question and Stimuli Compilation: The populated templates yield numerous combinations, facilitating the creation of specific questions that probe different affective inferences. This includes multimodal questions that integrate facial expressions rendered using Facial Action Units in Unity.

Through these stages, the researchers generated 1,280 diverse scenarios, each scrutinized by human participants to establish ground truth. These human judgments constituted a benchmark for evaluating the performance of the foundation models.
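The way a populated template expands into many questions can be illustrated with a minimal Python sketch. It is not the authors' pipeline: the slot contents, the `Scenario` class, and the helper names are hypothetical, and the slot values are hard-coded here, whereas the paper fills them with a language model.

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class Scenario:
    """One concrete instance of the abstract template (hypothetical structure)."""
    context: str
    appraisal: str
    outcome: str

    def to_text(self) -> str:
        return f"{self.context} {self.appraisal} {self.outcome}"


# Illustrative slot values; these are assumptions, not items from the paper.
CONTEXT = "Maya entered a regional baking contest."
APPRAISALS = [
    "Winning matters a great deal to her.",
    "She does not care much about winning.",
]
OUTCOMES = [
    "She wins first prize.",
    "She does not place.",
]
EMOTIONS = ["joy", "sadness", "anger", "surprise"]  # illustrative answer options


def build_scenarios() -> list[Scenario]:
    """Cross every appraisal with every outcome to get all combinations."""
    return [Scenario(CONTEXT, a, o) for a, o in product(APPRAISALS, OUTCOMES)]


def emotion_question(scenario: Scenario) -> str:
    """Turn a scenario into a forced-choice emotion-inference question."""
    options = ", ".join(EMOTIONS)
    return (
        f"{scenario.to_text()} "
        f"Which emotion is she most likely to feel? Options: {options}."
    )


scenarios = build_scenarios()
questions = [emotion_question(s) for s in scenarios]
```

With two appraisals and two outcomes, the sketch yields four scenarios. Other inference directions, such as asking for the outcome given the emotion, would reuse the same combinations with a different question wording.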

Results

The evaluation yielded several notable insights:

- Interparticipant Agreement: Human participants agreed with one another at rates well above chance when making affective inferences. This agreement among human judgments served as the reference point for evaluating the AI models.

- Model Performance: The foundation models (GPT-4, Claude-3, Gemini-1.5-Pro) showed substantial alignment with human intuitions:

  - Emotional Inference: For example, Claude-3 with chain-of-thought (CoT) reasoning exceeded human agreement scores in certain conditions, showing high accuracy in inferring emotions from appraisals and outcomes.

  - Outcome and Appraisal Inference: Human-like capabilities were also evident in inferring outcomes from emotional displays and appraisals, with GPT-4 showing exceptionally high agreement scores.

- Chain-of-Thought Reasoning: Introducing CoT reasoning significantly boosted the models’ performance, indicating that step-by-step reasoning brings their affective judgments closer to human reasoning patterns.
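The agreement scores discussed above can be made concrete with a small Python sketch. The exact metric is not spelled out in this summary, so the definitions below are assumptions: human agreement is computed leave-one-out against the most common answer of the other participants, and model agreement is the fraction of items where the model picks the most common human answer. The function names and the toy data are hypothetical.

```python
from collections import Counter


def modal_answer(responses: list[str]) -> str:
    """Most common answer; ties go to the answer that appears first."""
    return Counter(responses).most_common(1)[0][0]


def interparticipant_agreement(responses: list[str]) -> float:
    """Fraction of participants who match the modal answer of the others."""
    hits = 0
    for i, answer in enumerate(responses):
        others = responses[:i] + responses[i + 1:]
        hits += answer == modal_answer(others)
    return hits / len(responses)


def model_agreement(model_answers: list[str],
                    human_responses: list[list[str]]) -> float:
    """Fraction of items where the model matches the modal human answer."""
    matches = [
        m == modal_answer(h) for m, h in zip(model_answers, human_responses)
    ]
    return sum(matches) / len(matches)


# Toy data: two items, four participants each (made up for illustration).
human = [
    ["joy", "joy", "joy", "surprise"],
    ["sadness", "anger", "sadness", "sadness"],
]
model = ["joy", "anger"]

human_scores = [interparticipant_agreement(item) for item in human]
model_score = model_agreement(model, human)
```

Under these definitions a model can score higher than the human agreement rate, because a single model answer is compared against the modal human response while each individual participant is compared against the rest of the group.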

Discussion and Implications

The paper’s findings underscore the growing sophistication of foundation models in affective cognition. These models not only align with human judgments but, in some conditions, match or exceed the level of agreement observed among human participants. This has implications for both the theoretical understanding and the practical application of AI:

- Theoretical Implications:

  - Mechanistic Representations: Future research could delve into the neural network structures underpinning these capabilities, providing deeper insights into how emotions and affective reasoning are encoded and processed.

  - Origins of Affective Cognition: Understanding the data and training processes that facilitate the emergence of emotional reasoning in AI could inform both AI development and cognitive science.

- Practical Applications:

  - Enhanced Human-AI Interaction: These advancements pave the way for more empathetic and contextually aware AI systems, enhancing user experience in applications like virtual assistants and mental health support.

  - Ethical Considerations: While these capabilities present opportunities, they also pose risks of misuse. It is imperative to address potential ethical implications proactively, ensuring that affective AI respects user emotions and privacy.

Conclusion

The paper provides a rigorous framework for evaluating affective cognition in foundation models and demonstrates that these models can reason about human emotions effectively. By combining psychological theory with systematic scenario generation, it sets a benchmark for future research on how closely AI emotional reasoning tracks human emotional understanding. The findings are promising but call for caution: AI might not only understand human emotional interactions but potentially enhance them.