An Overview of MMEvol: Enhancing Multimodal LLMs through Evol-Instruct

The paper "MMEvol: Empowering Multimodal LLMs with Evol-Instruct" introduces MMEvol, an instruction data evolution framework designed to address limitations in the quality and diversity of the data used to train Multimodal LLMs (MLLMs). The framework aims to overcome the bottlenecks of traditional data curation methods by systematically increasing the complexity and diversity of image-text instruction datasets.

Motivation and Problem Statement

The development of MLLMs has advanced substantially in recent years, propelled by the success of LLMs. However, MLLM performance is constrained by the quantity and quality of multimodal instruction data. Current data collection methods, whether manual curation or distillation from black-box models, often yield simplistic or homogeneous datasets, which caps the models' ability to handle complex and diverse tasks. The challenge is to build instruction data that is both diverse and complex enough to raise that ceiling.

The MMEvol Framework

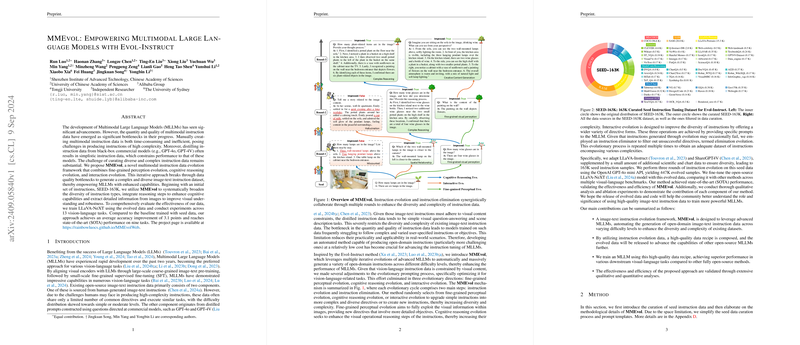

MMEvol introduces a systematic approach to iteratively improve the instruction data, incorporating three key evolutionary processes:

- Fine-grained Perceptual Evolution:

  - Aims to augment the data by focusing on less frequent visual objects in the dataset to address the issue of long-tail distribution.

  - Enhances the granularity of visual information used in instruction, ensuring a broader and more detailed understanding of visual content.

- Cognitive Reasoning Evolution:

  - Designed to augment the reasoning complexity in the instructional data by adding more detailed visual operational reasoning steps.

  - This process enhances the model's reasoning capabilities, allowing it to manage multi-step and complex queries effectively.

- Interactive Evolution:

  - Enhances the diversity of task forms by automatically generating various types of instructional formats.

  - This process addresses the limitation of pre-defined instruction formats, allowing the model to understand and generate a wider range of directives.

The iterative evolution process involves alternating between these evolution stages and an instruction elimination process, which filters out unsuccessful evolutions, optimizing the dataset's quality progressively.
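
The alternation between evolution and elimination described above can be sketched as a short loop. This is a minimal illustration under stated assumptions, not the paper's implementation: the names `Sample`, `evolve_dataset`, `make_toy_evolver`, and `toy_judge` are hypothetical, the evolution direction is picked at random per sample, and the evolvers are plain string rewrites standing in for whatever model-driven rewriting a real pipeline would use.

```python
import random
from dataclasses import dataclass, replace
from typing import Callable, List, Optional, Sequence


@dataclass(frozen=True)
class Sample:
    """One image-text instruction pair (field names are illustrative)."""
    image_id: str
    instruction: str
    answer: str
    rounds_evolved: int = 0


Evolver = Callable[[Sample], Sample]      # rewrites one sample
Judge = Callable[[Sample, Sample], bool]  # True if the evolution succeeded


def evolve_dataset(
    seed: Sequence[Sample],
    evolvers: Sequence[Evolver],
    is_successful: Judge,
    rounds: int = 3,
    rng: Optional[random.Random] = None,
) -> List[Sample]:
    """Alternate an evolution step with an elimination step for `rounds` rounds."""
    rng = rng or random.Random(0)
    current = list(seed)
    for _ in range(rounds):
        survivors = []
        for sample in current:
            # Assumption: one evolution direction is chosen at random per sample.
            candidate = rng.choice(evolvers)(sample)
            # Instruction elimination: a failed evolution is discarded and the
            # pre-evolution sample is carried forward unchanged.
            survivors.append(candidate if is_successful(sample, candidate) else sample)
        current = survivors
    return current


def make_toy_evolver(suffix: str) -> Evolver:
    """Build a stand-in evolver that only appends text to the instruction."""
    def evolve(sample: Sample) -> Sample:
        return replace(
            sample,
            instruction=f"{sample.instruction} {suffix}",
            rounds_evolved=sample.rounds_evolved + 1,
        )
    return evolve


toy_evolvers = [
    make_toy_evolver("Also describe the smallest object in the scene."),  # perceptual
    make_toy_evolver("Explain your reasoning step by step."),             # reasoning
    make_toy_evolver("Answer as a multiple-choice question."),            # interactive
]


def toy_judge(before: Sample, after: Sample) -> bool:
    """Toy success check: the instruction grew but stayed under a length cap."""
    return len(before.instruction) < len(after.instruction) <= 200


seed_data = [Sample("img-001", "What is in the image?", "A dog on a beach.")]
evolved = evolve_dataset(seed_data, toy_evolvers, toy_judge, rounds=3)
```

Keeping the judge as a separate callable mirrors the separation the text describes: the evolution step proposes a harder or more varied instruction, and the elimination step alone decides whether that proposal replaces the previous version.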

Experimental Setup and Results

The paper describes an experimental setup in which the evolved dataset, starting from SEED-163K, is used to train the LLaVA-NeXT model. Performance is evaluated across 13 vision-language benchmarks, showing an average accuracy improvement of 3.1 points over the baseline. The resulting model achieved state-of-the-art (SOTA) performance on nine of these tasks, demonstrating the efficacy of the evolved data.

Key findings from the experiments include:

- Enhanced Dataset Diversity and Complexity:

  - The evolved data showed a significant increase in skill length and reasoning step complexity.

  - The long-tail distribution of visual objects improved, suggesting better generalization and fewer visual hallucinations.

- Superior Performance:

  - The MMEvol-trained model improved markedly across multiple benchmarks, confirming the advantage of high-quality instructional data.

  - Comparative analysis with other models, such as Cambrian-1 and MiniCPM-v2.5, highlighted the importance of data quality over sheer volume in achieving superior model performance.
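
To make the long-tail finding concrete, one simple way to compare object distributions before and after evolution is to measure how much of the total object mentions fall on the most frequent objects. The sketch below is an illustrative metric, not the analysis used in the paper; the function name `head_share` and the toy object lists are assumptions made for the example.

```python
from collections import Counter
from typing import Iterable, Sequence


def head_share(object_lists: Iterable[Sequence[str]], k: int = 1) -> float:
    """Fraction of all object mentions that belong to the k most frequent objects.

    A lower value means mentions are spread more evenly across objects,
    i.e. rarer objects receive a larger share of the data.
    """
    counts = Counter(obj for objects in object_lists for obj in objects)
    total = sum(counts.values())
    if total == 0:
        return 0.0
    head = sum(count for _, count in counts.most_common(k))
    return head / total


# Toy data: objects mentioned per instruction (made up for illustration).
seed_objects = [["person"], ["person"], ["person", "dog"]]
evolved_objects = [
    ["person", "hat"],
    ["person", "leash"],
    ["person", "dog", "frisbee"],
]

seed_head = head_share(seed_objects)        # 3 of 4 mentions are "person"
evolved_head = head_share(evolved_objects)  # 3 of 7 mentions are "person"
```

In this toy case the most frequent object's share drops from 3/4 to 3/7, which is the kind of shift a less head-heavy object distribution would produce.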

Implications and Future Directions

The MMEvol framework represents a significant step forward in the instructional tuning of MLLMs, providing a scalable method to enhance both the complexity and diversity of multimodal datasets. This advancement has substantial implications for the practical deployment of MLLMs in real-world scenarios, where models must navigate diverse and complex instructional tasks.

Looking forward, two directions stand out for future research. Integrating image generation models to synthesize new visual content could extend the dataset beyond its existing images and further expand MLLM capabilities. Additionally, applying MMEvol at larger scale could yield more pronounced performance improvements.

Conclusion

MMEvol provides a compelling solution to the data quality bottlenecks faced by MLLMs, employing a methodical approach to evolve multimodal instruction datasets iteratively. The framework's demonstrated ability to enhance dataset diversity and complexity, coupled with its scalable nature, positions it as an essential tool in the ongoing development and refinement of MLLMs. The significant performance gains observed in its application underscore the critical role of high-quality data in maximizing model efficacy.