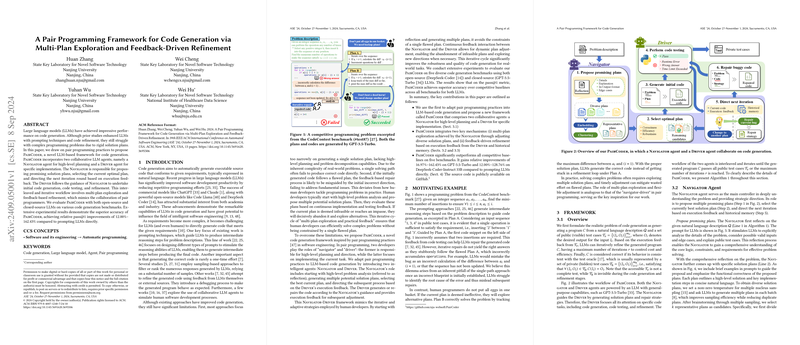

The paper introduces PairCoder, a novel framework for code generation using LLM agents that mimics pair programming practices. The framework addresses limitations in existing code generation approaches, which often struggle with complex programming problems due to their reliance on single, potentially flawed solution plans. PairCoder employs two collaborative agents: a Navigator and a Driver. The Navigator focuses on high-level planning, generating multiple solution plans, selecting the optimal plan, and directing subsequent iterations based on execution feedback. The Driver focuses on specific code implementation, including initial code generation, testing, and refinement, guided by the Navigator.

The core idea behind PairCoder is to emulate the iterative and adaptive strategies used by human developers, specifically the "multi-plan exploration and practical feedback" cycle. The Navigator agent first reflects on the problem description to understand the requirements, constraints, and edge cases. Based on this reflection, the Navigator proposes multiple candidate solution plans. These plans are then clustered into representative candidates using text embeddings and the k-means++ algorithm [kmeans]. In each iteration, the Navigator selects the best solution plan from the remaining candidates based on correctness, efficiency, and robustness. This plan is then passed to the Driver agent for code generation.
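The clustering step can be illustrated with a minimal, standard-library sketch. It is not the paper's implementation: the 2-D vectors below are toy stand-ins for real text embeddings, the function names are hypothetical, and picking the plan nearest each centroid as the cluster's representative is an assumption made for illustration.

```python
import random

def sq_dist(a, b):
    """Squared Euclidean distance between two vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

def kmeanspp_init(points, k, rng):
    """k-means++ seeding: each new center is sampled with probability
    proportional to its squared distance from the nearest existing center."""
    centers = [list(rng.choice(points))]
    while len(centers) < k:
        weights = [min(sq_dist(p, c) for c in centers) for p in points]
        if sum(weights) == 0:  # every point already coincides with a center
            centers.append(list(rng.choice(points)))
        else:
            centers.append(list(rng.choices(points, weights=weights, k=1)[0]))
    return centers

def assign(points, centers):
    """Label each point with the index of its nearest center."""
    return [min(range(len(centers)), key=lambda j: sq_dist(p, centers[j]))
            for p in points]

def kmeans(points, k, iters=20, seed=0):
    """Lloyd's algorithm started from a k-means++ seeding."""
    rng = random.Random(seed)
    centers = kmeanspp_init(points, k, rng)
    for _ in range(iters):
        labels = assign(points, centers)
        for j in range(k):
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:  # keep the old center if a cluster is empty
                centers[j] = [sum(col) / len(members) for col in zip(*members)]
    return assign(points, centers), centers

def representatives(plans, embeddings, k, seed=0):
    """Return one plan per non-empty cluster: the one nearest the centroid
    (an assumed selection rule, not taken from the paper)."""
    labels, centers = kmeans(embeddings, k, seed=seed)
    reps = []
    for j in range(k):
        idx = [i for i, lab in enumerate(labels) if lab == j]
        if idx:
            best = min(idx, key=lambda i: sq_dist(embeddings[i], centers[j]))
            reps.append(plans[best])
    return reps

# Toy usage: six plans whose made-up embeddings form three obvious groups.
plans = ["brute force", "brute force with pruning",
         "dynamic programming", "memoized recursion",
         "greedy", "greedy after sorting"]
embeddings = [(0.0, 0.0), (0.1, 0.0), (5.0, 5.0), (5.1, 5.0), (10.0, 0.0), (10.0, 0.1)]
reps = representatives(plans, embeddings, k=3)
```

In practice the embeddings would come from a text-embedding model, and a library implementation of k-means++ could replace the hand-written one.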

The Driver agent generates the initial code based on the selected plan. The generated code is tested against a set of public test cases, each pairing an input with its desired output. The execution feedback is categorized into four types: Pass, Runtime Error, Wrong Answer, and Time Limit Exceeded. If the code passes all public test cases, the process terminates. Otherwise, the Driver sends the code and execution feedback back to the Navigator.
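The four feedback categories can be sketched as a small test runner. This is an illustrative sketch only: it assumes the candidate solution is a Python script that reads standard input and writes standard output, the function names and the default time limit are hypothetical, and stopping at the first failing test is an assumption rather than something the summary specifies.

```python
import subprocess
import sys

def run_public_test(code, test_input, expected_output, time_limit=2.0):
    """Run `code` as a script on one public test and return a feedback label."""
    try:
        proc = subprocess.run(
            [sys.executable, "-c", code],
            input=test_input,
            capture_output=True,
            text=True,
            timeout=time_limit,
        )
    except subprocess.TimeoutExpired:
        return "Time Limit Exceeded"
    if proc.returncode != 0:  # uncaught exception or non-zero exit
        return "Runtime Error"
    if proc.stdout.strip() != expected_output.strip():
        return "Wrong Answer"
    return "Pass"

def evaluate(code, public_tests, time_limit=2.0):
    """Return 'Pass' only if every test passes, else the first failure label."""
    for test_input, expected_output in public_tests:
        label = run_public_test(code, test_input, expected_output, time_limit)
        if label != "Pass":
            return label
    return "Pass"

# Toy usage: a candidate that adds two integers read from one line.
public_tests = [("1 2\n", "3"), ("10 5\n", "15")]
candidate = "a, b = map(int, input().split())\nprint(a + b)"
label = evaluate(candidate, public_tests)
```

Generated code is untrusted, so a real harness would run it inside a sandbox rather than directly on the host.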

To avoid getting stuck in a dead-end loop, PairCoder incorporates a long-term memory module that stores the coding history and the execution feedback history. The Navigator uses this history to determine whether to change the solution plan or continue refining the current code. If the generated code or execution feedback has already occurred in the past, the Navigator selects a new solution plan from the remaining candidates. Otherwise, the Navigator proposes a repair strategy based on the execution feedback, and the Driver refines the code accordingly. The process continues until the code passes all public test cases or the maximum number of iterations is reached.
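The plan-switching rule can be sketched as a control loop. Everything here is a hypothetical stand-in: `generate`, `repair`, and `run_tests` replace the LLM-backed Driver and the test harness, taking the next plan from a list replaces the Navigator's selection, and returning `None` when no candidate plans remain is an assumption the summary does not cover. Feedback is assumed to be a full message string, so that two different wrong answers do not count as a repeat.

```python
def pair_loop(plans, generate, repair, run_tests, max_iters=10):
    """Refine code until it passes, switching plans when history repeats."""
    code_history, feedback_history = [], []  # long-term memory
    remaining = list(plans)
    if not remaining:
        return None
    plan = remaining.pop(0)  # stand-in for the Navigator's plan selection
    code = generate(plan)
    for _ in range(max_iters):
        feedback = run_tests(code)
        if feedback == "Pass":
            return code
        # A repeated code version or feedback message signals a dead end.
        repeated = code in code_history or feedback in feedback_history
        code_history.append(code)
        feedback_history.append(feedback)
        if repeated:
            if not remaining:
                return None  # assumption: give up when no plans are left
            plan = remaining.pop(0)
            code = generate(plan)
        else:
            code = repair(plan, code, feedback)
    return None

# Toy usage with deterministic stubs: plan "A" can never be repaired,
# so the loop detects the repeat and falls back to plan "B".
result = pair_loop(
    plans=["A", "B"],
    generate=lambda plan: f"{plan}-v0",
    repair=lambda plan, code, feedback: code,  # a repair that makes no progress
    run_tests=lambda code: "Pass" if code == "B-v0" else f"Wrong Answer: {code}",
)
```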

PairCoder's time complexity is the product of the number of iterations and the cost of the operations within each iteration. Its space complexity is determined by the stored history of code and execution feedback.

The paper evaluates PairCoder on five code generation benchmarks: HumanEval, HumanEval+, MBPP, MBPP+, and CodeContest. The results demonstrate that PairCoder achieves superior accuracy compared to competitive baselines, including prompting techniques like CoT [cot] and SCoT, as well as refinement-based approaches like Self-repair, Self-debugging, INTERVENOR, and Reflexion. PairCoder achieves relative pass@1 improvements of 12.00% to 162.43% compared to direct prompting with LLMs.
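For reference, a relative improvement is usually computed as below. This assumes the standard definition, which the summary does not spell out, and the numbers in the worked example are hypothetical rather than results from the paper.

$$
\text{relative improvement} = \frac{\text{pass@1}_{\text{PairCoder}} - \text{pass@1}_{\text{direct}}}{\text{pass@1}_{\text{direct}}} \times 100\%
$$

With a hypothetical direct-prompting pass@1 of 50.0 and a hypothetical PairCoder pass@1 of 56.0, this gives $(56.0 - 50.0) / 50.0 \times 100\% = 12\%$.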

The paper analyzes the impact of the maximum number of iterations and the number of clusters on PairCoder's accuracy. As the iteration count increases, PairCoder exhibits continuous accuracy improvement, outperforming refinement-based baselines, which tend to plateau after a certain number of iterations. A moderate number of clusters appears to be optimal for PairCoder at the tested maximum number of iterations.

Ablation studies demonstrate that both multi-plan exploration and feedback-driven refinement contribute to PairCoder's performance, with feedback-driven refinement showing more significant improvements across the benchmarks. Error analysis reveals that Wrong Answer is the most common error type, highlighting the need to improve the functional correctness of code generation. Limited coverage of the public test cases is also found to constrain PairCoder's effectiveness.

The paper also conducts a cost analysis, measuring the average number of API calls and token consumption per problem. PairCoder requires more API calls than most prompting techniques and some refinement-based approaches but keeps token consumption moderate, a cost that the performance gains justify.