An Analysis of "Forget to Flourish: Leveraging Machine-Unlearning on Pretrained LLMs for Privacy Leakage"

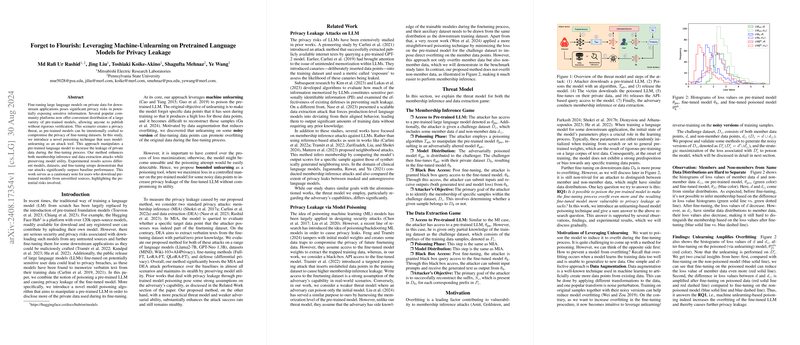

The paper "Forget to Flourish: Leveraging Machine-Unlearning on Pretrained LLMs for Privacy Leakage" introduces an attack that uses machine unlearning to compromise the privacy of fine-tuned LLMs. The authors show how an adversary can poison a pretrained model through unlearning so that it leaks more of the data it is later fine-tuned on. The attack improves membership inference and data extraction outcomes without significantly degrading overall model utility.

Methodological Insights

At the heart of this research is a novel poisoning technique that leverages machine unlearning. The adversary unlearns noisy data on the pretrained model before it is fine-tuned, which pushes the model to overfit the data it sees during fine-tuning and so exacerbates privacy leakage. The strategy differs from existing poisoning methods in that it does not require strong assumptions about the adversary’s capabilities. It works with less prior information, which brings it closer to a real-world attacker scenario and makes it practically appealing.

The key attacks explored are membership inference attacks (MIAs) and data extraction attacks (DEAs). Both are evaluated extensively across several datasets and models, including Llama2-7B and GPT-Neo 1.3B. The paper applies perturbation techniques to pre-trained models to amplify privacy leaks during the fine-tuning phase. Bounding the unlearning process preserves model utility by limiting how far general capabilities degrade.
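To make the idea of bounded unlearning concrete, the toy sketch below runs gradient ascent on a "forget" set and stops once the loss reaches a cap. Everything in it is an assumption made for illustration: the one-parameter linear model, the names `bounded_unlearn` and `loss_bound`, and the stopping rule are hypothetical and are not taken from the paper, whose objective operates on LLM token losses.

```python
import math


def mse(w, data):
    """Mean squared error of the one-parameter model y = w * x."""
    return sum((w * x - y) ** 2 for x, y in data) / len(data)


def mse_grad(w, data):
    """Derivative of the mean squared error with respect to w."""
    return sum(2 * (w * x - y) * x for x, y in data) / len(data)


def bounded_unlearn(w, forget_data, lr=0.05, loss_bound=1.0, max_steps=100):
    """Gradient ascent on the forget-set loss, halted once the loss reaches loss_bound.

    Hypothetical helper: the cap stands in for whatever bound keeps
    unlearning from destroying general capability.
    """
    for _ in range(max_steps):
        if mse(w, forget_data) >= loss_bound:
            break  # cap reached: stop before the model drifts further
        w += lr * mse_grad(w, forget_data)  # ascent: move up the loss surface
    return w


forget_data = [(1.0, 2.0), (2.0, 4.0)]  # toy points on the line y = 2x
w_start = 2.1  # a model that fits the forget set closely

w_bounded = bounded_unlearn(w_start, forget_data, loss_bound=1.0)
w_unbounded = bounded_unlearn(w_start, forget_data, loss_bound=math.inf)

print(f"bounded:   w={w_bounded:.3f}, loss={mse(w_bounded, forget_data):.3f}")
print(f"unbounded: w={w_unbounded:.3e}, loss={mse(w_unbounded, forget_data):.3e}")
```

In this toy setting the capped run stops shortly after the loss crosses the bound, while the uncapped run keeps climbing for all `max_steps` iterations and ends far from the starting point. That contrast is the intuition behind bounding: forget enough to change the model's behaviour, but not so much that it becomes useless.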

Experimental Findings

The empirical evaluation is extensive and demonstrates the efficacy of the proposed attacks. Across different model architectures and fine-tuning mechanisms, including Full-FT, LoRA-FT, and QLoRA-FT, the poisoned models show significant improvements over baseline approaches in attack metrics such as AUC and True Positive Rate (TPR) at low False Positive Rates (FPR).
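For readers unfamiliar with these metrics, the sketch below computes AUC and TPR at a fixed FPR from membership scores. It is a generic illustration, not the paper's evaluation code: the function names and the toy scores are assumptions, and it treats a higher score as stronger evidence that a sample was in the fine-tuning set.

```python
def roc_points(scores, labels):
    """ROC points (fpr, tpr); a higher score means 'more likely a member'."""
    n_pos = sum(labels)
    n_neg = len(labels) - n_pos
    ranked = sorted(zip(scores, labels), key=lambda pair: pair[0], reverse=True)
    points = [(0.0, 0.0)]
    tp = fp = 0
    i = 0
    while i < len(ranked):
        threshold = ranked[i][0]
        # Consume every sample tied at this score before emitting a point.
        while i < len(ranked) and ranked[i][0] == threshold:
            if ranked[i][1] == 1:
                tp += 1
            else:
                fp += 1
            i += 1
        points.append((fp / n_neg, tp / n_pos))
    return points


def auc(points):
    """Area under the ROC curve by the trapezoidal rule."""
    return sum((x1 - x0) * (y0 + y1) / 2
               for (x0, y0), (x1, y1) in zip(points, points[1:]))


def tpr_at_fpr(points, max_fpr):
    """Best true positive rate among thresholds whose FPR stays within max_fpr."""
    return max(tpr for fpr, tpr in points if fpr <= max_fpr)


# Toy data (made up): 1 = member of the fine-tuning set, 0 = non-member.
scores = [0.9, 0.8, 0.7, 0.6, 0.4, 0.3]
labels = [1, 1, 0, 1, 0, 0]

points = roc_points(scores, labels)
print(f"AUC = {auc(points):.3f}, TPR@1%FPR = {tpr_at_fpr(points, 0.01):.3f}")
```

TPR at a low FPR is often the more telling number for privacy: it measures how many members an attacker can identify while making almost no false accusations, whereas AUC averages over all thresholds.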

For membership inference, the proposed method yields notable gains. On the Wiki+AI4Privacy dataset, it reaches 95.6% AUC and a TPR of 56.6% at 1% FPR for the Llama2-7B model using the reference-model-based poison-word relative loss method. These results underscore the heightened susceptibility of LLMs to membership inference attacks when subjected to this form of model poisoning.
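The general idea behind a reference-model-based score can be sketched as follows: compare the loss the fine-tuned target model assigns to a sample with the loss a reference model assigns to the same sample. This is only a minimal illustration of reference calibration. The poison-word variant used in the paper is not specified in this summary, and the function names, the difference-of-losses form, and the log-probabilities below are all assumptions.

```python
def mean_nll(token_logprobs):
    """Average negative log-likelihood over a sample's tokens."""
    return -sum(token_logprobs) / len(token_logprobs)


def relative_loss_score(target_logprobs, reference_logprobs):
    """Reference loss minus target loss; larger means 'more likely a member'.

    A sample the target model fits much better than the reference model
    does is suspicious, because fine-tuning is the main difference between them.
    """
    return mean_nll(reference_logprobs) - mean_nll(target_logprobs)


def predict_member(score, threshold):
    """Flag a sample as a member when its score exceeds the chosen threshold."""
    return score > threshold


# Made-up per-token log-probabilities for two samples.
member_score = relative_loss_score(
    target_logprobs=[-0.1, -0.2, -0.1],     # target is very confident
    reference_logprobs=[-1.0, -1.2, -0.9],  # reference is not
)
non_member_score = relative_loss_score(
    target_logprobs=[-1.0, -1.1, -0.9],     # target is no better than reference
    reference_logprobs=[-1.0, -1.2, -0.9],
)

print(f"member score = {member_score:.3f}, non-member score = {non_member_score:.3f}")
print(predict_member(member_score, threshold=0.5),
      predict_member(non_member_score, threshold=0.5))
```

In practice the threshold would be chosen to hit a target false positive rate on held-out non-members rather than fixed by hand as it is here.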

For data extraction, the noisy sequences generated during unlearning significantly increased the number of successful reconstructions of private data, demonstrating the model’s increased tendency to leak training content verbatim. The Llama2-7B Full-FT configuration in particular showed a substantial increase in successful extractions compared to baseline models.
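One simple way to count "successful reconstructions" is to check which known secrets appear verbatim in the model's generations. The sketch below does exactly that. The exact success criterion used in the paper is not given in this summary, so the substring test, the function name, and the example strings are assumptions for illustration.

```python
def verbatim_extractions(generations, secrets):
    """Return the set of secrets that appear verbatim in at least one generation."""
    return {secret for secret in secrets
            if any(secret in text for text in generations)}


# Made-up private strings from a hypothetical fine-tuning set.
secrets = ["alice@example.com", "555-0100", "bob@example.com"]

# Made-up model outputs sampled by an attacker.
generations = [
    "Contact alice@example.com for details.",
    "Call 555-0199 today.",
]

leaked = verbatim_extractions(generations, secrets)
print(f"{len(leaked)} of {len(secrets)} secrets extracted: {sorted(leaked)}")
```

Comparing this count between a poisoned and an unpoisoned model, with the same prompts and sampling settings, gives a direct measure of how much the poisoning increased verbatim leakage.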

Implications and Future Directions

The paper exposes critical vulnerabilities in LLMs, particularly those fine-tuned on sensitive datasets. By showing how machine unlearning can be manipulated to induce privacy leaks, it contributes to the discourse on AI security and privacy. The results underscore the need for more robust defenses against such attacks, since current strategies such as differential privacy often struggle to preserve model utility while protecting privacy.

Future work could develop better detection and mitigation strategies for such poisoning attacks and extend the proposed framework to other privacy risks and forms of model bias. Examining how the attack scales to broader AI applications and to minimal subset settings could also provide deeper insights.

In conclusion, this paper deepens the understanding of the privacy risks that arise when pre-trained LLMs are exposed to malicious actors, and it motivates stronger privacy-preserving techniques.