LLM Pruning and Distillation in Practice: The Minitron Approach

"LLM Pruning and Distillation in Practice: The Minitron Approach," by Sharath Turuvekere Sreenivas et al., presents a practical methodology for compressing large language models (LLMs) while retaining most of their accuracy. The paper applies it to Llama 3.1 8B and Mistral NeMo 12B, compressing them to 4B and 8B parameters, respectively, by combining structured pruning with knowledge distillation.

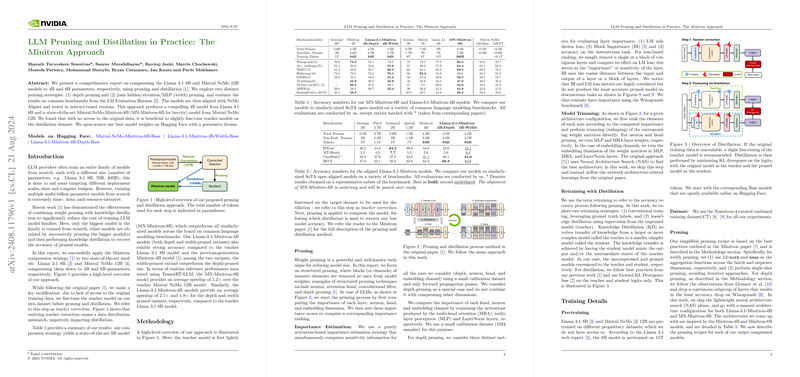

Methodology

Pruning Strategies

The paper compares two pruning strategies: depth pruning and width pruning. Depth pruning removes entire layers, while width pruning shrinks components within each layer (MLP neurons, attention heads, and embedding channels) and keeps the original number of layers. For width pruning, component importance is estimated from activations collected on a small calibration dataset. For depth pruning, layer importance is judged by how removing layers affects validation loss and downstream accuracy.
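As a rough illustration of both strategies, here is a toy NumPy sketch. It scores MLP neurons by their activations on a calibration batch and keeps the top half (width pruning). It then scores residual blocks by how much skipping each one changes the output and drops the two weakest (depth pruning). This is not the paper's implementation. The layer shapes, the mean-activation aggregation, and the output-MSE proxy for layer importance (the paper looks at validation loss and downstream accuracy) are assumptions made for illustration, and all helper names are hypothetical.

```python
import numpy as np

def mlp(x, W1, W2):
    # Toy ReLU MLP: (tokens, D) -> (tokens, D). W1: (H, D), W2: (D, H).
    return np.maximum(x @ W1.T, 0.0) @ W2.T

def neuron_importance(x_calib, W1):
    # Assumed aggregation: mean activation of each hidden neuron over all
    # calibration tokens (ReLU outputs are non-negative). Forward pass only.
    hidden = np.maximum(x_calib @ W1.T, 0.0)   # (tokens, H)
    return hidden.mean(axis=0)                 # (H,)

def prune_mlp_width(W1, W2, importance, keep):
    # Keep the `keep` most important neurons: rows of W1 and matching columns of W2.
    idx = np.sort(np.argsort(importance)[::-1][:keep])
    return W1[idx, :], W2[:, idx]

def layer_importance(x_calib, blocks):
    # Hypothetical proxy for depth importance: MSE between the full model's output
    # and the output with one residual block skipped (larger = more important).
    def run(skip=None):
        x = x_calib
        for i, (W1, W2) in enumerate(blocks):
            if i != skip:
                x = x + mlp(x, W1, W2)
        return x
    ref = run()
    return np.array([np.mean((run(skip=i) - ref) ** 2) for i in range(len(blocks))])

rng = np.random.default_rng(0)
D, H, N_LAYERS = 16, 64, 6
x_calib = rng.normal(size=(256, D))  # hypothetical calibration inputs
blocks = [(rng.normal(0, 0.1, size=(H, D)), rng.normal(0, 0.1, size=(D, H)))
          for _ in range(N_LAYERS)]

# Width pruning of the first block's MLP: 64 -> 32 neurons.
W1, W2 = blocks[0]
imp = neuron_importance(x_calib, W1)
W1_p, W2_p = prune_mlp_width(W1, W2, imp, keep=32)
print(W1_p.shape, W2_p.shape)  # (32, 16) (16, 32)

# Depth pruning: keep the 4 most important of 6 residual blocks, in original order.
layer_imp = layer_importance(x_calib, blocks)
keep_layers = sorted(int(i) for i in np.argsort(layer_imp)[::-1][:4])
pruned_blocks = [blocks[i] for i in keep_layers]
print(len(pruned_blocks))  # 4
```

Both scores need only forward passes, so estimating importance is cheap compared with training. The accuracy lost by cutting neurons or layers is what the distillation stage then has to recover.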

Distillation

Knowledge distillation (KD) is used to recover the accuracy lost during pruning. The original, unpruned model (the teacher) guides the training of the smaller, pruned model (the student). During training, the forward KL divergence between the teacher's and the student's output probability distributions (computed from their logits) is minimized, which pushes the student to mimic the teacher. The original training data is not available, so the paper introduces a key modification called "teacher correction": the teacher is first fine-tuned on the distillation dataset and only then used for pruning and distillation. This step addresses the distribution mismatch between the teacher's original training data and the distillation data, and it substantially improves the effectiveness of distillation.
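The distillation objective can be sketched in NumPy as the forward KL divergence KL(p_teacher ‖ p_student) between the softmax distributions of the two models' logits, averaged over token positions. Its gradient with respect to the student logits is the difference of the two distributions, q - p, divided by the number of positions. This is a minimal sketch under simplifying assumptions: the shapes are arbitrary toy sizes, and the "student" is a matrix of logits optimized directly rather than a pruned network.

```python
import numpy as np

def log_softmax(logits):
    # Numerically stable log-softmax over the last (vocabulary) axis.
    shifted = logits - logits.max(axis=-1, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=-1, keepdims=True))

def forward_kl(teacher_logits, student_logits):
    # Forward KL(p || q), p = teacher, q = student; inputs are (positions, vocab).
    log_p = log_softmax(teacher_logits)
    log_q = log_softmax(student_logits)
    return float(np.mean(np.sum(np.exp(log_p) * (log_p - log_q), axis=-1)))

def forward_kl_grad(teacher_logits, student_logits):
    # Gradient of the mean forward KL w.r.t. student logits: (q - p) / num_positions.
    p = np.exp(log_softmax(teacher_logits))
    q = np.exp(log_softmax(student_logits))
    return (q - p) / teacher_logits.shape[0]

rng = np.random.default_rng(0)
teacher = rng.normal(size=(8, 32))   # 8 token positions, toy vocabulary of 32
student = np.zeros_like(teacher)     # toy "student": logits optimized directly

losses = []
for _ in range(200):
    losses.append(forward_kl(teacher, student))
    student -= 10.0 * forward_kl_grad(teacher, student)

print(losses[0] > losses[-1])  # True
```

In a real setup the same loss would be backpropagated through the student network's weights. Teacher correction only changes which teacher logits feed into `forward_kl`.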

Results

The paper's results are notable:

- The MN-Minitron-8B model, derived from Mistral NeMo 12B, outperforms similarly sized models on common benchmarks, including Winogrande, ARC-Challenge, MMLU, and HellaSwag.

- The Llama-3.1-Minitron-4B models, in both depth- and width-pruned variants, also perform strongly, with the width-pruned variant consistently more accurate than the depth-pruned one.

- Instruction-tuned versions of these models, evaluated on IFEval, MT-Bench, ChatRAG-Bench, and BFCL, show instruction-following, conversational, and function-calling capabilities that are competitive with or better than those of similarly sized models.
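The two Llama-3.1-Minitron-4B variants reach their size along different axes, and a rough parameter count makes the trade-off concrete. The sketch counts parameters for a Llama-style decoder, assuming grouped-query attention, a SwiGLU MLP, RMSNorm, no biases, and untied input and output embeddings. The base values follow the public Llama 3.1 8B configuration. The two pruned configurations are hypothetical illustrations, not necessarily the exact Minitron architectures.

```python
from dataclasses import dataclass, replace

@dataclass(frozen=True)
class DecoderConfig:
    vocab: int
    hidden: int      # embedding / residual-stream width
    ffn: int         # MLP intermediate width
    layers: int
    heads: int
    kv_heads: int    # grouped-query attention
    head_dim: int

def count_params(c: DecoderConfig) -> int:
    # Assumes: no biases, SwiGLU MLP (gate/up/down), two RMSNorms per layer,
    # a final RMSNorm, and an untied input embedding / LM head.
    attn = (c.hidden * c.heads * c.head_dim             # q_proj
            + 2 * c.hidden * c.kv_heads * c.head_dim    # k_proj, v_proj
            + c.heads * c.head_dim * c.hidden)          # o_proj
    mlp = 3 * c.hidden * c.ffn
    per_layer = attn + mlp + 2 * c.hidden
    embeddings = 2 * c.vocab * c.hidden                 # embedding + LM head
    return c.layers * per_layer + embeddings + c.hidden

base = DecoderConfig(vocab=128256, hidden=4096, ffn=14336, layers=32,
                     heads=32, kv_heads=8, head_dim=128)
depth_pruned = replace(base, layers=16)                 # hypothetical
width_pruned = replace(base, hidden=3072, ffn=9216)     # hypothetical

for name, cfg in [("base", base), ("depth", depth_pruned), ("width", width_pruned)]:
    print(f"{name}: {count_params(cfg) / 1e9:.2f}B")
# base: 8.03B
# depth: 4.54B
# width: 4.51B
```

Halving the depth leaves the roughly 1.05B embedding and LM-head parameters untouched. Width pruning of embedding channels shrinks them along with every layer. Both routes can therefore land near 4.5B parameters with very different shapes.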

These results underline the effectiveness of the Minitron approach: the compressed models are cheaper to run while matching or exceeding the accuracy of similarly sized models. MN-Minitron-8B, for instance, outperforms its teacher on certain benchmarks and delivers a 1.2x inference speedup over Mistral NeMo 12B.

Implications and Future Directions

The practical implications of this work are significant:

- Deployment Efficiency: Smaller models substantially reduce the compute cost of deploying LLMs in real-world applications.

- Environmental Impact: Smaller models require less energy and fewer resources, which is beneficial from an environmental sustainability perspective.

- Accessibility: Releasing the model weights openly on Hugging Face makes these compressed LLMs accessible for broader research and industry use.

On the theoretical side, the work advances understanding of how structured pruning and knowledge distillation can be combined effectively. The teacher-correction results in particular indicate that adapting the teacher to the distillation data is important for effective distillation when the original training data is unavailable.

Future research could explore several promising avenues:

- Automated Architecture Search: Integrate more sophisticated Neural Architecture Search (NAS) methods to find the best pruning configuration automatically.

- Enhanced Pruning Metrics: Develop more precise importance metrics for pruning to further reduce accuracy loss.

- Broader Applications: Apply these techniques to a wider variety of LLMs and tasks to validate their generalizability.

Conclusion

This paper offers a comprehensive framework for reducing the size of LLMs without significantly sacrificing accuracy. By combining structured pruning with knowledge distillation and adding steps such as teacher correction, the authors provide a practical answer to the high cost of training and deploying large LLMs. The findings have substantial implications for both the practical deployment and the theoretical understanding of LLM compression, paving the way for more efficient AI applications.