RAGLAB: A Modular and Research-Oriented Unified Framework for Retrieval-Augmented Generation

Overview

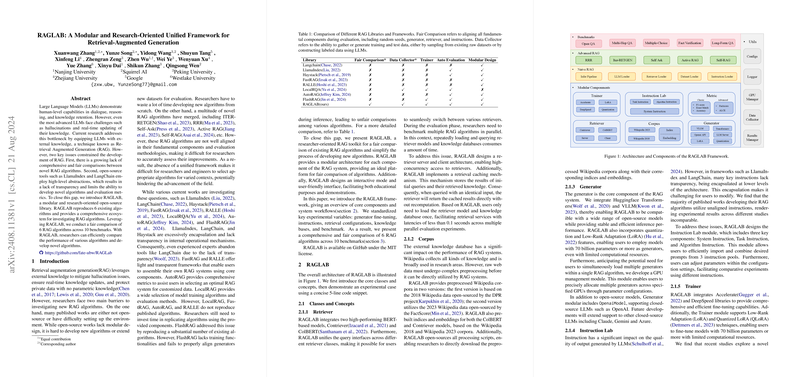

The paper presents RAGLAB, an open-source, research-oriented framework designed to facilitate the development, comparison, and evaluation of Retrieval-Augmented Generation (RAG) algorithms. RAGLAB addresses key challenges currently hindering the advancement of RAG methodologies, specifically the lack of comprehensive and fair comparisons between novel algorithms and the opacity inherent in existing frameworks such as LlamaIndex and LangChain.

Core Contributions

- Modular Architecture:

- RAGLAB decomposes a RAG system into modular components: retrievers, generators, corpora, trainers, and instruction sets. Researchers can replace or upgrade any one of these components to develop a new algorithm or re-evaluate an existing one.

- Reproduction and Extension:

- The framework reproduces six existing RAG algorithms (Naive RAG, RRR, ITER-RETGEN, Self-Ask, Active RAG, and Self-RAG), which serve as a standardized baseline for comparison. Researchers can extend these implementations or benchmark new methods against them.

- Transparency and Fair Comparison:

- RAGLAB mitigates the heavy encapsulation and opacity of other frameworks. The paper compares six RAG algorithms across ten benchmarks under uniform conditions: aligned instructions, fixed random seeds, and consistent hardware configurations.

- Performance Metrics and Benchmarks:

- RAGLAB incorporates three classic metrics (accuracy, exact match, and F1 score) and two advanced metrics (FactScore and ALCE). The benchmarks span OpenQA, Multi-HopQA, Multiple-Choice, Fact Verification, and Long-Form QA. The paper reports per-benchmark results for each algorithm, so their relative strengths can be compared across task types.
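The three classic metrics are simple enough to state in code. The sketch below assumes the widely used SQuAD-style answer normalization (lowercasing, stripping punctuation and English articles); RAGLAB's own implementation may normalize differently. The `accuracy` helper (a case-insensitive exact label match, as for a choice letter or a true/false verdict) is an illustrative assumption, not the framework's definition. FactScore and ALCE rely on model-based judgments and are not sketched here.

```python
import re
import string
from collections import Counter

_PUNCTUATION = set(string.punctuation)


def normalize_answer(text: str) -> str:
    """SQuAD-style normalization: lowercase, drop punctuation and English articles, collapse whitespace."""
    text = "".join(ch for ch in text.lower() if ch not in _PUNCTUATION)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return " ".join(text.split())


def exact_match(prediction: str, gold: str) -> float:
    """1.0 if the normalized prediction equals the normalized gold answer, else 0.0."""
    return float(normalize_answer(prediction) == normalize_answer(gold))


def f1_score(prediction: str, gold: str) -> float:
    """Token-level F1: harmonic mean of precision and recall over shared normalized tokens."""
    pred_tokens = normalize_answer(prediction).split()
    gold_tokens = normalize_answer(gold).split()
    overlap = sum((Counter(pred_tokens) & Counter(gold_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred_tokens)
    recall = overlap / len(gold_tokens)
    return 2 * precision * recall / (precision + recall)


def accuracy(predictions: list[str], labels: list[str]) -> float:
    """Share of predictions that equal their label after trimming and lowercasing (hypothetical definition)."""
    if not predictions:
        return 0.0
    hits = sum(p.strip().lower() == y.strip().lower() for p, y in zip(predictions, labels))
    return hits / len(predictions)


if __name__ == "__main__":
    print(exact_match("The Eiffel Tower", "eiffel tower"))  # 1.0
    print(f1_score("Paris, France", "Paris"))  # 0.6666666666666666
```

Exact match is all-or-nothing, while F1 gives partial credit: "Paris, France" against the gold answer "Paris" has precision 0.5 and recall 1.0.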

Experimental Results and Analysis

The framework is used to evaluate the RAG algorithms with three base models: Llama3-8B, Llama3-70B, and GPT-3.5. The main findings are:

- Impact of Model Size:

- With selfrag-llama3-8B as the generator, Self-RAG's performance across benchmarks did not differ significantly from that of the other RAG algorithms. With selfrag-llama3-70B, however, it improved substantially, suggesting that the benefits of Self-RAG depend on the size of the underlying model.

- Algorithmic Comparisons:

- Naive RAG, RRR, ITER-RETGEN, and Active RAG performed similarly across multiple datasets, with ITER-RETGEN holding a slight edge on Multi-HopQA. Notably, RAG systems did not outperform LLMs answering directly without retrieval on multiple-choice tasks, an observation consistent with other research findings.
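One plausible reason an iterative method can help on multi-hop questions shows up in a small sketch of the ITER-RETGEN idea: each retrieval round queries with the question concatenated with the previous round's generation, so an entity surfaced in an intermediate answer can pull in a passage the question alone would not retrieve. The word-overlap retriever, the `echo_top` stub generator, and the three-passage corpus are hypothetical stand-ins, not RAGLAB components, and the iteration and top-k defaults are arbitrary.

```python
import re
from typing import Callable

RetrieveFn = Callable[[str, int], list[str]]  # (query, k) -> top-k passages
GenerateFn = Callable[[str, list[str]], str]  # (question, passages) -> answer text


def _words(text: str) -> set[str]:
    return set(re.findall(r"\w+", text.lower()))


def make_overlap_retriever(corpus: list[str]) -> RetrieveFn:
    """Toy stand-in for BM25 or dense retrieval: rank by shared-word count; ties keep corpus order."""
    def retrieve(query: str, k: int) -> list[str]:
        q = _words(query)
        return sorted(corpus, key=lambda p: len(q & _words(p)), reverse=True)[:k]
    return retrieve


def iter_retgen(question: str, retrieve: RetrieveFn, generate: GenerateFn,
                iterations: int = 2, top_k: int = 2) -> tuple[str, list[list[str]]]:
    """Alternate retrieval and generation; later rounds query with the question plus the previous draft.

    Returns the final draft and the passages retrieved in each round.
    """
    draft, history = "", []
    for _ in range(iterations):
        query = f"{question} {draft}".strip()  # round 1: the question alone
        passages = retrieve(query, top_k)
        history.append(passages)
        draft = generate(question, passages)
    return draft, history


if __name__ == "__main__":
    corpus = [
        "Inception is directed by Christopher Nolan.",
        "Paris is a city in France.",
        "Christopher Nolan has London as birthplace.",
    ]

    def echo_top(question: str, passages: list[str]) -> str:
        """Hypothetical stub LLM: its 'answer' is simply the top-ranked passage."""
        return passages[0]

    _, history = iter_retgen("Where was the director of Inception born?",
                             make_overlap_retriever(corpus), echo_top)
    for round_no, passages in enumerate(history, start=1):
        print(round_no, passages)
```

In this toy run, round 1 retrieves the Inception passage plus the Paris distractor; round 2, whose query now contains "Christopher Nolan" from the draft, retrieves the birthplace passage in place of the distractor.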

Practical and Theoretical Implications

Practical Implications:

- RAGLAB provides researchers and developers with a flexible, transparent, and efficient toolset to test novel RAG algorithms or optimize existing ones. The modular design facilitates rapid prototyping, fair comparisons, and extensive evaluation, thereby accelerating the development lifecycle and enhancing reproducibility in RAG research.
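A minimal sketch of what swapping components under fixed conditions can look like: the pipeline depends only on two small interfaces, so a retriever can be replaced without touching generation or evaluation code, while the instruction template and seed stay identical across runs. The class names (`NaiveRAG`, `FirstKRetriever`, `OverlapRetriever`, `EchoGenerator`) and the `evaluate` helper are hypothetical and do not reflect RAGLAB's actual API.

```python
import random
from dataclasses import dataclass
from typing import Callable, Protocol


class Retriever(Protocol):
    def search(self, query: str, k: int) -> list[str]: ...


class Generator(Protocol):
    def generate(self, prompt: str) -> str: ...


@dataclass
class NaiveRAG:
    """Retrieve once, then generate; both components are injected, so either can be swapped."""
    retriever: Retriever
    generator: Generator
    instruction: str  # one template shared by every pipeline under comparison
    top_k: int = 1

    def answer(self, question: str) -> str:
        context = "\n".join(self.retriever.search(question, self.top_k))
        return self.generator.generate(self.instruction.format(context=context, question=question))


class FirstKRetriever:
    """Baseline that ignores the query and returns the first k passages."""
    def __init__(self, corpus: list[str]) -> None:
        self.corpus = corpus

    def search(self, query: str, k: int) -> list[str]:
        return self.corpus[:k]


class OverlapRetriever(FirstKRetriever):
    """Ranks passages by the number of words they share with the query."""
    def search(self, query: str, k: int) -> list[str]:
        q = set(query.lower().split())
        return sorted(self.corpus, key=lambda p: len(q & set(p.lower().split())), reverse=True)[:k]


class EchoGenerator:
    """Stand-in LLM that returns its prompt, so the score reflects what retrieval put in context."""
    def generate(self, prompt: str) -> str:
        return prompt


def evaluate(pipeline: NaiveRAG, dataset: list[tuple[str, str]],
             metric: Callable[[str, str], float], seed: int = 42) -> float:
    """Mean metric over (question, gold) pairs, with Python's RNG seeded identically for every run."""
    random.seed(seed)  # a real LLM run would also seed its ML framework and fix decoding settings
    return sum(metric(pipeline.answer(q), gold) for q, gold in dataset) / len(dataset)


if __name__ == "__main__":
    corpus = ["paris is the capital of france", "berlin is the capital of germany"]
    dataset = [("what is the capital of germany", "berlin"),
               ("what is the capital of france", "paris")]
    template = "Context:\n{context}\nQuestion: {question}\nAnswer:"

    def contains(prediction: str, gold: str) -> float:
        return float(gold in prediction.lower())

    for retriever in (FirstKRetriever(corpus), OverlapRetriever(corpus)):
        pipeline = NaiveRAG(retriever, EchoGenerator(), template)
        print(type(retriever).__name__, evaluate(pipeline, dataset, contains))
    # FirstKRetriever 0.5, then OverlapRetriever 1.0
```

Because the echo generator returns its prompt, the score reduces to whether retrieval placed the gold answer in context, which isolates the effect of the one component that changed between runs.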

Theoretical Implications:

- The findings underscore the role of model size and of the retrieval strategy (for example, iterative retrieval on multi-hop questions) in RAG system performance. In addition, the uniform benchmarking approach proposed by RAGLAB could serve as a standard for evaluating RAG methods, encouraging more robust and transparent research practices.

Future Directions:

- RAGLAB currently covers only six algorithms and ten benchmarks, a limitation that points to future work: adding more algorithms and benchmarks, and examining how different retrievers and knowledge bases affect results, would provide deeper insights.

- Adding evaluation metrics for resource consumption and inference latency would make the framework's evaluation more comprehensive.
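As a rough illustration of the latency and resource metrics mentioned above, a harness could time each query and record peak memory while a pipeline runs. This is a minimal sketch assuming the pipeline is exposed as a plain `answer(question) -> str` callable (a hypothetical signature). Python's `tracemalloc` only tracks allocations made through Python's own allocator, so GPU memory and model-server costs would need separate tooling.

```python
import math
import statistics
import time
import tracemalloc
from typing import Callable


def profile_pipeline(answer: Callable[[str], str], questions: list[str]) -> dict[str, float]:
    """Time each query and record peak Python-heap usage while the pipeline runs."""
    if not questions:
        raise ValueError("need at least one question")
    latencies: list[float] = []
    tracemalloc.start()
    try:
        for question in questions:
            start = time.perf_counter()
            answer(question)
            latencies.append(time.perf_counter() - start)
        _, peak_bytes = tracemalloc.get_traced_memory()
    finally:
        tracemalloc.stop()
    latencies.sort()
    p95 = latencies[math.ceil(0.95 * len(latencies)) - 1]  # nearest-rank 95th percentile
    return {
        "mean_latency_s": statistics.mean(latencies),
        "p95_latency_s": p95,
        "peak_python_mib": peak_bytes / 2**20,  # Python allocator only; excludes GPU memory
    }


if __name__ == "__main__":
    def slow_echo(question: str) -> str:
        time.sleep(0.01)  # stand-in for retrieval + generation
        return question

    print(profile_pipeline(slow_echo, ["q1", "q2", "q3"]))
```

Reporting a tail percentile alongside the mean matters for iterative methods, where the number of retrieval rounds can vary per query.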

Conclusion

RAGLAB represents a significant advancement in Retrieval-Augmented Generation research, addressing pivotal issues of transparency and comparability in algorithm evaluation. It supports both fair benchmarking of existing methods and streamlined development of new algorithms. As the field progresses, RAGLAB is well placed to become a key tool for researchers refining retrieval-augmented systems efficiently and transparently.