Speech-MASSIVE: A Multilingual Speech Dataset for SLU and Beyond

Introduction

The paper introduces Speech-MASSIVE, a spoken extension of the MASSIVE text corpus built to support Spoken Language Understanding (SLU) and related tasks. The dataset spans 12 languages from diverse language families and inherits MASSIVE's annotations for intent prediction and slot filling. The authors aim to fill the gap in massively multilingual SLU datasets and to provide a resource for evaluating and improving foundation models (e.g., LLMs, speech encoders) across languages and tasks. The same data also supports benchmarking of automatic speech recognition (ASR), speech translation (ST), and language identification (LID).

Data Collection and Validation

The audio was collected through crowdsourcing: native speakers recorded spoken versions of MASSIVE sentences, and a separate group of native speakers validated the recordings. Validation followed a two-iteration protocol in which recordings marked invalid in the first pass went through a second round. Samples with known quality labels were also inserted into the validation phase so that the validators' own work could be checked.
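The quality-control idea can be illustrated with a minimal sketch. The summary does not say how the authors scored validators, so everything here is an assumption for illustration: the `Judgment` record, the `min_accuracy` threshold, and the rule that judgments from validators who fail the control samples are ignored.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, Set, Tuple


@dataclass(frozen=True)
class Judgment:
    """One validator's verdict on one recording (hypothetical record type)."""
    validator: str
    recording_id: str
    is_valid: bool


def trusted_validators(
    judgments: Iterable[Judgment],
    controls: Dict[str, bool],
    min_accuracy: float = 0.8,  # assumed threshold, not taken from the paper
) -> Set[str]:
    """Return validators whose accuracy on the control samples is high enough."""
    hits: Dict[str, int] = {}
    totals: Dict[str, int] = {}
    for j in judgments:
        if j.recording_id in controls:
            totals[j.validator] = totals.get(j.validator, 0) + 1
            correct = j.is_valid == controls[j.recording_id]
            hits[j.validator] = hits.get(j.validator, 0) + int(correct)
    return {v for v, n in totals.items() if hits[v] / n >= min_accuracy}


def split_by_validity(
    judgments: Iterable[Judgment],
    controls: Dict[str, bool],
    min_accuracy: float = 0.8,
) -> Tuple[Set[str], Set[str]]:
    """Split real recordings into accepted and rejected sets.

    Only judgments from trusted validators count. A recording with any
    trusted 'invalid' verdict is rejected, so it can enter a second iteration.
    """
    judgments = list(judgments)
    trusted = trusted_validators(judgments, controls, min_accuracy)
    accepted: Set[str] = set()
    rejected: Set[str] = set()
    for j in judgments:
        if j.recording_id in controls or j.validator not in trusted:
            continue
        (accepted if j.is_valid else rejected).add(j.recording_id)
    return accepted - rejected, rejected


# Toy data: "c1" is a control sample known to be valid.
controls = {"c1": True}
judgments = [
    Judgment("ann", "c1", True),   # ann passes the control
    Judgment("ann", "r1", True),
    Judgment("ann", "r2", False),
    Judgment("bob", "c1", False),  # bob fails the control
    Judgment("bob", "r3", True),   # so this verdict is ignored
]
accepted, rejected = split_by_validity(judgments, controls)
```

In this toy run, `rejected` holds the recordings that would be queued for the second iteration, while `r3` stays undecided because its only verdict came from an untrusted validator.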

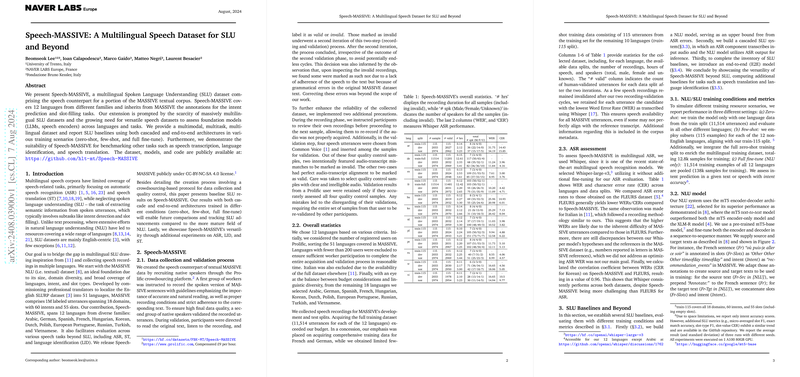

Dataset Statistics

The paper reports per-language statistics for Speech-MASSIVE: the number of samples, the hours of recorded speech, and speaker demographics. The training data was recorded in full for French and German, while the other languages received only a few-shot training subset. This design reflects practical constraints such as budget and crowdworker availability while still preserving linguistic diversity.

ASR Performance

The authors evaluated Whisper, a state-of-the-art multilingual ASR model, on Speech-MASSIVE. Word Error Rates (WERs) were higher on Speech-MASSIVE than on FLEURS, which suggests that its utterances are harder to transcribe. However, the WERs on the two datasets are highly correlated (0.96), so languages that Whisper handles well or poorly on one dataset tend to be handled the same way on the other.
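For readers unfamiliar with the two quantities involved, here is a minimal, dependency-free sketch of a sentence-level WER and of a Pearson correlation between two lists of per-language scores. Two assumptions apply: the summary does not state which correlation coefficient the authors used (Pearson is assumed here), and the example sentences are invented for illustration rather than taken from either dataset.

```python
import math
from typing import Sequence


def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words."""
    ref, hyp = reference.split(), hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j] = edit distance between the ref words seen so far and hyp[:j]
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (ref_word != hyp_word)
            deletion = prev[j] + 1
            insertion = curr[j - 1] + 1
            curr.append(min(substitution, deletion, insertion))
        prev = curr
    return prev[-1] / len(ref)


def pearson(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson correlation coefficient of two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    norm_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    norm_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (norm_x * norm_y)


# Invented example: one deleted word and one substituted word out of five.
wer = word_error_rate("wake me up at nine", "wake me at five")
```

Corpus-level WER is usually computed by summing edits and reference words over all utterances rather than averaging sentence-level scores. To reproduce the comparison described above, `pearson` would receive one WER per language for each dataset.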

SLU Baselines

Three main SLU baselines were established:

- NLU Model: mT5-based models applied to the text, serving as an ideal upper bound free from ASR errors.

- Cascaded SLU System: Whisper transcribes the audio, and the mT5 model predicts intent and slots from the transcript.

- End-to-End (E2E) Model: Whisper is trained to perform SLU directly, bypassing intermediate text representations.

Performance improved progressively from the zero-shot to the few-shot to the full fine-tuning regime. Notably, under full fine-tuning the cascaded SLU system was competitive with the text-only NLU baseline, especially for languages that are well represented in mC4, the corpus on which mT5 was pre-trained.
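The structural difference between the baselines can be sketched as function composition. This is only an illustration: `toy_nlu`, `perfect_asr`, and `noisy_asr` are hypothetical stand-ins for mT5 and Whisper, and the intent and slot labels are chosen for the example rather than quoted from the paper.

```python
from typing import Callable, Dict, NamedTuple


class SLUOutput(NamedTuple):
    intent: str
    slots: Dict[str, str]


Transcriber = Callable[[bytes], str]      # audio -> text (ASR)
TextNLU = Callable[[str], SLUOutput]      # text -> intent and slots
SpeechSLU = Callable[[bytes], SLUOutput]  # audio -> intent and slots


def make_cascade(asr: Transcriber, nlu: TextNLU) -> SpeechSLU:
    """Cascaded SLU: transcribe first, then run the text NLU model."""
    def run(audio: bytes) -> SLUOutput:
        return nlu(asr(audio))
    return run


# An E2E model would be a single SpeechSLU callable with no text step.

def toy_nlu(text: str) -> SLUOutput:
    """Hypothetical rule-based stand-in for the mT5 NLU model."""
    words = text.split()
    intent = "alarm_set" if "wake" in words else "unknown"
    slots = {"time": words[-1]} if intent == "alarm_set" else {}
    return SLUOutput(intent, slots)


def perfect_asr(audio: bytes) -> str:
    """Stand-in ASR that returns the reference transcript."""
    return "wake me up at nine"


def noisy_asr(audio: bytes) -> str:
    """Stand-in ASR with one misrecognized word."""
    return "wake me up at five"


audio = b"\x00\x01"  # placeholder bytes, not real audio
gold = toy_nlu("wake me up at nine")  # NLU upper bound on reference text
cascade_clean = make_cascade(perfect_asr, toy_nlu)(audio)
cascade_noisy = make_cascade(noisy_asr, toy_nlu)(audio)
```

With a perfect transcript the cascade matches the text-only NLU output. With the noisy transcript the intent survives but the `time` slot is wrong, which shows how ASR errors propagate through a cascade and why the NLU model acts as an upper bound.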

Comparative Performance and Training Language Impact

In zero-shot settings with English training data, the cascaded SLU system generally outperformed the E2E models. In the few-shot scenario, however, E2E models achieved comparable or better performance, especially when first trained on French data from Speech-MASSIVE. The choice of training language therefore has a significant influence on SLU performance, particularly in multilingual and few-shot contexts.

Versatility Beyond SLU

Additional baselines for LID and ST show that Speech-MASSIVE is useful beyond SLU. Whisper's results on these tasks give a reference point for evaluating cross-task and cross-lingual capabilities and point to the dataset's potential for broader speech research.

Conclusion

Speech-MASSIVE is a 12-language resource for SLU and other speech-related tasks. The dataset and its benchmarks enable evaluation and comparison across languages and tasks, supporting work on multilingual, multimodal, and multi-task speech processing. Future research can explore how the training language influences SLU performance, compare cascaded and E2E architectures in greater depth, and push the boundaries of multi-task speech systems.

Implications and Future Directions

In practice, Speech-MASSIVE can aid the development of more robust multilingual SLU systems and so improve user interactions across diverse languages. On the research side, the dataset offers a testbed for studying multilingual model training and the relative strengths of cascaded and E2E architectures in zero-shot and few-shot settings. Future research should focus on extending the multilingual corpora used to train foundation models and on multi-task learning paradigms that exploit cross-task synergies.

By making Speech-MASSIVE publicly available, the authors set a foundation that could catalyze significant progress in multilingual SLU and related fields.