MedTrinity-25M: A Comprehensive Multimodal Dataset for Medical AI

Overview

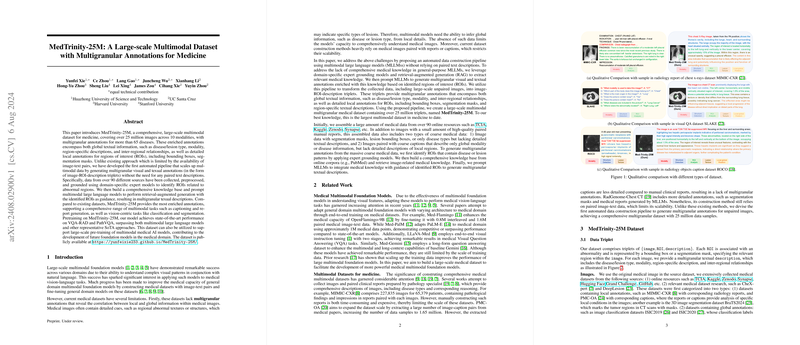

MedTrinity-25M is a large-scale multimodal medical dataset comprising over 25 million images across 10 modalities and covering more than 65 diseases. Each image is paired with detailed multigranular annotations, including descriptions of disease types, regions of interest (ROIs), modality information, region-specific descriptions, and inter-regional relationships. Unlike earlier datasets that depend on paired image-text data, MedTrinity-25M employs an automated pipeline that generates annotations from unpaired images, which is what allows the data to scale to this size.

Dataset Construction

Data Collection

MedTrinity-25M aggregates data from over 90 sources, including well-known repositories such as TCIA, Kaggle, Zenodo, and Synapse. This extensive collection encompasses various imaging modalities, including X-ray, MRI, CT, Ultrasound, and Histopathology, ensuring comprehensive coverage of medical imaging techniques. The data sources include images annotated with different levels of detail, from broad disease types to precisely marked segmentation masks and bounding boxes.

Annotation Strategy

- Metadata Integration: Basic image attributes, such as modality and disease types, are derived from existing dataset metadata. This metadata is used to generate "coarse captions," which provide essential contextual information for each image.

- ROI Locating: Various expert models (e.g., SAT, CheXmask, HoVer-Net) are leveraged to identify ROIs within the images. These models use either text prompts or segmentation techniques to locate regions indicative of abnormalities.

- Medical Knowledge Retrieval: To enhance the quality of textual descriptions, external medical knowledge is integrated. This knowledge is retrieved from databases such as PubMed and StatPearls, ensuring that the annotations are infused with domain-specific expertise.
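The first two steps above can be illustrated with a minimal Python sketch. It is not the authors' implementation: the `ImageMetadata` fields, the caption wording, and the `(x_min, y_min, x_max, y_max)` box convention are all assumptions made for illustration. It shows how a coarse caption might be built from dataset metadata and how a binary segmentation mask could be reduced to an ROI bounding box.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

# Hypothetical box convention: (x_min, y_min, x_max, y_max), inclusive pixels.
BBox = Tuple[int, int, int, int]


@dataclass
class ImageMetadata:
    """Hypothetical container for attributes taken from source-dataset metadata."""
    modality: str
    disease: Optional[str] = None


def coarse_caption(meta: ImageMetadata) -> str:
    """Build a short caption from metadata; the wording is an assumption."""
    if meta.disease:
        return f"{meta.modality} image showing {meta.disease}."
    return f"{meta.modality} image."


def mask_to_bbox(mask: List[List[int]]) -> Optional[BBox]:
    """Return the tight bounding box around nonzero pixels, or None if empty."""
    rows = [r for r, row in enumerate(mask) if any(row)]
    cols = [c for row in mask for c, value in enumerate(row) if value]
    if not rows:
        return None
    return (min(cols), min(rows), max(cols), max(rows))


if __name__ == "__main__":
    print(coarse_caption(ImageMetadata("CT", "pneumonia")))
    print(mask_to_bbox([[0, 0, 0], [0, 1, 1], [0, 0, 1]]))
```

In a real pipeline the mask would come from an expert segmentation model rather than a hand-written list; the conversion step stays the same.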

Automated Annotation Pipeline

The automated annotation pipeline bypasses the need for paired image-text data, relying instead on domain-specific expert models and multimodal large language models (MLLMs). The pipeline consists of two major stages:

- Data Processing: This stage involves preprocessing the data to extract coarse captions, locate ROIs, and retrieve relevant medical knowledge. These elements provide a foundation upon which detailed annotations can be built.

- Generation of Multigranular Text Descriptions: Using the processed data, MLLMs (such as GPT-4V and LLaVA-Med Captioner) are prompted to generate structured, multigranular text descriptions. These descriptions offer a layered understanding of the image, integrating global and local information.
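To make the second stage concrete, the sketch below assembles the processed inputs (coarse caption, ROI boxes, retrieved knowledge) into a single text prompt. The template, the `build_prompt` function, and the section names are hypothetical; the source does not give the actual prompt, and no real MLLM API is called here.

```python
from typing import List, Sequence, Tuple

# Hypothetical box convention: (x_min, y_min, x_max, y_max).
BBox = Tuple[int, int, int, int]

# Sections requested from the model. The names follow the annotation fields
# listed in the overview; the exact wording is an assumption.
SECTIONS: List[str] = [
    "modality",
    "disease type",
    "region of interest",
    "region-specific description",
    "inter-regional relationships",
]


def build_prompt(
    coarse_caption: str,
    rois: Sequence[BBox],
    knowledge: Sequence[str],
) -> str:
    """Combine the stage-one outputs into one structured prompt string."""
    roi_lines = [
        f"- ROI {i}: box {box}" for i, box in enumerate(rois, start=1)
    ] or ["- none located"]
    knowledge_lines = [f"- {snippet}" for snippet in knowledge] or [
        "- none retrieved"
    ]
    section_lines = [f"{i}. {name}" for i, name in enumerate(SECTIONS, start=1)]
    return "\n".join(
        [
            "Coarse caption: " + coarse_caption,
            "Regions of interest:",
            *roi_lines,
            "Retrieved medical knowledge:",
            *knowledge_lines,
            "Describe the image in the following sections:",
            *section_lines,
        ]
    )


if __name__ == "__main__":
    print(build_prompt("CT image showing pneumonia.", [(1, 1, 2, 2)], []))
```

The resulting string would be sent to the MLLM together with the image; keeping prompt assembly as a pure function makes it easy to inspect what the model is given for each image.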

Evaluation and Quality

To ensure the generated annotations are of high quality and align well with human-generated annotations, the dataset was evaluated using GPT-4V. This evaluation focused on five key attributes: modality, structure detection, ROI analysis, lesion texture, and local-global relationships. The alignment scores indicate a high degree of agreement with human annotations, validating the dataset's reliability.
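As an illustration of how per-attribute alignment could be summarized, the sketch below averages scores over a set of evaluated samples for the five attributes named above. The score scale, the dictionary layout, and the `mean_alignment` function are assumptions; the source does not specify how the scores were computed or aggregated.

```python
from statistics import mean
from typing import Dict, List

# The five attributes named in the evaluation description.
ATTRIBUTES: List[str] = [
    "modality",
    "structure detection",
    "ROI analysis",
    "lesion texture",
    "local-global relationships",
]


def mean_alignment(scores: List[Dict[str, float]]) -> Dict[str, float]:
    """Average each attribute's score across samples.

    Each element of `scores` is a hypothetical per-sample record mapping
    attribute name to a numeric alignment score.
    """
    if not scores:
        raise ValueError("no scores to aggregate")
    return {attr: mean(sample[attr] for sample in scores) for attr in ATTRIBUTES}


if __name__ == "__main__":
    samples = [dict.fromkeys(ATTRIBUTES, 1.0), dict.fromkeys(ATTRIBUTES, 0.5)]
    print(mean_alignment(samples))
```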

Benchmarking with MedTrinity-25M

The efficacy of MedTrinity-25M was demonstrated through the training of LLaVA-Med++, a state-of-the-art model for medical visual question answering (VQA). Pretraining on MedTrinity-25M led to significant improvements in performance across multiple VQA benchmarks (VQA-RAD, SLAKE, and PathVQA). These results underscore the dataset's potential to enhance the capabilities of multimodal medical AI models.

Practical Implications and Future Directions

By providing a large-scale, richly annotated dataset, MedTrinity-25M significantly lowers the barrier for training advanced AI models in medicine. Its comprehensive coverage across various modalities and diseases makes it an invaluable resource for developing AI models that can perform a range of tasks, from diagnostic imaging to automated report generation. Future developments could include expanding the dataset with additional modalities and diseases and further refining the annotation pipeline to incorporate evolving AI technologies and medical knowledge bases.

In summary, MedTrinity-25M addresses the critical need for large, detailed multimodal datasets in medical AI. Its automated pipeline for annotation, combined with the dataset's breadth and depth, positions it as a cornerstone resource for the next generation of medical AI research and applications.