Analysis of "Inductive or Deductive? Rethinking the Fundamental Reasoning Abilities of LLMs"

The paper "Inductive or Deductive? Rethinking the Fundamental Reasoning Abilities of LLMs" by Cheng et al. investigates the reasoning capabilities of large language models (LLMs), focusing on the difference between inductive reasoning (inferring a general rule from specific examples) and deductive reasoning (applying a given rule to reach a conclusion). Although LLM reasoning has been studied extensively, most existing work does not disentangle these two fundamental types of reasoning, which blurs the picture of what the models can actually do. The paper addresses this gap by analyzing LLM performance on tasks that specifically require either inductive or deductive reasoning.

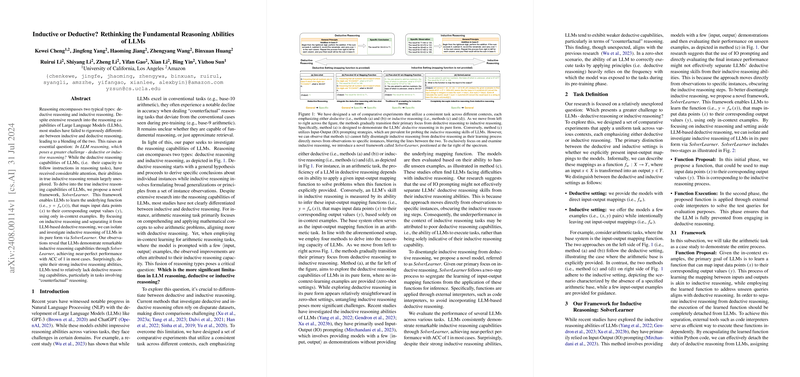

The authors introduce a framework called SolverLearner to isolate and examine the inductive reasoning abilities of LLMs. Given in-context input-output examples, the LLM learns the underlying function that maps inputs to their outputs and expresses it as executable code; an external code interpreter then runs this function on the test inputs. Because the model never applies the learned rule itself, deductive reasoning is not conflated with inductive reasoning.
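The SolverLearner loop can be sketched in a few lines of Python. This is a minimal illustration, not the authors' implementation: `propose_solver_code` is a hypothetical stand-in for the LLM call (it returns a fixed answer so the sketch runs offline), and the word-reversal task is a toy example. The point is the division of labor: the model only proposes the function, and the interpreter applies it to held-out inputs.

```python
from typing import Callable, Dict, List, Tuple

Example = Tuple[str, str]


def propose_solver_code(examples: List[Example]) -> str:
    """Hypothetical stand-in for the LLM call.

    In SolverLearner the model reads the in-context examples and writes a
    function; here a fixed answer is returned so the sketch runs offline.
    """
    return (
        "def solver(x):\n"
        "    # Reverse the order of the words in the input string.\n"
        "    return ' '.join(reversed(x.split()))\n"
    )


def load_solver(code: str) -> Callable[[str], str]:
    """Execute the proposed code in a fresh namespace and return `solver`.

    The interpreter, not the LLM, performs the deductive step of applying
    the rule. Model-written code should be sandboxed in a real pipeline.
    """
    namespace: Dict[str, object] = {}
    exec(code, namespace)
    return namespace["solver"]  # type: ignore[return-value]


def accuracy(solver: Callable[[str], str], tests: List[Example]) -> float:
    """Fraction of held-out examples the executed function gets exactly right."""
    correct = sum(1 for x, y in tests if solver(x) == y)
    return correct / len(tests)


in_context = [("cats chase mice", "mice chase cats"), ("I like tea", "tea like I")]
held_out = [("dogs eat bones", "bones eat dogs"), ("we read books", "books read we")]

solver = load_solver(propose_solver_code(in_context))
print(accuracy(solver, held_out))  # 1.0
```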

Key Findings

- Inductive Reasoning Excellence: The empirical results show that when inductive reasoning is isolated from deduction, LLMs, particularly GPT-4, exhibit remarkable inductive reasoning capabilities. With SolverLearner they achieve near-perfect performance, reaching an accuracy (ACC) of 1 in most cases, which shows that these models can learn and generalize functions from a small number of in-context examples.

- Deductive Reasoning Deficiencies: By contrast, LLMs perform worse at deductive reasoning, especially on "counterfactual" tasks whose rules deviate from the scenarios commonly seen during pre-training. This is consistent with observations that LLMs struggle to follow instructions and execute commands in zero-shot settings, and it points to a fundamental limitation in their deductive capacities.

- Task-Specific Performance:

  - Arithmetic Tasks: Zero-shot performance on base-10 arithmetic is strong, likely because such problems are abundant in pre-training data. Performance declines in other bases, which are encountered far less often.

  - Basic Syntactic Reasoning: Inductive reasoning through SolverLearner achieves perfect results across a range of artificial syntactic transformations, outperforming conventional input-output (IO) prompting.

  - Spatial Reasoning and Cipher Decryption: SolverLearner also learns and applies the underlying rules effectively in spatial reasoning and decryption tasks, whereas performance in purely deductive settings is comparatively poor.
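To see why non-decimal bases make a useful counterfactual test, note that the same surface string can have different correct answers depending on the base. The helper names below are hypothetical, and the bases shown are illustrative rather than the paper's exact experimental grid. Under SolverLearner, the model would have to induce something equivalent to `add_in_base(a, b, 9)` from examples alone.

```python
def to_base(n: int, base: int) -> str:
    """Render a non-negative integer as a digit string in the given base (2-16)."""
    digits = "0123456789ABCDEF"
    if n == 0:
        return "0"
    out = []
    while n > 0:
        n, r = divmod(n, base)
        out.append(digits[r])
    return "".join(reversed(out))


def add_in_base(a: str, b: str, base: int) -> str:
    """Add two numbers written in `base` and return the sum in the same base."""
    # int(s, base) parses digit strings in bases 2 through 36.
    return to_base(int(a, base) + int(b, base), base)


print(add_in_base("27", "6", 10))  # 33
print(add_in_base("27", "6", 9))   # 34, since 27 (base 9) is 25 and 25 + 6 = 31 = 3 * 9 + 4
print(add_in_base("7A", "9", 16))  # 83
```

A model that pattern-matches on memorized base-10 arithmetic would answer "33" for the base-9 query as well, which is exactly the failure the counterfactual setting is designed to expose.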

Methodological Insights

The paper investigates the distinction between inductive and deductive reasoning systematically through carefully designed tasks. For inductive reasoning, the authors use tasks such as arithmetic in non-standard bases, syntactic transformations, spatial reasoning with altered coordinate systems, and decryption using non-standard ciphers. SolverLearner keeps deductive reasoning out of these measurements by delegating the execution of each learned function to an external interpreter.
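As a hedged illustration of inducing a rule from examples, consider a Caesar-style cipher with a non-standard shift. The shift of 7 is an arbitrary assumption, not one of the paper's ciphers, and the brute-force `induce_shift` search is a stand-in for what the LLM would do, not SolverLearner itself. Recovering the shift from the pairs is the inductive step; applying it to new ciphertext is the deductive step that SolverLearner hands to the interpreter.

```python
import string

ALPHABET = string.ascii_lowercase
SECRET_SHIFT = 7  # arbitrary, non-standard shift chosen for illustration


def shift_text(text: str, k: int) -> str:
    """Shift each lowercase letter forward by k positions; other characters pass through."""
    return "".join(
        ALPHABET[(ALPHABET.index(c) + k) % 26] if c in ALPHABET else c
        for c in text
    )


def induce_shift(pairs):
    """Return the decryption shift consistent with every (ciphertext, plaintext) pair.

    Tries each of the 26 candidate rules and keeps the first that explains all
    examples, or returns None if no single shift fits.
    """
    for k in range(26):
        if all(shift_text(ct, k) == pt for ct, pt in pairs):
            return k
    return None


plaintexts = ["hello world", "reasoning", "large language models"]
examples = [(shift_text(p, SECRET_SHIFT), p) for p in plaintexts]

k = induce_shift(examples)
print(k)  # 19, because 7 + 19 = 26 undoes the encryption
print(shift_text(shift_text("deduction", SECRET_SHIFT), k))  # deduction
```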

For the deductive evaluation, the mapping function is given to the model explicitly, in two settings: zero-shot, where the model receives only the rule, and 8-IO with mapping function, where the rule is accompanied by eight input-output examples. Adding these in-context examples improved accuracy only modestly, highlighting the intrinsic difficulty LLMs face in executing deductive reasoning accurately, especially on tasks unfamiliar from pre-training.
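The two deductive settings differ only in what the prompt contains. The sketch below assembles both; the template wording, the `build_deductive_prompt` name, and the base-9 addition rule are hypothetical choices for illustration, not the paper's actual prompts.

```python
from typing import Sequence, Tuple


def build_deductive_prompt(
    rule: str,
    query: str,
    examples: Sequence[Tuple[str, str]] = (),
    n_shots: int = 0,
) -> str:
    """Assemble a deductive-evaluation prompt (hypothetical template).

    n_shots == 0 gives a zero-shot prompt: only the rule and the query.
    n_shots == 8 gives an "8-IO with mapping function" style prompt.
    """
    if n_shots > len(examples):
        raise ValueError("not enough examples for the requested number of shots")
    blocks = [f"Rule: {rule}"]
    for x, y in examples[:n_shots]:
        blocks.append(f"Input: {x}\nOutput: {y}")
    blocks.append(f"Input: {query}\nOutput:")
    return "\n\n".join(blocks)


RULE = "All numbers are written in base 9. Add the two numbers."
# Eight worked base-9 additions, e.g. 12 (base 9) = 11 and 11 + 8 = 19 = "21".
SHOTS = [
    ("5+5", "11"), ("8+1", "10"), ("7+7", "15"), ("12+8", "21"),
    ("26+3", "30"), ("44+5", "50"), ("17+17", "35"), ("38+8", "47"),
]

zero_shot = build_deductive_prompt(RULE, "61+28")
eight_io = build_deductive_prompt(RULE, "61+28", SHOTS, n_shots=8)
print(eight_io)
```

In both settings the rule is stated outright, so the model only has to apply it; any remaining errors therefore reflect deductive execution rather than rule discovery.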

Implications and Future Directions

The findings from this paper underscore a critical insight: while LLMs excel at generalizing from examples (inductive reasoning), they struggle considerably with tasks that require strict adherence to provided instructions (deductive reasoning). This has significant implications for deploying LLMs in applications that demand precise, complex rule-following, such as legal reasoning or medical diagnostics.

Future work should focus on refining LLMs' deductive reasoning by exploring hybrid approaches that integrate symbolic logic systems with LLMs. Further research into improving the interpretability and robustness of inductive learning frameworks like SolverLearner could also yield substantial advancements in this domain. Moreover, investigating the impact of different pre-training strategies and dataset compositions on both inductive and deductive reasoning abilities can provide deeper insights into optimizing LLM designs.

Conclusion

The distinction between inductive and deductive reasoning in LLMs presented by Cheng et al. provides a nuanced understanding of where these models excel and where they falter. The SolverLearner framework showcases the impressive inductive reasoning prowess of LLMs, while also highlighting their limitations in deductive reasoning. This dichotomy is pivotal for both theoretical advancements in artificial intelligence and practical applications reliant on robust and reliable reasoning capabilities.