From Feature Importance to Natural Language Explanations Using LLMs with RAG

This paper addresses the challenge of making AI systems interpretable to non-technical users. It proposes an approach to explainable AI (XAI) that integrates large language models (LLMs) with a decomposition-based method for extracting feature importance. The focus is on generating natural language explanations for scene-understanding tasks, which are central to autonomous systems such as autonomous vehicles.

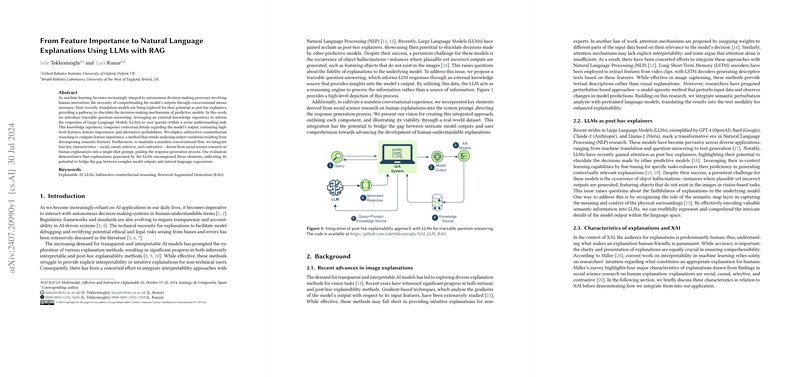

The core contribution is a method the paper calls traceable question-answering, built on Retrieval-Augmented Generation (RAG). RAG has the LLM consult an external knowledge base before answering, which reduces hallucination and grounds responses in verifiable model outputs. The knowledge base holds contextual data such as feature importance values and predictions, so the LLM acts as a reasoning engine rather than as the source of information.
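The retrieve-then-prompt flow described above can be sketched in a few lines. This is a minimal illustration, not the paper's implementation: the `Record` type, the word-overlap retriever, the prompt wording, and all example values are assumptions made up for this sketch, and no LLM is actually called.

```python
from dataclasses import dataclass


@dataclass
class Record:
    """One entry in a hypothetical external knowledge base."""
    topic: str  # e.g. "feature_importance" or "prediction"
    text: str   # a model output rendered as plain text


def retrieve(query: str, records: list[Record], k: int = 2) -> list[Record]:
    """Return the k records sharing the most words with the query.

    Word overlap is a stand-in for a real retriever (assumption).
    """
    query_words = set(query.lower().split())

    def overlap(record: Record) -> int:
        return len(query_words & set(record.text.lower().split()))

    return sorted(records, key=overlap, reverse=True)[:k]


def build_prompt(query: str, context: list[Record]) -> str:
    """Number each retrieved record so the answer can cite its sources."""
    numbered = "\n".join(f"[{i}] {r.text}" for i, r in enumerate(context, 1))
    return (
        "Answer using only the numbered context and cite the entries you use.\n\n"
        f"Context:\n{numbered}\n\nQuestion: {query}"
    )


# Invented example values, for illustration only.
records = [
    Record("prediction", "the model predicted braking for the current scene"),
    Record("feature_importance", "pedestrian importance 0.62 for the braking prediction"),
    Record("feature_importance", "sky importance 0.01"),
]
query = "which feature drove the braking prediction"
top = retrieve(query, records)
prompt = build_prompt(query, top)
```

Numbering the retrieved entries is what makes the answer traceable in this sketch: a response can point back to `[1]` or `[2]`, and a reader can check the claim against the stored model output.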

Methodology

The paper introduces a decomposition-based approach to infer feature importance, which involves systematically removing input components and observing changes in model outputs. This approach produces feature importance values that help in understanding model behavior. These values are then utilized in conjunction with a system prompt designed to imbue the LLMs with characteristics derived from social science research on human explanations. These characteristics include being social, causal, selective, and contrastive.
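The remove-and-observe idea can be illustrated with a generic ablation loop. The summary does not give the paper's exact decomposition, so the sketch below is only a simplified analogue: `toy_model`, its weights, and the scene representation (semantic class mapped to an amount) are hypothetical.

```python
from typing import Callable

Scene = dict[str, float]  # semantic class -> amount present (hypothetical encoding)


def toy_model(scene: Scene) -> float:
    """Hypothetical stand-in for a scene-understanding model; returns one score."""
    weights = {"pedestrian": 0.6, "vehicle": 0.3, "road": 0.1}  # invented values
    return sum(weights.get(name, 0.0) * amount for name, amount in scene.items())


def removal_importance(model: Callable[[Scene], float], scene: Scene) -> Scene:
    """Importance of a component = drop in model output when it is removed."""
    baseline = model(scene)
    importance = {}
    for name in scene:
        reduced = {k: v for k, v in scene.items() if k != name}
        importance[name] = baseline - model(reduced)
    return importance


scene = {"pedestrian": 1.0, "vehicle": 1.0, "road": 1.0, "sky": 1.0}
importance = removal_importance(toy_model, scene)
most_important = max(importance, key=importance.get)
```

Because each score is tied to a named semantic component, the resulting values can be stored as text in the knowledge base and quoted directly in an explanation.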

To achieve this, the system prompt directs the LLMs to format responses in a manner that mirrors human conversational norms, leveraging politeness and empathy while maintaining technical accuracy. The methodology is demonstrated through an application in scene-understanding tasks, specifically using urban scene segmentation data.
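A system prompt of this kind might be assembled as follows. The four traits come from the text above; the rule wording, the function names, and the example context string are assumptions for illustration, and the role/content message list is simply a common convention for chat-style models rather than the paper's documented setup.

```python
# Trait names are from the text; each rule's wording is a hypothetical illustration.
TRAITS = {
    "social": "Address the user politely and adapt to what they seem to know.",
    "causal": "State which input features caused the prediction.",
    "selective": "Mention only the few most important causes.",
    "contrastive": "Explain why this outcome occurred instead of a plausible alternative.",
}


def build_system_prompt(traits: dict[str, str]) -> str:
    """Turn the trait rules into one instruction block for the model."""
    rules = "\n".join(f"- {name.capitalize()}: {rule}" for name, rule in traits.items())
    return (
        "You explain the outputs of a scene-understanding model to non-technical users.\n"
        "Use only the retrieved context and follow these rules:\n" + rules
    )


def build_messages(question: str, context: str) -> list[dict[str, str]]:
    """Pair the system prompt with the user's question and retrieved context."""
    return [
        {"role": "system", "content": build_system_prompt(TRAITS)},
        {"role": "user", "content": f"Context:\n{context}\n\nQuestion: {question}"},
    ]


# Invented question and context, for illustration only.
messages = build_messages("Why did the car slow down?", "pedestrian importance: high")
```

Keeping the traits in a dictionary makes it easy to switch one off and compare responses, which is one way such characteristics could be evaluated separately.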

Evaluation and Results

The approach is evaluated with two models, GPT-3.5 and GPT-4. Their explanations are assessed on sociability, causality, selectiveness, and contrastiveness. GPT-4 outperforms GPT-3.5 in all four categories, with higher accuracy and better causal inference. Its responses also express causality and contrastiveness with more nuance, which supports its suitability for generating effective explanations.

The paper also evaluates simplicity in explanations, accounting for the frequency of technical jargon, response length, and the number of causes cited. GPT-4 again stands out by providing simpler and more comprehensible responses, suggesting its effectiveness in bridging the gap between complex model outputs and user understanding.
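The three simplicity measures can be approximated with simple text statistics. The sketch below is a rough heuristic, not the paper's scoring procedure: the jargon list and the causal markers are assumptions, and counting markers such as "since" will also pick up non-causal uses.

```python
import re

# Hypothetical term lists; a real evaluation would need a curated vocabulary.
JARGON = {"segmentation", "logit", "softmax", "gradient", "saliency"}
CAUSAL_MARKERS = ("because", "due to", "since", "as a result of")


def simplicity_metrics(response: str) -> dict[str, int]:
    """Count words, jargon terms, and causal markers in one response."""
    words = re.findall(r"[a-z']+", response.lower())
    text = " ".join(words)  # normalized text for multi-word marker matching
    cause_count = sum(
        len(re.findall(rf"\b{re.escape(marker)}\b", text)) for marker in CAUSAL_MARKERS
    )
    return {
        "length_words": len(words),
        "jargon_count": sum(1 for w in words if w in JARGON),
        "cause_count": cause_count,
    }


plain = simplicity_metrics(
    "The car slowed down because a pedestrian was close, and also due to the wet road."
)
technical = simplicity_metrics("Softmax logit for the segmentation mask was high.")
```

Under these assumptions, a shorter response with fewer jargon terms and a small number of cited causes would count as simpler.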

Implications and Future Directions

The research has implications for XAI, emphasizing the role of human-friendly explanations in building trust in AI systems and making them more usable. By combining semantic feature importance with LLMs, the approach offers a way to make AI-driven decisions clearer to users. Because the external knowledge base can be updated dynamically, the method can be adapted to other domains.

Future research could explore dialogue management strategies that personalize the interaction, and could integrate multimodal elements for richer explanations. Consistency of feature importance estimates across algorithms will also be important for robust and reliable XAI frameworks. The work provides a foundation for making AI more interpretable and accountable to its users, which in turn supports broader acceptance of autonomous systems.